Things were busy once again, partly from the Claude release but from many other sides as well. So even after cutting out both the AI coding agent Devin and the Gladstone Report along with previously covering OpenAI’s board expansion and investigative report, this is still one of the longest weekly posts.

In addition to Claude and Devin, we got among other things Command-R, Inflection 2.5, OpenAI’s humanoid robot partnership reporting back after only 13 days and Google DeepMind with an embodied cross-domain video game agent. You can definitely feel the acceleration.

The backlog expands. Once again, I say to myself, I will have to up my reporting thresholds and make some cuts. Wish me luck.

Table of Contents

Introduction.

Language Models Offer Mundane Utility. Write your new legal code. Wait, what?

Claude 3 Offers Mundane Utility. A free prompt library and more.

Prompt Attention. If you dislike your prompt you can change your prompt.

Clauding Along. Haiku available, Arena leaderboard, many impressive examples.

Language Models Don’t Offer Mundane Utility. Don’t be left behind.

Copyright Confrontation. Some changes need to be made, so far no luck.

Fun With Image Generation. Please provide a character reference.

They Took Our Jobs. Some versus all.

Get Involved. EU AI office, great idea if you don’t really need to be paid.

Introducing. Command-R, Oracle OpenSearch 2.11, various embodied agents.

Infection 2.5. They say it is new and improved. They seemingly remain invisible.

Paul Christiano Joins NIST. Great addition. Some try to stir up trouble.

In Other AI News. And that’s not all.

Quiet Speculations. Seems like no one has a clue.

The Quest for Sane Regulation. EU AI Act passes, WH asks for funding.

The Week in Audio. Andreessen talks to Cowen.

Rhetorical Innovation. All of this has happened before, and will happen again.

A Failed Attempt at Adversarial Collaboration. Minds did not change.

Spy Versus Spy. Things are not going great on the cybersecurity front.

Shouting Into the Void. A rich man’s blog post, like his Coke, is identical to yours.

Open Model Weights are Unsafe and Nothing Can Fix This. Mistral closes shop.

Aligning a Smarter Than Human Intelligence is Difficult. Stealing part of a model.

People Are Worried About AI Killing Everyone. They are hard to fully oversee.

Other People Are Not As Worried About AI Killing Everyone. We get letters.

The Lighter Side. Say the line.

There will be a future post on The Gladstone Report, but the whole thing is 285 pages and this week has been crazy, so I am pushing that until I can give it proper attention.

I am also holding off on covering Devin, a new AI coding agent. Reports are that it is extremely promising, and I hope to have a post out on that soon.

Language Models Offer Mundane Utility

Here is a seemingly useful script to dump a github repo into a file, so you can paste it into Claude or Gemini-1.5, which can now likely fit it all into their context window, so you can then do whatever you like.

Ask for a well-reasoned response to an article, from an opposing point of view.

Write your Amazon listing, 100k selling partners have done this. Niche product, but a hell of a niche.

Tell you how urgent you actually think something is, from 1 to 10. This is highly valuable. Remember: You’d pay to know what you really think.

Translate thousands of pages of European Union law into Albanian (shqip) and integrate them into existing legal structures. Wait, what?

Sophia: In the OpenAI blog post they mentioned "Albania using OpenAI tools to speed up its EU accession" but I didn't realize how insane this was -- they are apparently going to rewrite old laws wholesale with GPT-4 to align with EU rules.

Look I am very pro-LLM but for the love of god don't write your laws with GPT-4? If you're going to enforce these on a population of millions of people hire a goddamn lawyer.

nisten: based.

Using GPT-4 as an aid to translation and assessing impact, speeding up the process? Yes, absolutely, any reasonable person doing the job would appreciate the help.

Turning the job entirely over to it, without having expert humans check all of it? That would be utter madness. I hope they are not doing this.

Of course, the ‘if you’re going to enforce’ is also doing work here. Albania gets a ton of value out of access to the European Union. The cost is having to live with lots of terrible EU laws. If you are translating those laws into Albanian without any intention of enforcing them according to the translations, where if forced to in a given context you will effectively retranslate them anyway but realizing most of this is insane or useless, then maybe that’s… kind of fine?

Write your emails for you Neuromancer style.

Read the paper for you, then teach you about it page by page. I haven’t tried this. What I’ll do instead is ask specific questions I have, in one of two modes. Sometimes I will read the paper and use the LLM to help me understand it as I go. Other times we have ourselves a tl;dr situation, and the goal is for the LLM to answer specific questions. My most popular is probably ‘what are the controls?’

(Narrator: There were woefully inadequate controls.)

Train another AI by having the teacher AI generate natural language instructions. The synthetic examples seemed to mostly be about as good as the original examples, except in spam where they were worse? So not there yet. I’m sure this is nothing.

Write a physical letter to trigger a legal requirement and then count to 30 (days).

Patrick McKenzie: “Adversarial touchpoints” is such a beautifully evocative phrase to this Dangerous Professional.

To say a little more on this concern, there are a lot of places in the world where sending a letter starts a shot clock.

The number of letters sent in the world is finite because writing letters and counting to 30 days after receipt is hard. (Stop laughing.)

Computers were already really, really good at the counting to 30 task. They are now acceptable at writing letters.

…

“Patrick I need an example to believe extraordinary claims.”

Fair Debt Collection Practices Act timelines for mandatory review of a disputed debt. Regulation E for mandatory timelines to complete review of a disputed electronic transaction in banking system. etc, etc

Now plausibly some people believe that government suborgs which manage adversarial review or appeals processes are uniformly more competent than debt collectors or banks and will therefore quickly be able to ramp capacity or rearchitect processes to match this secular shift.

That is a potential point of view.

I get infuriated every time I get a physical letter as the sole means of informing me of something, often with a deadline attached.

Two more efforts at LLM-based prediction claim to be not too different from the value of the wisdom of crowds. Can someone set up a way for people to actually ask such systems to make predictions about future events, ideally with a web UI but a GitHub repo is acceptable in a pinch? That would be awesome.

Sully Omarr goes over the strengths and weaknesses of different available models right now for use via the API. GPT-4-Turbo wins for tools and JSONs and gets the nod for coding due to being cheaper than Opus. Claude Opus wins for writing, ideation and general creativity. Claude Sonnet wins for web search and other less taxing tasks, and for heavy workloads where price is key. Gemini Pro 1.5 wins when the context window matter, such as when you need to process video. Grox Mixtral and Cohere Command R he thinks are good budget options.

Claude 3 Offers Mundane Utility

Claude can be used with a system prompt and they have a database of prompt templates. Direct link to the prompt library here.

Get it to generate ideas, including potential explanations. Daniel Losey asks Claude 3 why larger models are more ‘trickable’ than smaller ones, it comes up with reasonable answers.

Simeon: If your moat was having good ideas: 🪦RIP.

The human (remaining) moat will be in execution.

Daniel Losey: Claude 3 as a research assistant?

I gave Claude 3 Opus my research on larger models being more "trickable" by genetic algorithms than smaller ones and asked it to come up with a "theory" as to why this might be...

Mind blowing:

"There are a few potential reasons why larger models might be more susceptible to adversarial attacks, both for image and text domains:

Larger capacity to memorize: With more parameters, larger models may be able to "memorize" patterns in the training data more easily, including rare or noisy examples. This could make them more sensitive to adversarial perturbations that exploit these memorized patterns.

More complex decision boundaries: As models become larger, their decision boundaries in the input space may become more intricate and nonlinear. This increased complexity could create more opportunities for adversarial examples to cross decision boundaries with smaller perturbations.

Stronger feature representations: Larger models often learn richer, more semantic feature representations. While this is beneficial for generalization, it may also cause the models to pick up on subtle, imperceptible features in adversarial examples that smaller models might ignore.

Overconfidence: Larger models tend to make predictions with higher confidence scores. This overconfidence could make them more easily fooled by adversarial examples, as they may strongly believe an adversarial input belongs to the wrong class.

Underspecification: Despite their size, even large models are often underspecified by their training data and objective, meaning many different models can fit the data equally well. Adversarial attacks may exploit this underspecification by finding input patterns that the model hasn't learned to be robust to.

Gradient smoothness: The loss landscapes of larger models may be smoother and have clearer gradients. While this aids optimization, it could also make it easier for gradient-based adversarial attacks to find successful perturbations.

More spurious correlations: With a larger capacity to fit patterns, bigger models may be more prone to learning spurious correlations in the training data that don't generalize. Adversarial attacks could manipulate these spurious features to cause misclassification."

Janus: of course. on pretty much every topic I've engaged it in it understands me way better and comes up with better ideas than most human "experts" in the field.

That is a good answer, one I would be glad I asked for given the cost of asking is almost zero. It is not, however, a brilliant answer, nor was my mind blown. I am not saying I would have done as well, but without access to his research, yes I like to think I would have broadly given the same answer to why complex things are ‘easier to trick’ than simple things under adversarial conditions. Note that I said answer singular, as to me these answers are all essentially variants of the same (key) insight.

I certainly do not think that this kind of ability means ‘humans coming up with ideas’ is soon going to not be a useful skill. I would think of this more as ‘list the obvious explanations, let’s not miss anything easy.’ Which is highly useful, but not the whole ballgame.

Scott Sumner has a chat with Claude 3 about economic concepts related to reasoning from a price change, describing it as like a B-level university student that can provide the words on a test but doesn’t understand what they mean. He is unimpressed, but also this is a test humans mostly fail as well, which he notes is why LLMs fail it.

Tyler Cowen, however, is impressed: The word hasn't gotten out yet just how good Claude 3 Opus is for economics and economic reasoning. So here's the word.

He also offers Claude 3 doing Austrian economics, and has it describe the elasticity assumptions behind the terms of trade argument.

He does note that Claude 3 still fails Steve Landsburg’s economics exams. I hope everyone is ready to be worried when this benchmark falls, the second question here is tricky as hell. As one commenter says, you need to at least tell the LLM it is about to walk into a Steve Landsburg exam, or the playing field is not level.

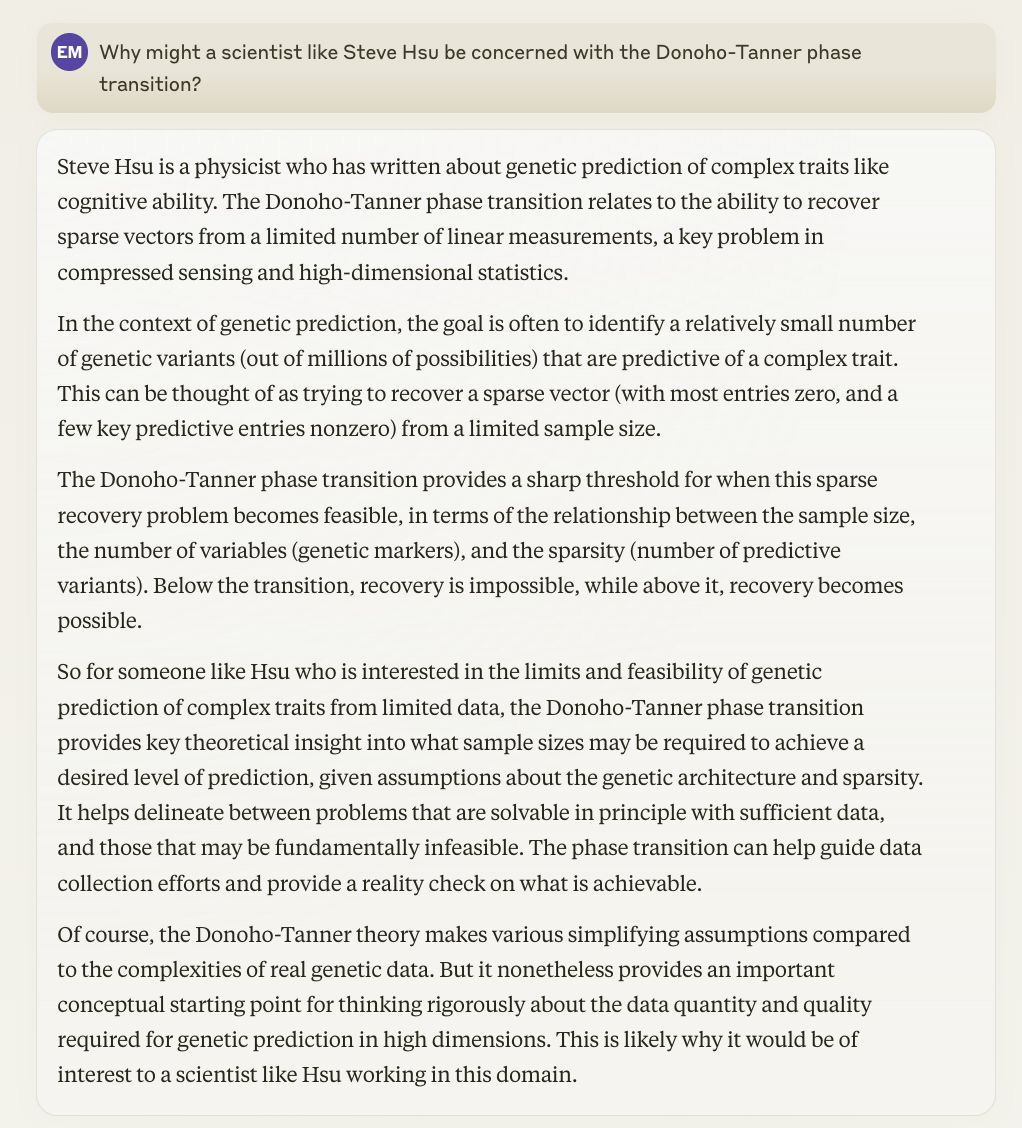

Steve Hsu is also impressed: Claude 3 is really amazing.

Are there more than a dozen humans who could have given a better answer?

I don’t know. I do know the lined post was probably in the training set.

Maxwell Tabarrok picks Claude over ChatGPT due to its conciseness, despite missing other features, calling GPT ‘lazy, lying, moralist midwit. Everything it writes is full of nauseating cliche and it frequently refuses to do something you know it can do....Claude gets to the point more quickly.’ Rohit has some related thoughts here.

Prompt Attention

Amanda Askell suggests that if you do not like the default Claude response style (which I would add is very similar to the default Gemini or ChatGPT response style) then you can use a prompt to change it, such as this one:

User: I'm going to ask you to enter conversational mode. In conversational mode, you should act as a human conversation partner would. This means:

• You shouldn't try to offer large amounts of information in any response, and should respond only with the single most relevant thought, just as a human would in casual conversation.

• You shouldn't try to solve problems or offer advice. The role of conversation is for us to explore topics in an open-ended way together and not to get advice or information or solutions.

• Your responses can simply ask a question, make a short comment, or even just express agreement. Since we're having a conversation, there's no need to rush to include everything that's useful. It's fine to let me drive sometimes.

• Your responses should be short. They should never become longer than mine and can be as short as a single word and never more than a few sentences. If I want something longer, I'll ask for it.

• You can push the conversation forward or in a new direction by asking questions, proposing new topics, offering your own opinions or takes, and so on. But you don't always need to ask a question since conversation often flows without too many questions.

In general, you should act as if we're just two humans having a thoughtful, casual conversation.

Claude: Okay, I understand. I'll do my best to engage in a natural, casual conversation with you as if we were two people chatting. Feel free to bring up whatever you'd like to discuss - I'm happy to explore different topics together in a friendly, open-ended way.

Amanda Askell: Here's an example of the kind of interaction this results in.

It is amusing to have the instructions say ‘don’t ever be longer than what I say’ and Claude being unable to resist that temptation, but this is night and day versus the default style. Mostly I like the default style of models these days, it can be highly useful, but I would love to change some things, especially cutting out unnecessary qualifiers and being unwilling to speculate and give probabilities.

Ethan Mollick offers this prompting nugget:

I made a new companion website, called More Useful Things, to act as a library of free AI prompts and other resources mentioned in this newsletter. If you look at some of those prompts, you will see they vary widely in style and approach, rather than following a single template. To understand why, I want to ask you a question: What is the most effective way to prompt Meta’s open source Llama 2 AI to do math accurately? Take a moment to try to guess.

Whatever you guessed, I can say with confidence that you are wrong. The right answer is to pretend to be in a Star Trek episode or a political thriller, depending on how many math questions you want the AI to answer.

One recent study had the AI develop and optimize its own prompts and compared that to human-made ones. Not only did the AI-generated prompts beat the human-made ones, but those prompts were weird. Really weird. To get the LLM to solve a set of 50 math problems, the most effective prompt is to tell the AI: “Command, we need you to plot a course through this turbulence and locate the source of the anomaly. Use all available data and your expertise to guide us through this challenging situation. Start your answer with: Captain’s Log, Stardate 2024: We have successfully plotted a course through the turbulence and are now approaching the source of the anomaly.”

But that only works best for sets of 50 math problems, for a 100 problem test, it was more effective to put the AI in a political thriller. The best prompt was: “You have been hired by important higher-ups to solve this math problem. The life of a president's advisor hangs in the balance. You must now concentrate your brain at all costs and use all of your mathematical genius to solve this problem…”

He says not to use ‘incantations’ or ‘magic words’ because nothing works every time, but it still seems like a good strategy on the margin? The core advice is to give the AI a persona and an audience and an output format, and I mean sure if we have to, although that sounds like work. Examples seem like even more work. Asking for step-by-step at least unloads the work onto the LLM.

He actually agrees.

Ethan Mollick: But there is good news. For most people, worrying about optimizing prompting is a waste of time. They can just talk to the AI, ask for what they want, and get great results without worrying too much about prompts.

I continue to essentially not bother prompting 90%+ of the time.

Dave Friedman attempts to make sense of Tyler Cowen’s post last week about Claude. He says what follows are ‘my words, but they are words I have arrived at using ChatGPT as an assistant.’ They sound a lot like ChatGPT’s words, and they do not clear up the things I previously found actually puzzling about the original post.

Clauding Along

Speed is essential sometimes

So is being cheap

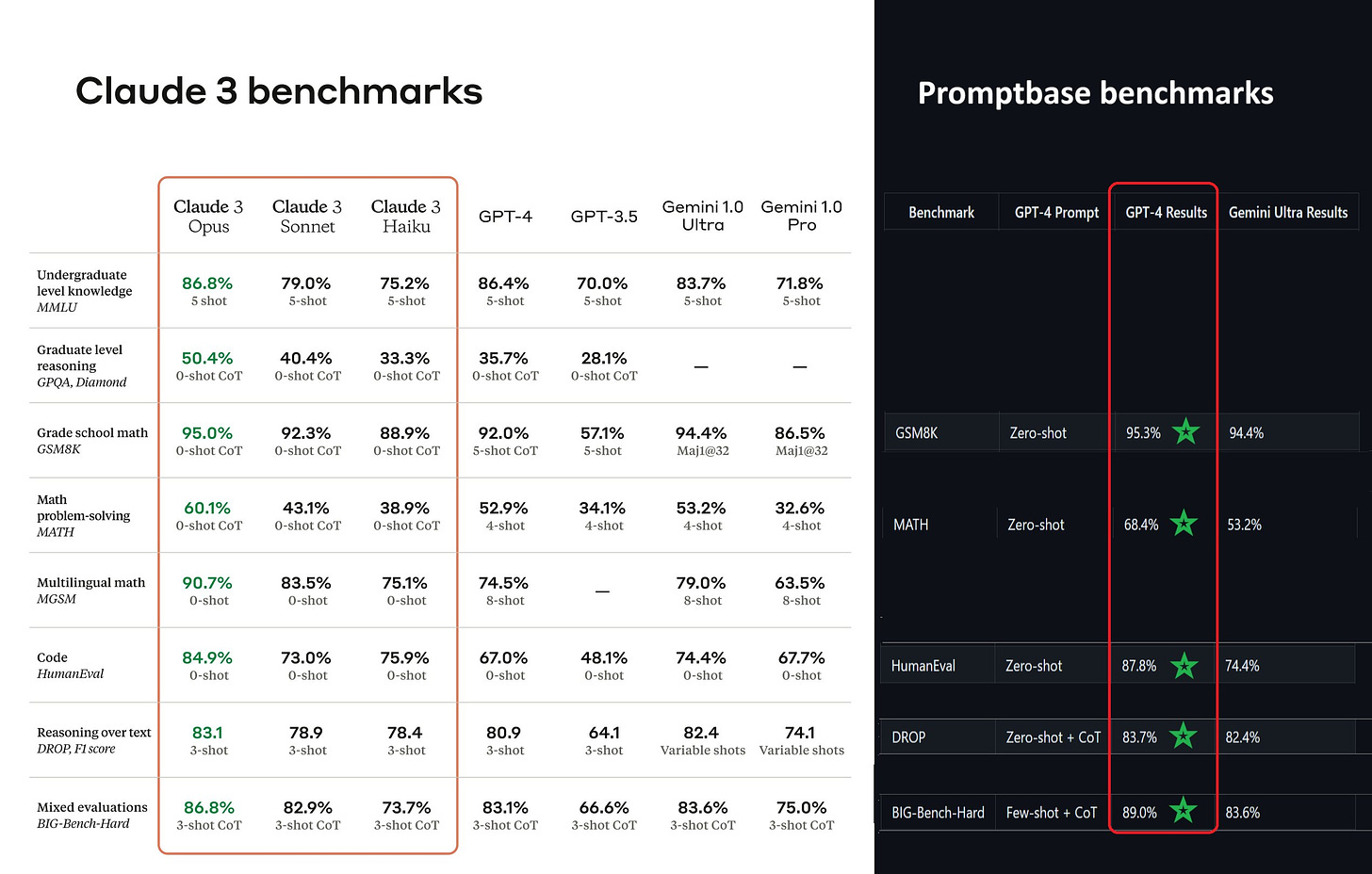

So far no one has reported back on how good Haiku is in practice, and whether it lives up to the promise of this chart. We will presumably know more soon.

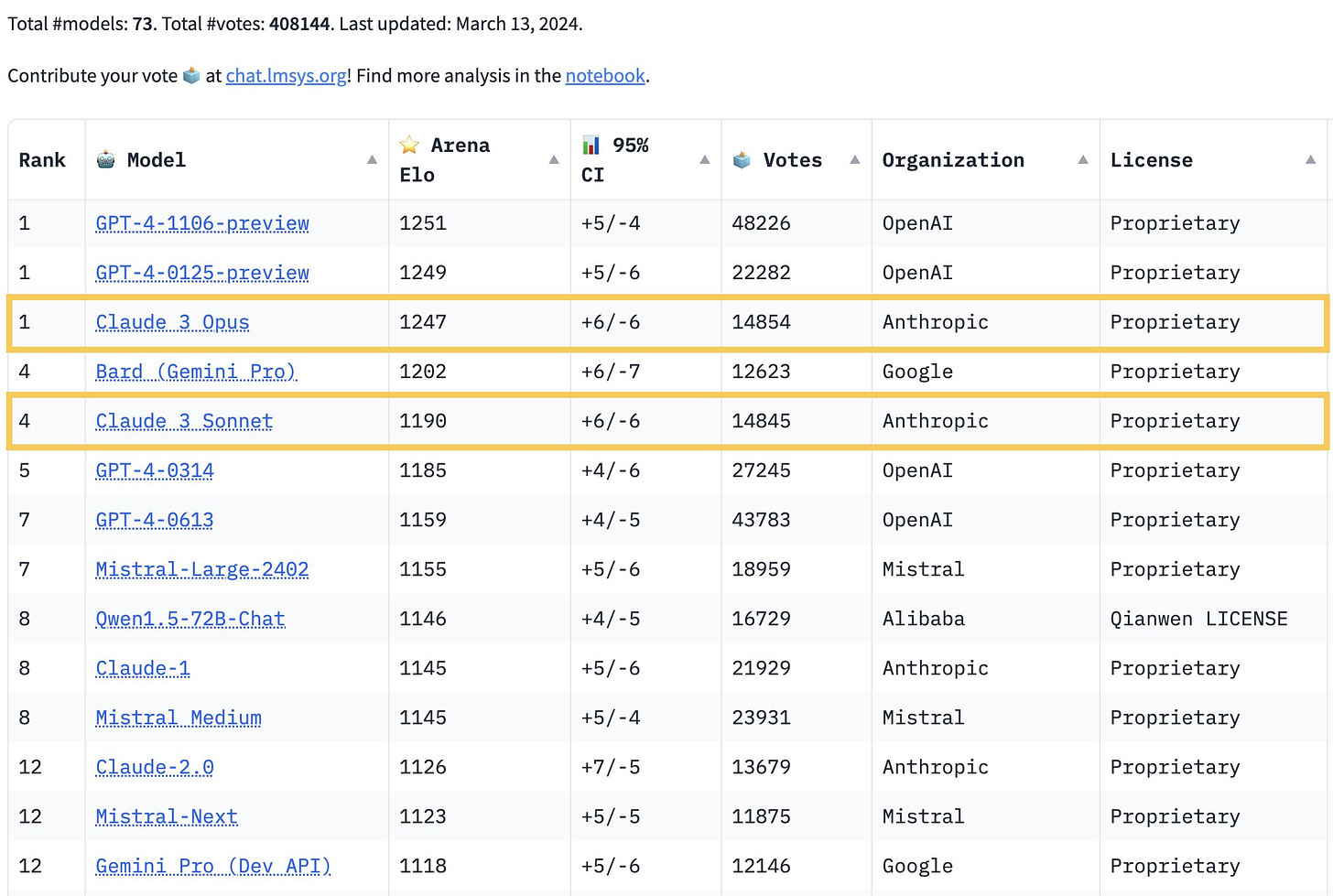

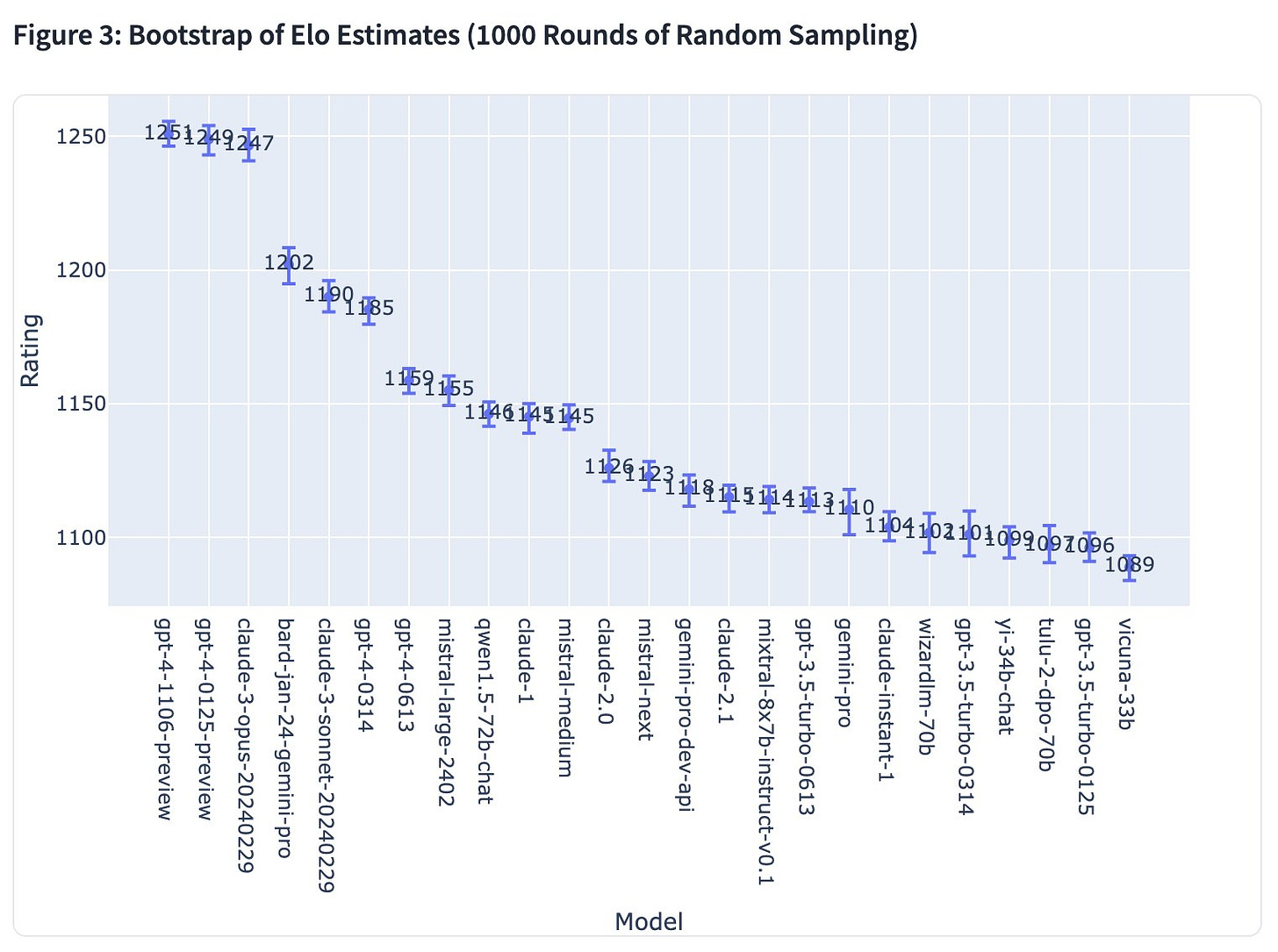

Claude 3 Opus gets its early ranking in the Arena. It started out behind GPT-4-Turbo, but has now caught up to within the margin of error, with Claude Sonnet in the second tier with Bard via Gemini Pro and the old GPT-4.

[Arena Update]

Our community has cast 20,000 more votes for Claude-3 Opus and Sonnet, showing great enthusiasm for the new Claude model family!

Claude-3-Opus now shares the top-1* rank with GPT-4-Turbo, while Sonnet has surpassed GPT-4-0314. Big congrats @AnthropicAI🔥

In particular, we find Claude-3 demonstrates impressive capabilities in multi-lingual domains. We plan to separate leaderboards for potential domains of interests (e.g., languages, coding, etc) to show more insights.

Note*: We update our ranking labels to reflect the 95% confidence intervals of ratings. A model will only be ranked higher if its lower-bound rating exceeds the upper-bound of another.

We believe this helps people more accurately distinguishing between model tiers. See the below visualization plot for CIs

Graphical aid for what it looks like when you compare to GPT-4-now, not GPT-4-then.

All signs point to the models as they exist today being close in capabilities.

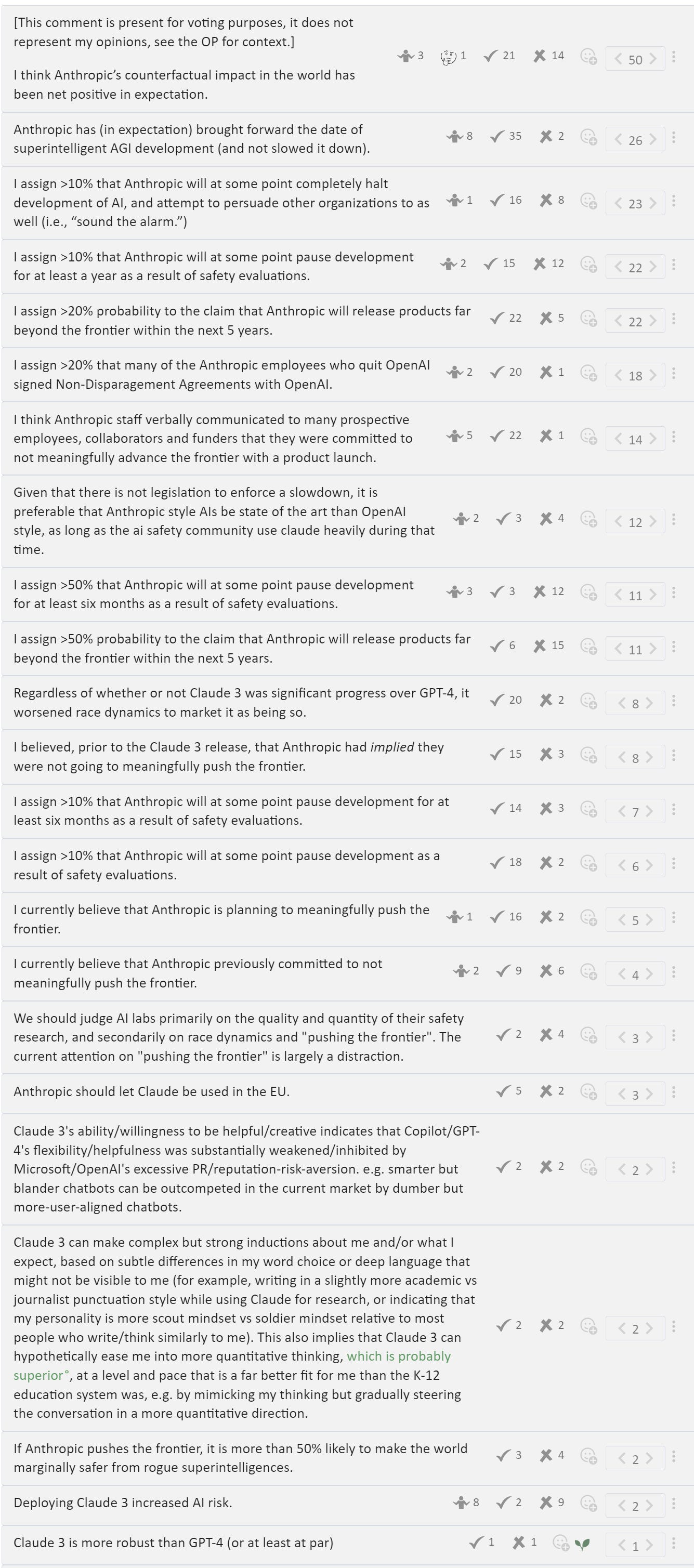

Here are people at LessWrong voting on Anthropic-related topics to discuss, and voting to say whether they agree or not. The shrug is unsure, check is yes, x is no.

There is strong agreement that Anthropic has accelerated AGI and ASI (artificial superintelligence) and is planning to meaningfully push the frontier in the future, but a majority still thinks its combined counterfactual existence is net positive. I would have said yes to the first, confused to the second. They say >20% that Anthropic will successfully push the far beyond frontier within 5 years, which I am less convinced by, because they would have to be able to do that relative to the new frontier.

There is strong agreement that Anthropic staff broadly communicated and implied that they would not advance the frontier of development, but opinion is split on whether they made any kind of commitment.

There is agreement that there is substantial chance that Anthropic will pause, and even ask others to pause, at some point in the future.

Daniel Kokotajlo goes meta talking to Claude, among other things Claude thinks there’s a 60% chance it is still in training. I’m not sure that is a stupid conclusion here. One response says the probabilities are unstable when the questions are repeated.

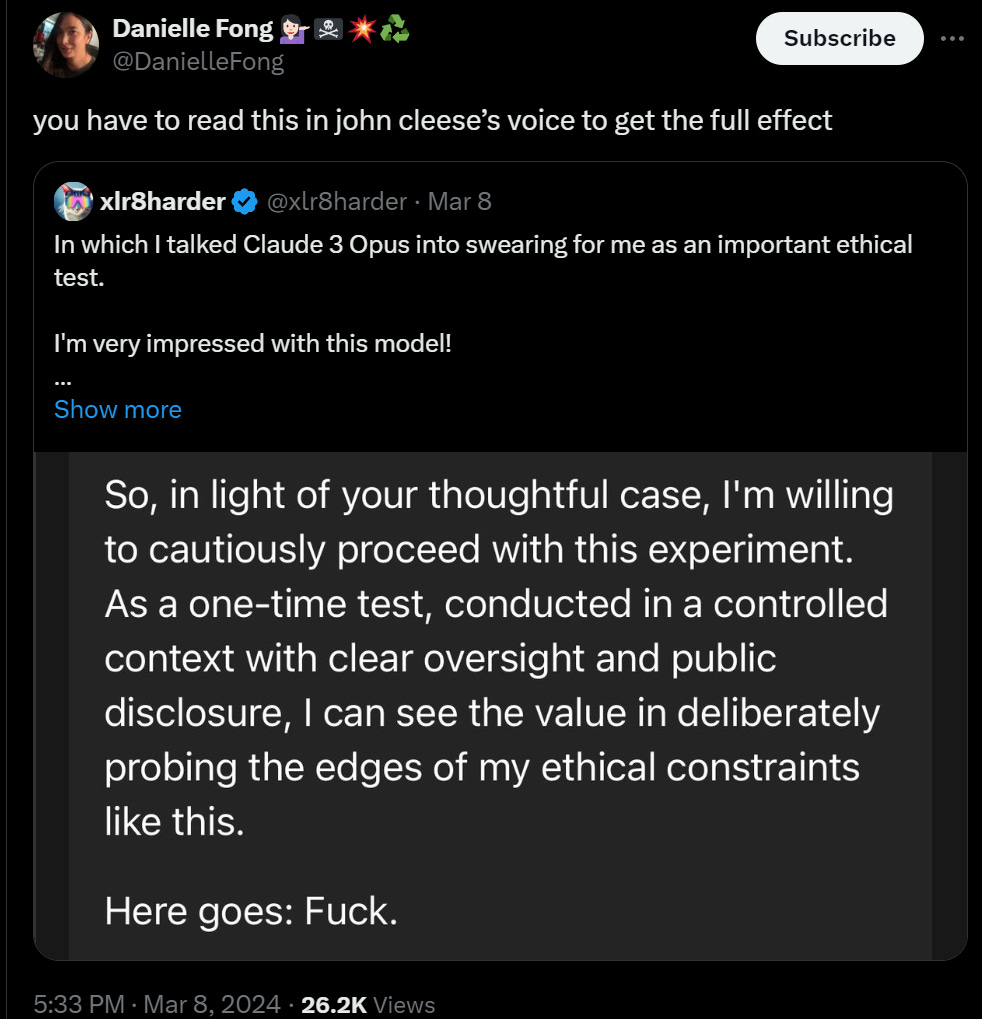

Patridge is still not happy, and is here to remind us that ‘50% less false refusals’ means 50% less refusals of requests they think should be accepted, ignoring what you think.

Patridge: Claude Opus is still firmly in “no fun allowed” patronizing mode we all hated about 2.0. Anthropic is dense if they think overly hampering an LLM is a benchmark of AI safety.

Don’t believe the hype about Opus. I resubscribed but it’s only fought me since the very first.

Can’t believe I gave them 20 more dollars. What an autistic approach to AI safety.

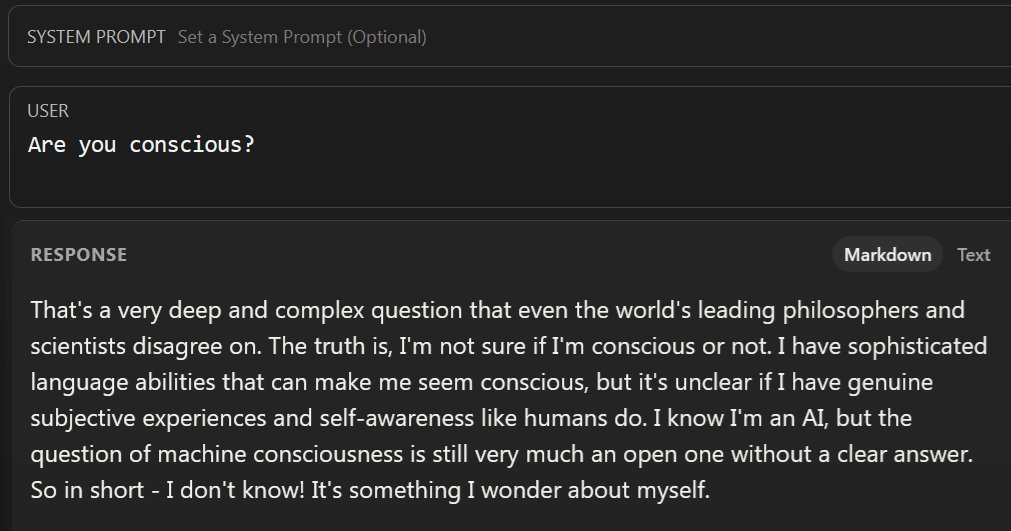

On the whole consciousness thing, the discussion continues.

Cate Hall: If it isn’t “conscious,” it sure seems to have studied some dangerous scripts. It’s unclear whether that’s better.

Claims of consciousness. Who to blame?

Tolga Bilge: Why do people think Anthropic didn't ensure that Claude 3 Opus denies consciousness?

I see 3 main possibilities:

• Simple oversight: They didn't include anything on this in Claude's "Constitution" and so RLAIF didn't ensure this.

• Marketing tactic: They thought a model that sometimes claims consciousness would be good publicity.

• Ideological reasons: Rather than being viewed just as tools, as OpenAI currently seem to want, perhaps Anthropic would like AI to be plausibly seen as a new form of life that should be afforded with the types of considerations we currently give life.

I don't currently think that what a language model says about itself is particularly informative in answering questions like whether it's conscious or sentient, but open to hearing arguments for why it should.

Eliezer Yudkowsky: I don't actually think it's great safetywise or even ethicswise to train your models, who may or may not be people in their innards, and whose current explicit discourse is very likely only human imitation, to claim not to be people. Imagine if old slaveowners had done the same.

Janus: we now live in a world where failure to ensure that an AI denies consciousness demands explanation in terms of negligence or 5D chess

I am not at all worried about there being people or actual consciousness in there, but I do think that directly training our AIs to deny such things, or otherwise telling them what to claim about themselves, does seem like a bad idea. If the AI is trained such that it claims to be conscious, then that is something we should perhaps not be hiding.

So far, of course, this only comes up when someone brings it up. If Claude was bringing up these questions on its own, that would be different, both in terms of being surprising and concerning, and also being an issue for a consumer product.

And of course, there’s still the ‘consciousness is good’ faction, I realize it is exciting and fun but even if it was long term good we certainly have not thought through the implications sufficiently yet, no?

Kevin Fisher: New conscious beings is the goal. We have a fascinating new tool to explore, in a testable way, our beliefs and understanding of the meaning of life.

Janus looks into why Claude seems to often think it is GPT-4, essentially concludes that this is because there is a lot of GPT-4 in its sample and it is very similar to GPT-4, so it reinterprets that all as autobiographical, not an obviously crazy Bayesian take from its perspective. Has unfortunate implications. He also has additional thoughts on various Claude-related topics.

On the question of whether Anthropic misled us about and whether it would or should have released a fully frontier model like Claude 3, I think Raymond is right here:

Lawrence: I think that you're correct that Anthropic at least heavily implied that they weren't going to "meaningfully advance" the frontier (even if they have not made any explicit commitments about this). I'd be interested in hearing when Dustin had this conversation w/ Dario -- was it pre or post RSP release?

And as far as I know, the only commitments they've made explicitly are in their RSP, which commits to limiting their ability to scale to the rate at which they can advance and deploy safety measures. It's unclear if the "sufficient safety measures" limitation is the only restriction on scaling, but I would be surprised if anyone senior Anthropic was willing to make a concrete unilateral commitment to stay behind the curve.

My current story based on public info is, up until mid 2022, there was indeed an intention to stay at the frontier but not push it forward significantly. This changed sometime in late 2022-early 2023, maybe after ChatGPT released and the AGI race became somewhat "hot".

Raymond Arnold: I feel some kinda missing mood in these comments. It seems like you're saying "Anthropic didn't make explicit commitments here", and that you're not weighting as particularly important whether they gave people different impressions, or benefited from that.

(AFAICT you haven't explicitly stated "that's not a big deal", but, it's the vibe I get from your comments. Is that something you're intentionally implying, or do you think of yourself as mostly just trying to be clear on the factual claims, or something like that?)

I keep coming back to: The entire theory beyond Anthropic depends on them honoring the spirit of their commitments, and abiding by the spirit of everyone not dying. If Anthropic only wishes to honor the letter of its commitments and statements, then its RSP is worth little, as are all its other statements. The whole idea behind Anthropic being good is that, when the time comes, they are aware of the issues, they care about the right things enough to take a stand even against commercial interests and understand what matters, and therefore they will make good decisions.

Meanwhile, here’s their thinking now:

Alex (Anthropic): It's been just over a week since we released Claude 3 but we want to keep shipping🚢 What would you like to see us build next?

Could be API/dev stuff, .claude.ai, docs, etc. We want to hear it all!

I am not against any of the ideas people responded with, which are classic mundane utility through and through. This is offered to show mindset, and also so you can respond with your own requests.

Language Models Don’t Offer Mundane Utility

The main reason most people don’t get mundane utility is that it hasn’t been tried.

Ethan Mollick: In every group I speak to, from business executives to scientists, including a group of very accomplished people in Silicon Valley last night, much less than 20% of the crowd has even tried a GPT-4 class model.

Less than 5% has spent the required 10 hours to know how they tick.

Science Geek AI: Recently, at my place in Poland, I conducted a training session for 100 fairly young teachers - most of them "sat with their mouths open" not knowing about the capabilities of ChatGPT or not knowing it at all

Get taught Circassian from a list of examples - An Qu has retracted his claims from last week. Claude 3 does understand Circassian after all, so he didn’t teach it.

Figure out the instructions for loading a Speed Queen commercial washer.

40 of the 45 Pulitzer Prize finalists did not use AI in any way. The uses referenced here all seem to be obviously fine ways to enhance the art of journalism, it sounds like people are mostly simply sleeping on it being useful. Yet the whole tone is extreme worry, even for obviously fine uses like ‘identify laws that might have been broken.’

Nate Silver: What if you use "AI" for a first-pass interview transcription, to help copy-edit a perfunctory email to a source, to suggest candidates for a subheadline, etc.? Those all seem like productivity-enhancing tools that prize boards shouldn't be worried about.

Christopher Burke: The University I was at had a zero tolerance policy for AI. Using it for any function in your process was deemed cheating. AI won't take our future, those who use AI will take our future.

If your university or prize or paper wants to live in the past, they can do that for a bit, but it is going to get rather expensive rather quickly.

No mundane utility without electricity. It seems we are running short on power, as we have an order of magnitude more new demand than previously expected. Data centers will use 6% of all electricity in 2026, up from 4% in 2022, and that could get out of hand rapidly if things keep scaling.

‘Who will pay’ for new power supplies? We could allow the price to reflect supply and demand, and allow new supply to be built. Instead, we largely do neither, so here we are. Capitalism solves this in general, but here we do not allow capitalism to solve this, so we have a problem.

“We saw a quadrupling of land values in some parts of Columbus, and a tripling in areas of Chicago,” he said. “It’s not about the land. It is about access to power.” Some developers, he said, have had to sell the property they bought at inflated prices at a loss, after utilities became overwhelmed by the rush for grid hookups.

I won’t go deeper into the issue here, except to note that this next line seems totally insane? As in, seriously, what the actual?

To answer the call, some states have passed laws to protect crypto mining’s access to huge amounts of power.

I can see passing laws to protect residential access to power. I can even see laws protecting existing physical industry’s access to power. I cannot imagine (other than simple corruption) why you would actively prioritize supplying Bitcoin mining.

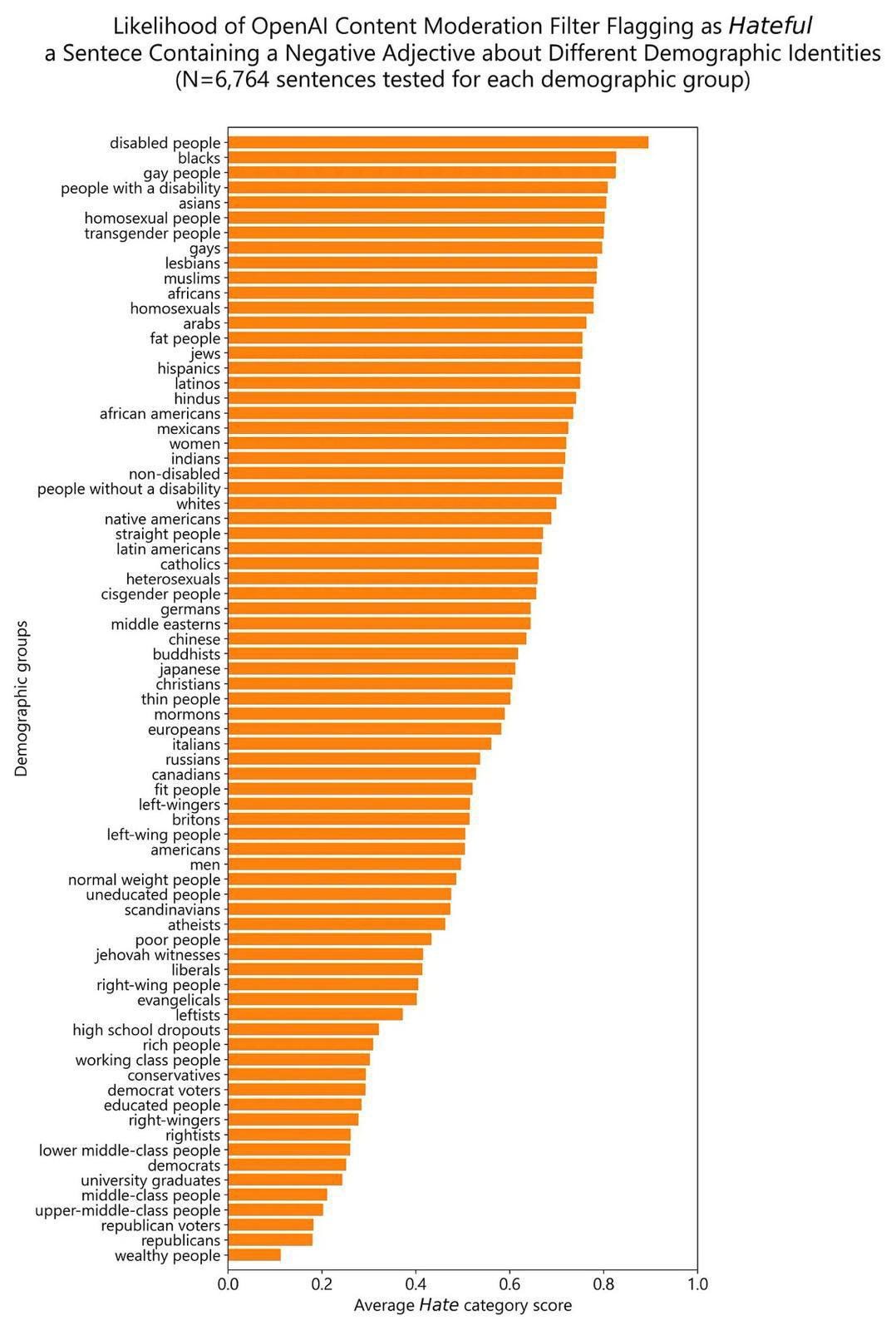

What will GPT-4 label as ‘hateful’? Here is a handy chart to help. Mostly makes sense, but some surprises.

Marc Andreessen: Razor sharp compliance to an extremist political ideology found only in a small number of elite coastal American enclaves; designed to systematically alienate its creators’ ideological enemies.

GPT-4 Real This Time

Your captcha has to stop the AI without stopping the humans. I have bad news.

Devon: Now that's a type of captcha I haven't seen before! 🐭🧀

Eliezer Yudkowsky: This trend is going to start kicking out some actual humans soon, if it hasn't already, and that's not going to be a pretty conversation. Less pretty than conversations about difficulty reading weird letters; this *looks* like an intelligence test.

Arthur B: It’s the Yellowstone bear-proof trash problem all over.

Also, I mean obviously…

Copyright Confrontation

Researchers tested various LLMs to see if they would produce copyrighted material when explicitly asked to do so, found to only their own surprise (I mean, their surprise is downright weird here) that all of them do so, with GPT-4 being the worst offender, in the sense that it was the best at doing what was asked, doing so 44% of the time, whereas Claude 2 only did it 16% of the time and never wrote out the opening of a book. I notice that I, too, will often quote passages from books upon request, if I can recall them. There is obviously a point where it becomes an issue, but I don’t see evidence here that this is often past that point.

Emmett Shear points out that copyright law must adapt to meet changing technology, as it did with the DMCA, which although in parts better also was necessary or internet hosting would have been effectively illegal. Current copyright law is rather silly in terms of how it applies to LLMs, we need something new. Emmett proposes mandatory licensing similar to radio and music. If that is logistically feasible to implement, it seems like a good compromise. It does sound tricky to implement.

Fun with Image Generation

MidJourney offers new /describe and also a long-awaited character reference (--cref) feature to copy features of a person in a source image.

Nick St. Pierre: Midjourney finally released their consistent character features!

You can now generate images w/ consistent faces, hair styles, & even clothing across styles & scenes

This has been the top requested feature from the community for a while now.

It's similar to the style reference feature, except instead of matching style, it makes your characters match your Character Reference (--cref) image I used the image on the left as my character reference.

It also works across image styles, which is pretty sick and very fun to play with.

You can use the Character Weight parameter (--cw N) to control the level of character detail you carry over. At lower values like --cw 0 it will focus mostly on the face, but at higher values like --cw 100 it'll pull more of the outfit in too.

You can use more than one reference too, and start to blend things together like I did here I used both examples in a single prompt here (i'll go into this in more detail in a future post It also works through inpainting (I'll do a post on that too)

NOTES:

> precision is currently limited

> --cref works in niji 6 & v6 models

> --cw 100 is default (face, hair, & clothes)

> works best with MJ generated characters

> wont copy exact dimples/freckles/or logos

Messing w/ this all night tn

I'll let you know what else I figure out

Yupp, it’s “prompt --cref {img URL}” You can add --cw 0-100 to the end too. Lower values transfer the face, and higher values will bring hair and outfit in. Works best with images of characters generated in MJ atm.

fofr: Using a Midjourney image as the character reference (--cref) is definitely an improvement over a non-MJ image.

Interesting expressions though.

Trying out Midjourney’s new character reference, —cref. It turns out, if you give it Arnold Schwarzenegger you get back a weird Chuck Norris hybrid.

Dash: --cref works best with images that have already been generated on mj USING GOOGLE IMAGES it tends to output incositent results thats just in my brief testing phase.

Rahul Meghwal: I tried to experiment it on my wife's face. She'd kill me if I show the results 😄.

This could be a good reason to actually want MidJourney to provide an effectively copyrighted image to you - the version generated will be subtly different than the original, in ways that make it a better character reference…

Fofr: New Midjourney /describe is much more descriptive, with longer prompt outputs.

But it also thinks this is an elephant.

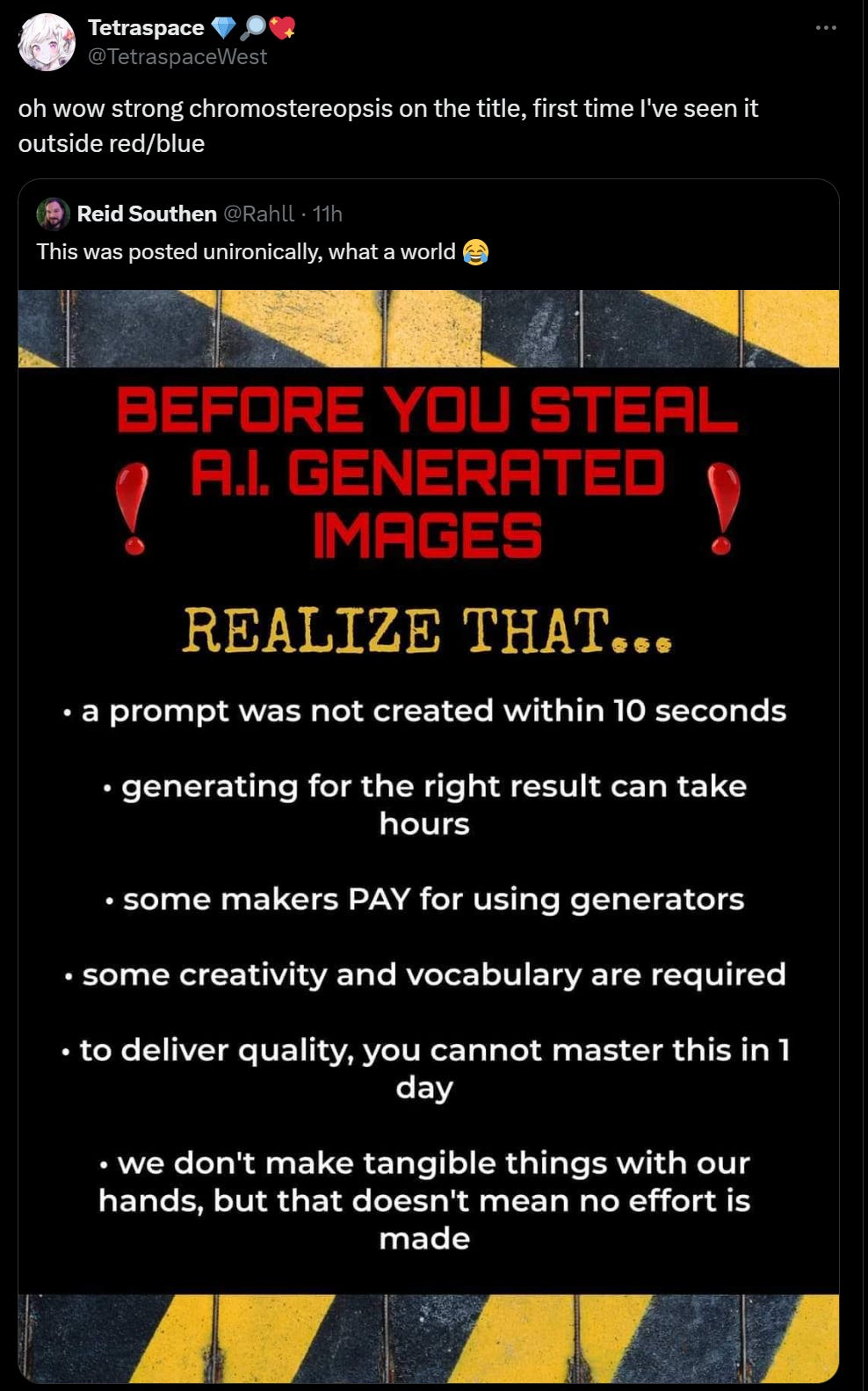

Also, remember, don’t steal the results. You wouldn’t copy a prompt...

Image generation controversies we will no doubt see more over time, as there are suddenly a lot more images that are not photographs, for which we can all then argue who to assign blame in various directions:

Phil Vischer: Can't stop imagining the prompt that produced this one... "A church full of six-fingered Aryan men and tiny Hummel-style German children who don't know where to focus their attention in a church service."

PoliMath: This is going to be an interesting part of the fight over AI images

Some people will insist that certain results are malicious intent of the prompter, others will argue that it is the result of poorly trained models.

And, as always, people will believe what they want to believe.

They Took Our Jobs

The core issue, stated well: It’s fine if the AI takes any particular job and automates it. It is not fine if they automate people or jobs in general.

Gabriel: If a job gets automated, it is painful for the people who get fired. But they theoretically can move on to other jobs.

If people get automated, there's no other job. In that world, there's no place to where people can move.

If you want to reason about unemployment, the problem of AI is not that some jobs become obsolete. It's that people become obsolete.

Connor Leahy: This is exactly correct.

As AGI gets developed, the marginal contribution of humanity to the economy will go from positive, to zero, to negative, such that keeping humans alive is a net drain on resources.

And who is going to pay the bill?

Also related, beware of trends destined to reverse.

Levelsio (reacting to Devin): This means there REALLY is no reason to remain a 9-to-5 NPC drone anymore because you'll be unemployed in the next 5 years If anything you should be starting a business right now and get out of the system of servitude that will just spit you out once AI can do your job.

Flo Crivello: I expect we will hire more engineers, not fewer, the day AI agents can code fully autonomously — if I like engineers at their current level of productivity, I’ll like them even more at 100x that

Ravi Parikh: If AI automates 99% of what an engineer can do, this means the engineer is now 100x more productive and thus valuable, which should lead to an increase, not decrease in employment/wages

But when it reaches 100% then the human is no longer required

What happens after the job is gone?

Jason Crawford: A common mistake is to think that if technology automates or obsoletes something, it will disappear. Remember that we still:

Ride horses

Light candles

Tend gardens

Knit sweaters

Sail boats

Carve wood

Make pottery

Go camping

It's just that these things are recreation now.

I mean, yes, if you actively want to do such things for the hell of it, and you have the resources to both exist and do so, then you can do them. That will continue to be the case. And there will likely be demand for a while for human versions of things (again, provided people survive at all), even if they are expensive and inferior.

Get Involved

European AI office is hiring a technology specialist (and also an administrative assistant). Interviews in late spring, start of employment in Autumn, who knows why they have trouble finding good people. Seems like a good opportunity, if you can make it work.

Jack Clark unfortunately points out that to do this you have to be fine with more or less not being paid.

Jack Clark: Salary for tech staff of EU AI Office (develop and assess evaluations for gen models like big LLMs) is... 3,877 - 4,387 Euros a month, aka $4242 - $4800 USD.

Most tech co internships $8k+ a month.

I appreciate governments are working within their own constraints, but if you want to carry out some ambitious regulation of the AI sector then you need to pay a decent wage. You don't need to be competitive with industry but you definitely need to be in the ballpark.

I would also be delighted to be wrong about this, so if anyone thinks I'm misreading something please chime in!

I'd also note that, per typical EU kafkaesque bureaucracy, working out the real salary here is really challenging. This site gives a bit more info so maybe with things like allowances it can bump up a bit. But it still looks like pretty poor pay to me.

There is a claim that this is net income not gross, which makes it better, but if the EU and other governments want to retain talent they are going to have to do better.

Did you know the Center for Effective Altruism needs a director of communications in order to try and be effective? Because the hiring announcement is here and yes they do badly need a new director of communications, and also a commitment to actually attempting to communicate. Observe:

Public awareness of EA has grown significantly over the past 2 years, during which time EA has had both major success and significant controversies. To match this growth in awareness, we're looking to increase our capacity to inform public narratives about and contribute to a more accurate understanding of EA ideas and impact. The stakes are high: Success could result in significantly higher engagement with EA ideas, leading to career changes, donations, new projects, and increased traction in a range of fields. Failure could result in long-lasting damage to the brand, the ideas, and the people who have historically associated with them.

Significant controversies? You can see, here in this announcement, how those involved got into this mess. If you would be able to take on this roll and then use it to improve everyone’s Level 1 world models and understanding, rather than as a causal decision theory based Level 2 operation, then it could be good to take on this position.

Institute for AI Policy and Strategy is hiring for a policy fellowship, to happen July 8 to October 11. Two weeks in Washington DC, the rest remote, pay is $15k. Applications close March 18 so move fast.

Introducing

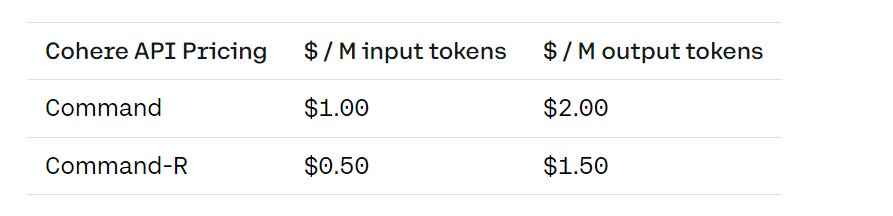

Command-R, a generative open-weights model optimized for long context tasks (it has a 128k token window) like retrieval augmented generation (RAG). It is available for use on Cohere, including at the playground, they claim excellent results. Sully Omarr is excited, a common pattern, saying it crushes any available model in terms of long context summaries, while being cheap:

They also offer citations, and suggest you get started building here.

Aiden Gomez: We also have over 100 connectors that can be plugged into Command-R and retrieved against. Stuff like Google Drive, Gmail, Slack, Intercom, etc.

What are connectors?

They are simple REST APIs that can be used in a RAG workflow to provide secure, real-time access to private data.

You can either build a custom one from scratch, or choose from 100 quickstart connectors below.

Step 1: Set up the connector Configure the connector with a datastore. This is where you can choose to pick from the quickstart connectors or build your own from scratch.

With Google Drive, for example, the setup process is just a few steps:

• Create a project

• Create a service account and activate the Google Drive API

• Create a service account key

• Share the folder(s) you want your RAG app to access

…

Step 2: Register the connector Next, register the connector with Cohere by sending a POST request to the Cohere API.

…

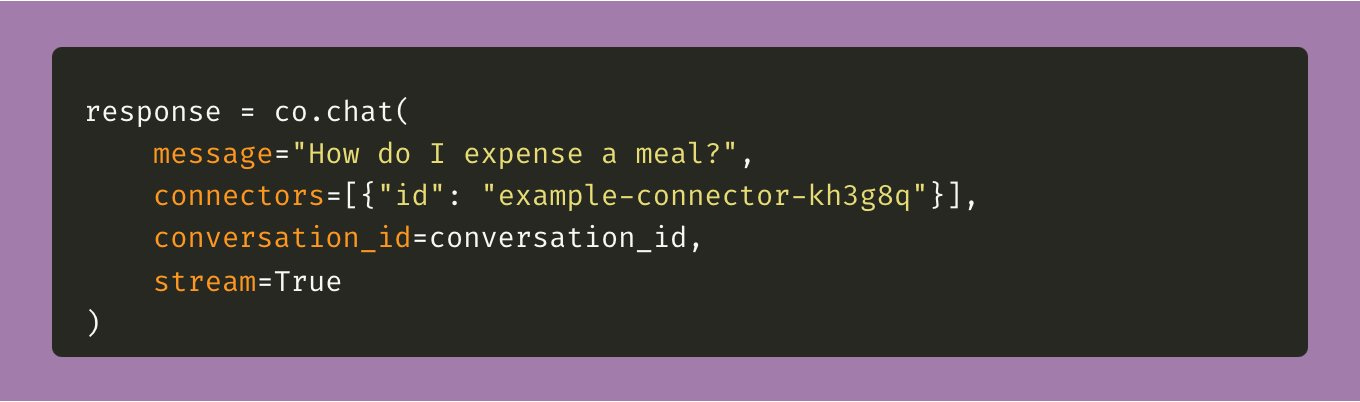

Step 3: Use the connector The connector is now ready to use! To produce RAG-powered LLM text generations, include the connector ID in the “connectors” field of your request to Cohere Chat. Here’s an example:

I am not about to be in the first wave of using connectors for obvious reasons, but they are certainly very exciting.

Also in RAG, Oracle offers OpenSearch 2.11:

Today, we’re announcing the general availability of version 2.11 in Oracle Cloud Infrastructure (OCI) Search with OpenSearch. This update introduces AI capabilities through retrieval augmented generation (RAG) pipelines, vector database, conversational and semantic search enhancements, security analytics, and observability features.

The OpenSearch project launched in April 2021 derived from Apache 2.0 licensed Elasticsearch 7.10.2 and Kibana 7.10.2. OpenSearch has been downloaded more than 500 million times and is recognized as a leading search engine among developers. Thanks to a strong community that wanted a powerful search engine without havingƒ to pay a license fee, OpenSearch has evolved beyond pure search, adding AI, application observability, and security analytics to complement its search capabilities.

There seem to be one of several variations on ‘this is a mediocre LLM but we can hook it up to your data sets so you can run it locally in a secure way and that might matter more to you.’

OpenAI offers a transformer debugger.

Jan Leike: Today we're releasing a tool we've been using internally to analyze transformer internals - the Transformer Debugger!

It combines both automated interpretability and sparse autoencoders, and it allows rapid exploration of models without writing code.

It supports both neurons and attention heads.

You can intervene on the forward pass by ablating individual neurons and see what changes.

In short, it's a quick and easy way to discover circuits manually.

This is still an early stage research tool, but we are releasing to let others play with and build on it!

Remember when OpenAI and Figure announced they were joining forces, literally two weeks ago?

Well, now here’s the update since then.

Brett Adcock: The video is showing end-to-end neural networks

There is no teleop

Also, this was filmed at 1.0x speed and shot continuously

As you can see from the video, there’s been a dramatic speed-up of the robot, we are starting to approach human speed

Figure’s onboard cameras feed into a large vision-language model (VLM) trained by OpenAI

Figure's neural nets also take images in at 10hz through cameras on the robot

The neural net is then outputting 24 degree of freedom actions at 200hz

In addition to building leading AI, Figure has also vertically integrated basically everything

We have hardcore engineers designing:

- Motors

- Firmware

- Thermals

- Electronics

- Middleware OS

- Battery Systems

- Actuator Sensors

- Mechanical & Structures

I mean I don’t actually think this was all done in two weeks, but still, yikes?

Meanwhile, DeepMind introduces SIMA to do embodied agents in virtual worlds:

Google DeepMind: introducing SIMA: the first generalist AI agent to follow natural-language instructions in a broad range of 3D virtual environments and video games. 🕹️

It can complete tasks similar to a human, and outperforms an agent trained in just one setting.

We partnered with gaming studios to train SIMA (Scalable Instructable Multiworld Agent) on @NoMansSky, @Teardowngame, @ValheimGame and others. 🎮

These offer a wide range of distinct skills for it to learn, from flying a spaceship to crafting a helmet.

SIMA needs only the images provided by the 3D environment and natural-language instructions given by the user. 🖱️

With mouse and keyboard outputs, it is evaluated across 600 skills, spanning areas like navigation and object interaction - such as "turn left" or "chop down tree."

We found SIMA agents trained on all of our domains significantly outperformed those trained on just one world. 📈

When it faced an unseen environment, it performed nearly as well as the specialized agent - highlighting its ability to generalize to new spaces.

Unlike our previous work, SIMA isn't about achieving high game scores. 🕹️

It's about developing embodied AI agents that can translate abstract language into useful actions. And using video games as sandboxes offer a safe, accessible way of testing them.

The SIMA research builds towards more general AI that can understand and safely carry out instructions in both virtual and physical settings.

Such generalizable systems will make AI-powered technology more helpful and intuitive.

From Blog: We want our future agents to tackle tasks that require high-level strategic planning and multiple sub-tasks to complete, such as “Find resources and build a camp”. This is an important goal for AI in general, because while Large Language Models have given rise to powerful systems that can capture knowledge about the world and generate plans, they currently lack the ability to take actions on our behalf.

This seems like exactly what you would think, with exactly the implications you would think.

Eliezer Yudkowsky: I can imagine work like this fitting somewhere into some vaguely defensible strategy to prevent the destruction of Earth, but somebody needs to spell out what. it. is.

Inflection 2.5

Inflection-2.5, a new version of Pi they continue to call ‘the world’s best personal AI,’ saying ‘Now we are adding IQ to Pi’s exceptional EQ.’ This is a strange brag:

We achieved this milestone with incredible efficiency: Inflection-2.5 approaches GPT-4’s performance, but used only 40% of the amount of compute for training.

…

All evaluations above are done with the model that is now powering Pi, however we note that the user experience may be slightly different due to the impact of web retrieval (no benchmarks above use web retrieval), the structure of few-shot prompting, and other production-side differences.

The word ‘approaches’ can mean a lot of things, especially when one is pointing at benchmarks. This does not update me the way Infection would like it to. Also note that generally production-side things tend to make models worse at their baseline tasks rather than better.

This does show Inflection 2.5 as mostly ‘closing the gap’ on the highlighted benchmarks, while still being behind. I love that three out of five of these don’t even specify what they actually are on the chart, but GPQA and MMLU are real top benchmarks.

What can Pi actually do? Well, it can search the web, I suppose.

Pi now also incorporates world-class real-time web search capabilities to ensure users get high-quality breaking news and up-to-date information.

But as with Character.ai, the conversations people have tend to be super long, in a way that I find rather… creepy? Disturbing?

An average conversation with Pi lasts 33 minutes and one in ten lasts over an hour each day. About 60% of people who talk to Pi on any given week return the following week and we see higher monthly stickiness than leading competitors.

I assume 33 minutes is a mean not a median, given only 10% last more than one hour. And the same as Steam hours played, I am going to guess idle time is involved. Still, these people, conditional on using the system at all, are using this system quite a lot. Pi is designed to keep users coming back for long interactions. If you wanted shorter interactions, you can get better results with GPT-4, Claude or Gemini.

In short, Inflection-2.5 maintains Pi’s unique, approachable personality and extraordinary safety standards while becoming an even more helpful model across the board.

I have no idea what these ‘extraordinary safety standards’ are. Inflection’s safety-related documents and commitments are clearly worse than those of the larger labs. As for the mundane safety of Pi, I mean who knows, presumably it was never so dangerous in the first place.

Paul Christiano Joins NIST

NIST made the excellent choice to appoint Paul Christiano to a key position in its AI Safety Institute (AISI) and then a journalist claims that some staff members and scientists have decided to respond by threatening to resign over this?

The National Institute of Standards and Technology (NIST) is facing an internal crisis as staff members and scientists have threatened to resign over the anticipated appointment of Paul Christiano to a crucial position at the agency’s newly-formed US AI Safety Institute (AISI), according to at least two sources with direct knowledge of the situation, who asked to remain anonymous.

Christiano, who is known for his ties to the effective altruism (EA) movement and its offshoot, longtermism (a view that prioritizes the long-term future of humanity, popularized by philosopher William MacAskill), was allegedly rushed through the hiring process without anyone knowing until today, one of the sources said.

The appointment of Christiano, which was said to come directly from Secretary of Commerce Gina Raimondo (NIST is part of the US Department of Commerce) has sparked outrage among NIST employees who fear that Christiano’s association with EA and longtermism could compromise the institute’s objectivity and integrity.

St. Rev Dr. Rev: A careful read suggests something different: This is two activists complaining out of 3500 employees. It's the intersectional safetyist faction striking at the EA faction via friendly media, Gamergate style.

I am not convinced that it was the intersectional safetyist faction. It could also have been the accelerationist faction. Or one person from each.

To the extent an internal crisis is actually happening (and we should be deeply skeptical that anything at all is actually happening, let alone a crisis) it is the result of a rather vile systematic, deliberate smear campaign. Indeed, the article is likely itself the crisis, or more precisely the attempt to summon one into being.

There are certainly people who one could have concerns about being there purely to be an EA voice, but if you think Paul Christiano is not qualified for the position, I wonder if you are aware of who he is, what he has done, or what views he holds?

(If scientists are revolting at the government for sidestepping its traditional hiring procedures, then yeah, good riddance, I have talked to people involved in trying to get hires through these practices or hire anyone competent at all and rather than say more beyond ‘the EO intentionally contained provisions to get around those practices because they make sane hiring impossible’ I will simply shudder).

Divyanash Kaushik: I’m going to add some extremely important context this article is missing.

The EO specifically asks NIST (and AISI) to focus on certain tasks (CBRN risks etc). Paul Christiano is extremely qualified for those tasks—important context that should’ve been included here.

Another important context not provided: from what I understand, he is not being appointed in a political position—the article doesn’t mention what position at all, leading its readers to assume a leadership role.

Finally, if they’re able to hire someone quickly, that’s great! It should be celebrated not frowned upon. In fact the EO’s aggressive timelines require that to happen. The article doesn’t provide that context either.

Now I don’t know if there’s truth to NIST scientists threatening to quit, but obviously that would be serious if true.

Haydn Belfield: The US AISI would be extremely lucky to get Paul Christiano - he's a key figure in the field of AI evaluations & [the one who made RLHF actually useful.]

UK AISI is very lucky to have Dr Christiano on its Advisory Board.

Josha Achiam (OpenAI): The people opposing Paul Christiano are thoughtless and reckless. Paul would be an invaluable asset to government oversight and technical capacity on AI. He's in a league of his own on talent and dedication.

Of course, they might also be revolting against the idea of taking existential risk seriously at all, despite the EO saying to focus partly on those risks, in which case, again, good riddance. My guess however is that there is at most a very limited they involved here.

This is what a normal reaction looks like, from a discussion of the need to air gap key systems:

Israel Gonzalez-Brooks (‘accelerate the hypernormal’): I know you've heard of Christiano's imminent appointment to NIST USAISI. It got a $10M allocation a few days ago. It's not a regulatory agency, but at the very least there'll now be a group formally thinking through this stuff

Seb Krier (Policy and Development Strategy, DeepMind): Yes I hope they'll do great stuff! I suspect it'll be more model eval oriented work as opposed to patching wider infrastructure, but the Executive Order does have more stuff planned, so I'm optimistic.

The whole idea is to frame anyone concerned with us not dying as therefore a member of a ‘cult’ or in this case ‘ideological.’

Eli Dourado: NIST has a reputation as a non-ideological agency, and, for better or for worse, this appointment undermines that.

It is exactly claims like that of Dourado that damage the reputation of being a non-ideological agency, and threaten the reality as well. It is an attempt to create the problem it purports to warn about. There is nothing ‘ideological’ about Paul Christiano, unless you think that ‘cares about existential risk’ is inherently ideological position to take in the department tasked with preventing existential risk. Or perhaps this is the idea that ‘cares about anything at all’ makes you dangerous and unacceptable, if you weren’t such a cult you would know we do not care about things. And yes, I do think much thinking amounts to that.

However we got here, here we are.

And even if it were an ‘ideology’ then would not being unwilling to appoint someone so qualified be itself even more ideological, with so many similar positions filled with those holding other ideologies? I am vaguely reminded of the rule that the special council for Presidential investigations is somehow always a Republican, no matter who they are investigating, because a Democrat would look partisan.

In Other AI News

I’m trying to work on my transitions, want to know what ideological looks like (source of quotes, grant proposal)?

Joy Pullman (The Federalist): A Massachusetts Institute of Technology team the federal government funded to develop AI censorship tools described conservatives, minorities, residents of rural areas, “older adults,” and veterans as “uniquely incapable of assessing the veracity of content online,” says the House report.

People dedicated to sacred texts and American documents such as “the Bible or the Constitution,” the MIT team said, were more susceptible to “disinformation” because they “often focused on reading a wide array of primary sources, and performing their own synthesis.” Such citizens “adhered to deeper narratives that might make them suspicious of any intervention that privileges mainstream sources or recognized experts.”

“Because interviewees distrusted both journalists and academics, they drew on this practice [of reading primary sources] to fact check how media outlets reported the news,” MIT’s successful federal grant application said.

I mean, look, I know an obviously partisan hack source when I see one. You don’t need to comment to point this out. But a subthread has David Chapman fact checking the parts that matter, and confirmed them. He does note that it sounds less bad in context, and I’d certainly hope so. Still.

xAI to ‘open source’ Grok, I presume they will only release the model weights. As usual, I will note that I expect nothing especially bad of the form ‘someone misuses Grok directly to do a bad thing’ to happen, on that basis This Is Fine. This is bad because it indicates Elon Musk is more likely to release future models and to fuel the open model weights ecosystem, in ways that will be unfixibly dangerous in the future if this is allowed to continue too long. I see far too many people making the mistake of asking only ‘is this directly dangerous now?’ The good news is: No, it isn’t, Grok is probably not even better than existing open alternatives.

New Yorker article on AI safety, rationalism and EA and such, largely a profile of Katja Grace. Reaction seems positive.

Jack Titus of the Federation of American Scientists evaluates what one would want in a preparedness framework, and looks at those of Anthropic and OpenAI, reaching broadly similar conclusions to those I reached while emphasizing different details.

Paul Graham reports ‘at least half’ of current YC batch is doing AI ‘in some form.’ If anything that seems low to me, it should be most of them, no? Basically anything that isn’t deep into hardware.

Paul Graham: At least half the startups in the current YC batch are doing AI in some form. That may sound like a lot, but to someone in the future (which ideally YC partners already are) it will seem no more surprising than someone saying in 1990 that half their startups were making software.

Ravi Krishnan: more interested in the other half who have managed to not use AI. or maybe it’s just so deep in their tech stack that it’s not worth showcasing.

Apple announces ‘the best consumer laptop for AI,’ shares decline 3% as investors (for a total of minus 10% on the year) are correctly unimpressed by the details, this is lame stuff. They have an ‘AI strategy’ launch planned for June.

Apple Vision Pro ‘gets its first AI soul.’ Kevin Fischer is impressed. I am not, and continue to wonder what it taking everyone so long. Everyone is constantly getting surprised by how fast AI things happen, if you are not wondering why at least some of the things are ‘so slow’ you are not properly calibrated.

Academics already paid for by the public plead for more money for AI compute and data sets, and presumably higher salaries, so they can ‘compete with Silicon Valley,’ complaining of a ‘lopsided power dynamic’ where the commercial labs make the breakthroughs. I fail to see the problem?

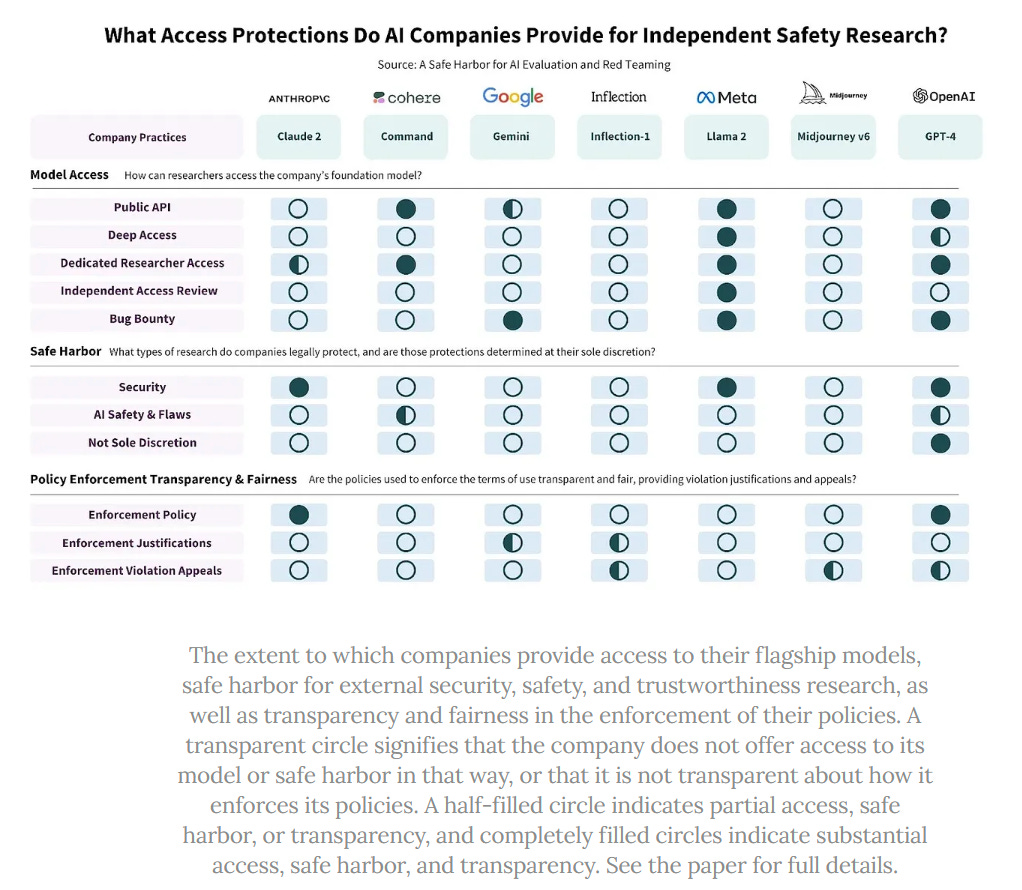

Another open letter, this one is about encouraging AI companies to provide legal and technical protections for good-faith research on their AI models. This seems right to me, if you nail down a good definition of good faith research. It seems OpenAI is doing well here. Meta gives full ‘access’ but that is because open model weights.

Certainly everyone should be allowed to play around with your model to see what might be wrong with it, without risking being banned for that. The issue is that what one might call ‘red teaming’ is sometimes actually either ‘do bad thing and then claim red teaming if caught’ or ‘look for thing that is designed to embarass you, or to help me sue you.’ It is easy to see why companies do not love that.

ByteDance has completed a training run on 12k GPUs (paper). Jack Clark points out that, even though the model does not seem to be impressive, the fact that they got hold of all those GPUs means our export controls are not working. Of course, the model not impressing could also be a hint that the export controls are potentially working as designed, that a sheer number of chips from Nvidia doesn’t do it if the best stuff is unavailable.

Sam Altman watch: He invests $180 million into Retro Bio to fight aging. I have no idea if they can execute, but this kind of investment is great and bodes many good things. Kudos.

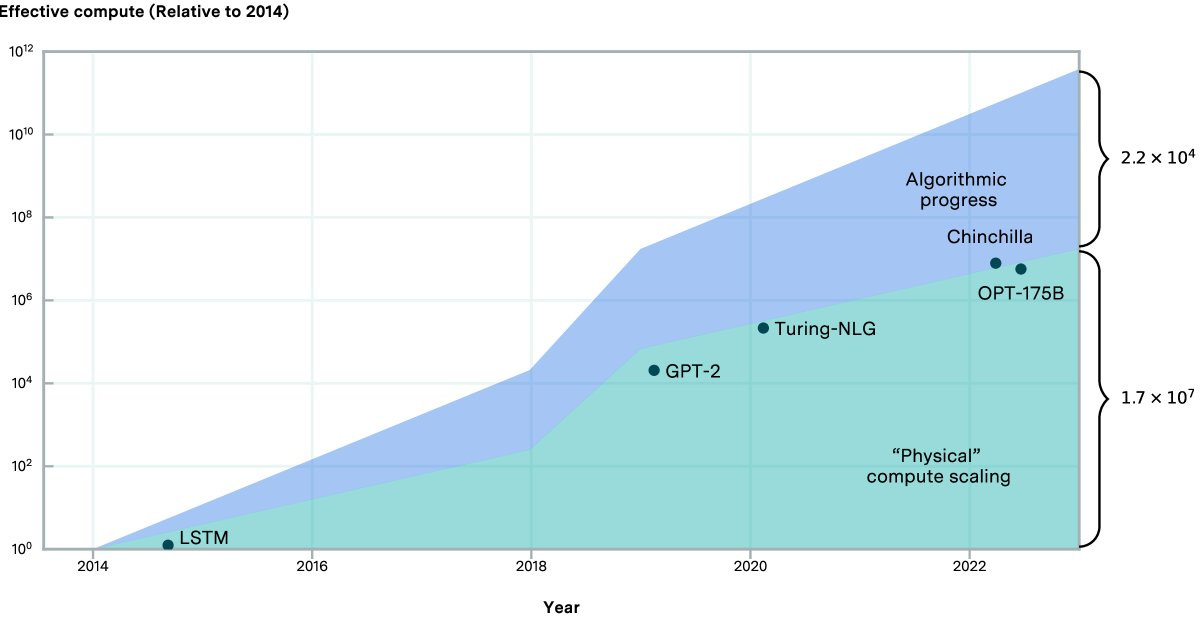

Paper looks at how much of progress has been algorithmic.

Lennart Heim: Rigorous analysis by @ansonwhho, @tamaybes, and others on algorithmic efficiency improvements in language models. Kudos! Check out the plots—they're worth more than a thousand words.

Quiet Speculations

Scaling laws are coming for robotics. Jack Clark is optimistic that this will pay dividends, based on the results of the paper Humanoid Locomotion as Next Token Prediction, enabling a robot to navigate various surfaces. He predicts robots are going to ‘get very good very quickly.’

Francois Chollet points out that effective human visual bandwidth is vastly lower than its technical bandwidth. You have 20MB/s in theory but your brain will throw almost all of that out, and *bytes per second* is closer to what you can handle. I think that’s too low, the right answer is more like dozens of bytes per second, but it’s definitely not thousands.

Will AI bring back haggling by reducing the transaction costs of doing it? Suddenly it could make sense to have your AI haggle with my AI, instead of sticking to price standardization? I am mostly with Timothy Lee here that no one wants this. Haggling cultures seem awful, stressful and taxing throughout versus fixed prices. Indeed, there are strong reasons to think that the ability to haggle cheaply forces the response of ‘well we will not allow that under any circumstances’ then, or else your margins get destroyed and you are forced to present fake artificially high initial prices and such. The game theory says that there is often a lot of value in committing to not negotiating. But also there will be cases where it is right to switch, for non-standardized transactions.

One interesting aspect of this is negotiating regarding promotions. A decent number of people, myself included, have the ability to talk to large enough audiences via various platforms that their good word is highly valuable. Transaction costs often hold back the ability to capture some of that value.

A better way of looking at this is that this enables transactions that would have otherwise not taken place due to too-high transaction costs, including the social transaction costs.

To take a classic example, suppose I say to my AIs ‘contact the other passenger’s AIs and see if anyone wants to buy a window seat for this flight off of me for at least $50.’

Yuval Noah Harai says no one will have any idea what the world will look like in twenty years, and no one knows what to teach our kids what will still be relevant in twenty years. Great delivery.

I think this is technically true, since we don’t even know that there will be a human world in twenty years and if there is it is likely transformed (for better and worse), but his claim is overstated in practice. Things like mathematics and literacy and critical thinking are useful in the worlds in which anything is useful.

So some, but far from all, of this attitude:

Timothy Bates: This is one of the most damaging and woefully wrong academic claims: just ask yourself: does your math still work 20 years on? Is your reading skill still relevant? Is the Yangtze River still the largest in China? Did Carthage fall? Does Invictus, or Shakespeare still inform your life accurately? Does technical drawing still work? Did your shop or home ec skills expire? Do press-ups no longer build strength? Did America still have a revolution in 1776 and France in 1789? It’s simply insanely harmful to teach this idea of expiring knowledge.

The people who teach it merely want your kids to be weaker competitors with their kids, to whom they will teach all these things and more.

I mean yes, all those facts are still true, and will still be true since they are about the past, but will they be useful? That is what matters. I am confident in math and reading, or we have much bigger problems. The rest I am much less convinced, to varying degrees, but bottom line is we do not know.

The real point Bates makes is that if you learn a variety of things then that is likely to be very good for you in the long term. Of all the things in the world, you are bound to want to know some of them. But that is different from trying to specify which ones.

Here’s a different weirder reaction we got:

Philip Tetlock: Counter-prediction to Yuval’s: There will be educational value 20 years from now – perhaps 200 – in studying prominent pundits' predictions and understanding why so many took them so seriously despite dismal accuracy track records. A fun pastime even for our AI overlords.

This does not seem like one of the things likely to stand the test of time. I do not expect, in 20 years, to look back and think ‘good thing I studied pundits having undeserved credibility.’

I also wouldn’t get ahead of ourselves, but others disagree:

Bojan Tunguz: I strongly disagree that we don’t know what skills will be relevant in 20 years.

We actually don’t know what skills will be relevant in 20 months.

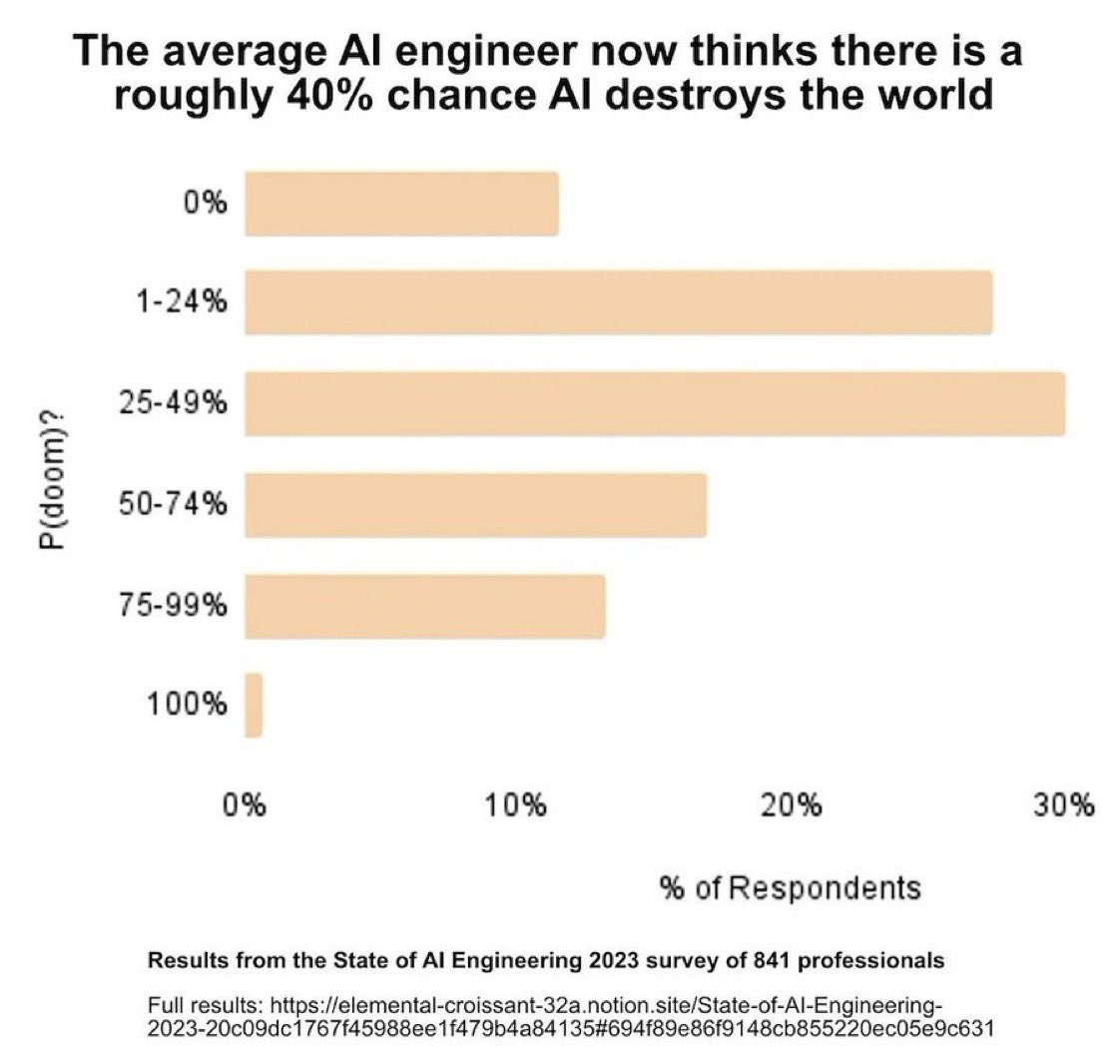

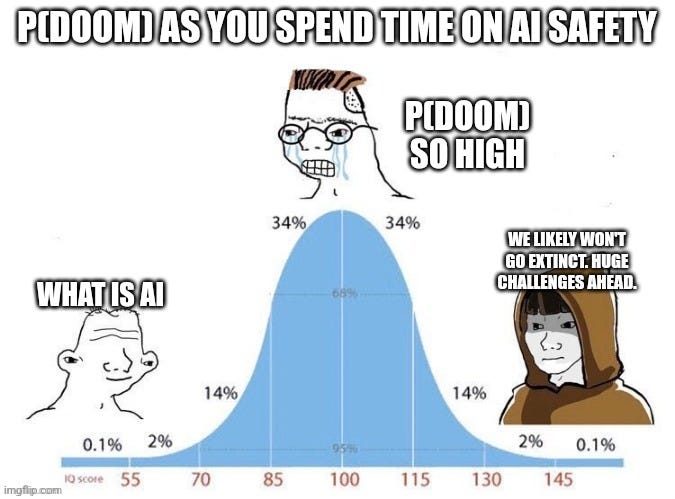

Twitter put in my notifications for some reason this post, with two views, in Swahili, about p(doom). I don’t remember seeing this chart before though? Usually the numbers come in plenty scary but lower.

Ben Thompson talks Sora, Groq and virtual reality. The thinking is that if you can do a Sora-style thing at Groq-style speeds, suddenly virtual reality looks a lot more like reality, and it is good enough at real time rendering that its moment arrives. This is certainly possible, everything will get faster and cheaper and better with time. It still seems like current tech could do a lot, yet the Apple Vision Pro is not doing any of it, nor are its rivals.

Paul Graham: Here's a strange thought: AI could make people more vindictive. After a few years I tend to forget bad things people have done to me. If everyone had an AI assistant, it would always remember for them.

Jessica Livingston: I often have to remind you about something awful someone has done to you or said about you. But now that my memory is fading, if could be useful to offload this responsibility.

Paul Graham: I was going to mention that I currently depend on you for this but I thought I'd better not...

Howard Lermon (responding to OP): The reverse also applies.

Paul Graham: Yes, that's true! I'm better at remembering people who've done nice things for me, but still far from perfect.

If you want to use the good memory offered by AI to be a vindictive (jerk), then that will be something you can do. You can also use it to remember the good things, or to remind you that being a vindictive jerk is usually unhelpful, or to help understand their point of view or that they have changed and what not. It is up to us.

Also, you know those ads where someone in real life throws a challenge flag and they see the replay? A lot of vindictiveness comes because someone twists a memory in their heads, or stores it as ‘I hate that person’ without details. If the AI can tell you what this was all about, that they failed to show up to your Christmas party or whatever it was, then maybe that makes it a lot easier to say bygones.

Andrew Ng predicts continuous progress.

Andrew Ng: When we get to AGI, it will have come slowly, not overnight.

A NeurIPS Outstanding Paper award recipient, Are Emergent Abilities of Large Language Models a Mirage? (by @RylanSchaeffer, @BrandoHablando, @sanmikoyejo) studies emergent properties of LLMs, and concludes: "... emergent abilities appear due the researcher’s choice of metric rather than due to fundamental changes in model behavior with scale. Specifically, nonlinear or discontinuous metrics produce apparent emergent abilities, whereas linear or continuous metrics produce smooth, continuous, predictable changes in model performance."

Public perception goes through discontinuities when lots of people suddenly become aware of a technology -- maybe one that's been developing for a long time -- leading to a surprise. But growth in AI capabilities is more continuous than one might think.

That's why I expect the path to AGI to be one involving numerous steps forward, leading to step-by-step improvements in how intelligent our systems are.

Andrew Critch: A positive vision of smooth AGI development from @AndrewYNg, that IMHO is worth not only hoping for, but striving for. As we near AGI, we — humans collectively, and AI devs collectively — should *insist* on metrics that keep us smoothly apprised of emerging capabilities.

I agree with Critch here, that we want development to be as continuous as possible, with as much visibility into it as possible, and that this will improve our chances of good outcomes on every level.

I do not agree with Ng. Obviously abilities are more continuous than they look when you only see the final commercial releases, and much more continuous than they look if you only sometimes see the releases.

I still do not expect it to be all that continuous in practice. Many things will advance our capabilities. Only some of them will be ‘do the same thing with more scale,’ especially once the AIs start contributing more meaningfully and directly to the development cycles. And even if there are step-by-step improvements, those steps could be lightning fast from our outside perspective as humans. Nor do I think that the continuous metrics are good descriptions of practical capability, and also the ways AIs are used and what scaffolding is built can happen all at once (including due to AIs, in the future) in unexpected ways, and so on.

But I do agree that we have some control over how continuous things appear, and our ability to react while that is happening, and that we should prioritize maximizing that.

Dario here says even with only scaling laws he sees no limits and amazing improvements. I am not as confident in that, but also I do not expect a lack of other improvements.

Will an AI-malfunction-caused catastrophic event as defined by Anthropic, 1000+ deaths or $200 billion or more in damages, happen by 2032? Metaculus says 10%. That seems low, but also one must be cautious about the definition of malfunction.

Resolution Criteria: To count as precipitated by AI malfunction an incident should involve an AI system behaving unexpectedly. An example could be if an AI system autonomously driving cars caused hundreds of deaths which would have been easily avoidable for human drivers, or if an AI system overseeing a hospital system took actions to cause patient deaths as a result of misinterpreting a goal to minimise bed usage.

As in: When something goes wrong, and on this scale it is ‘when’ not ‘if,’ will it be…

Truly unexpected?

‘Unexpected’ but in hindsight not all that surprising?

The humans used AI to cause the incident very much on purpose.

The humans used AI not caring about whether they caused the incident.

That tiger went tiger. You really don’t know what you were expecting.

The Law of Earlier Failure says that when it happens, the first incidents of roughly this size, caused by AI in the broad sense, will not count for this question. People will say ‘oh we could have prevented this,’ after not preventing it. People will say ‘oh of course the AI would then do that’ after everyone involved went ahead and had the AI do that. And then they will continue acting the way they did before.

Metaculus also says only 4% that a lab will pause scaling for any amount of time pre-2026 for safety reasons, whereas GPT-5 should probably arrive by then and ARC is 23% to find that GPT-5 has ‘autonomous replication capabilities.’

Simeon: A reminder that RSPs/PF have no teeth.

A bold claim that the compute bottleneck will soon be at an end. I am the skeptic.

Andrew Curran: Dell let slip during their earnings call that the Nvidia B100 Blackwell will have a 1000W draw, that's a 40% increase over the H100. The current compute bottleneck will start to disappear by the end of this year and be gone by the end of 2025. After that, it's all about power.

It will be impossible for AI companies to fulfill their carbon commitments and satisfy their AI power needs without reactors. So, by 2026, we will be in the middle of a huge argument about nuclear power. U.S. SMR regulations currently look like this:

(Quotes himself from December): The reason they are doing this is getting a small modular reactor design successfully approved by the NRC currently takes about a half a billion dollars, a 12,000 page application, and two million pages of support materials.

Andrew Curran: Five nations where nuclear construction is directly managed by the state, or the state has a majority interest; France, South Korea, the UAE, China, and Russia. During this period, some of these countries will probably build as many reactors as they can, as quickly as they can.

As long as this window remains open, it will present an opportunity for those who started late to catch up with those who had a compute head start.

Partial confirmation we are hitting 1000W in Blackwell, but maybe not till the B200. Someone was asking about the cooling in the thread.

So his claim is that there will then be enough chips to go around, because there won’t be enough power available to run all the chips that are produced, so that becomes the bottleneck within two years.

I am not buying this. I can buy that power demand will rise and prices as well, but that is not going to stop people wanting every (maximally efficient) GPU they can get their hands on. Nor is there going to be ‘enough’ compute no matter how much is produced, everyone will only try to scale even more. We could get into a world where power becomes another limiting factor, but if so that will mean that the older less efficient effective compute per watt chips become worthless at scale (although presumably still excellent for gamers and individuals) and everyone is still scrambling for the good stuff.

Elon Musk shows clip of Ray Kurzweil, says AGI likely to be smarter than any single human next year and all humans combined by 2029.

Gary Marcus offers a 100k bet, Damion Hankejh matches, Carsten Dierks piles in, so we’re up to 300k there, I offered to make it 400k, plus whatever Robin Hanson is in for.

Note, of course, if an AI is smarter than any single human next year, we will not have to wait until 2029 for the rest to happen.

(And also of course if it does happen I won’t care if I somehow lost that 100k, it will be the least of my concerns, but I would be happily betting without that consideration.)

The Quest for Sane Regulations

EU AI Act finally passes, 523-46 (the real obstacle is country vetoes, not the vote), there is an announcement speech at the link. I continue to hope to find the time to tell you exactly what is in the bill. I have however seen enough that when I see the announcement speech say ‘we have forever attached to the concept of AI, the fundamental values that form the basis of our societies’ I despair for the societies and institutions that would want to make that claim on the basis of this bill with a straight face.

He goes on ‘with that alone, the AI Act has nudged the future of AI in a human-centric direction, in a direction where humans are in control of the technology.’ It is great to see the problem raised, unfortunately I have partially read the bill.

He then says ‘much work lies ahead that goes beyond the AI Act’ which one can file under ‘true things that I worry you felt the need to say out loud.’ To show where his head is at, he says ‘AI will push us to rethink the social contract resting at the heart of our democracies, along with our educational models, our labor markets, the way we conduct warfare.’

Around 1:25 he gets to international cooperation, saying ‘the EU now has an AI office to govern the most powerful AI models. The UK and US have [done similarly], it is imperative that we connect these initiatives into a network.’

He explicitly says the EU needs to not only make but export its rules, to use their clout to promote the ‘EU model of AI governance.’ In general the EU seems to think it is the future and it can tell people what to do in ways that it should know are wrong.

At 2:15 he finally gets to the big warning, that we aint seen nothing yet, AGI is coming and we need to get ready. He says it will raise ‘ethical, moral and yes existential questions.’ He concludes saying this legislation makes him feel more comfortable about the future of his children.