AiPhone

Apple was for a while rumored to be planning launch for iPhone of AI assisted emails, texts, summaries and so on including via Siri, to be announced at WWDC 24.

It’s happening. Apple’s keynote announced the anticipated partnership with OpenAI.

The bottom line is that this is Siri as the AI assistant with full access to everything on your phone, with relatively strong privacy protections. Mostly it is done on device, the rest via ‘private cloud compute.’ The catch is that when they need the best they call out for OpenAI, but they do check with you first explicitly each time, OpenAI promises not to retain data and they hide your queries, unless you choose to link up your account.

If the new AI is good enough and safe enough then this is pretty great. If Google doesn’t get its act together reasonably soon to deliver on its I/O day promises, and Apple does deliver, this will become a major differentiator.

AiPhone

They call it Apple Intelligence, after first calling it Personal Intelligence.

The pitch: Powerful, intuitive, integrated, personal, private, for iPhone, iPad and Mac.

The closing pitch: AI for the rest of us.

It will get data and act across apps. It will understand personal context. It is fully multimodal. The focus is making everything seamless, simple, easy.

They give you examples:

Your iPhone can prioritize your notifications to prevent distractions, so you don’t miss something important. Does that mean you will be able to teach it what counts as important? How will you do that and how reliable will that be? Or will you be asked to trust the AI? The good version here seems great, the bad version would only create paranoia of missing out.

Their second example is a writing aid, for summaries or reviews or to help you write. Pretty standard. Question is how much it will benefit from context and how good it is. I essentially never use AI writing tools aside from the short reply generators, because it is faster for me to write than to figure out how to get the AI to write. But even for me, if the interface is talk to your phone to have it properly format and compose an email, the quality bar goes way down.

Images to make interactions more fun. Create images of your contacts, the AI will know what they look like. Wait, what? The examples have to be sketches, animations or cartoons, so presumably they think they are safe from true deepfakes unless someone uses an outside app. Those styles might be all you get? The process does seem quick and easy to generate images in general and adjust to get it to do what you want, which is nice. Resolution and quality seems fine for texting, might be pretty lousy if you want better. Image wand, which can work off an existing image, might be more promising, but resolution still seems low.

The big game. Take actions across apps. Can access your photos, your emails, your podcasts, presumably your everything. Analyze the data across all your apps. Their example is using maps plus information from multiple places to see if you can make it from one thing to the next in time.

Privacy

Then at 1:11:40 they ask the big question. What about privacy? They say this all has ‘powerful privacy.’ The core idea is on-device processing. They claim this is ‘only possible due to years of planning and investing in advanced silicon for on device intelligence.’ The A17 and M1-4 can provide the compute for the language and diffusion models, which they specialized for this. An on-device semantic index assists with this.

What about when you need more compute than that? Servers can misuse your data, they warn, and you wouldn’t know. So they propose Private Cloud Compute. It runs on servers using Apple Silicon, use Swift for security (ha!) and are secure. If necessary, only the necessary data goes to the cloud, exclusively for the request, and it is never stored.

They claim that this promise of privacy can be verified from the outside, similar to how it can be done on the iPhone.

Matthew Green has a thread analyzing Apple’s implementation. He does not love that they give you no opt-out and no notice. He does think Apple is using essentially every known trick in the book to make this secure. And this is miles ahead of the known alternatives, such as ‘let OpenAI see the data.’ No system is perfect, but this does not seem like the most likely point of failure.

Practical Magic

They then move on to practical cool things.

Siri is promised to be more natural, relevant and personal, as you would expect, and yes you will get Siri on Mac and iPad. It can interact with more apps and remember what you were doing. They are bragging that you can ‘type to Siri’ which is hilarious. Quick and quiet, what a feature. They promise more features over the year, including ‘on-screen awareness’ which is an odd thing not to already have given the rest of the pitch. Then Siri will gain its key ability, acting within and across apps.

They are doing this via App Intents, which is how apps hook their APIs up.

It is cool to say ‘do X’ or ‘what was Y’ and not have to worry about what apps are involved in doing X or knowing Y. Ideally, everything just works. You talk to the phone, that’s it. Simple. Practical.

The ‘nope, reroll that email again’ button is pretty funny. Changing the tone or approach or proofreading makes sense. Rewrites wiping out the original by default might be an error.

Smart reply looks great if it works - the AI looks at the email or form, figures out the questions that matter, asks you ideally via multiple choice when possible, then uses the answers. Again, simple and practical.

Email inbox that lists summaries rather than the first line and generating summaries? If good enough, that could be a game changer. But it has to be good enough. You have to trust it. Same with priority sorting.

Genmoji, you can create a new AI emoji. Oh no. The point of emojis is that they are pictograms, that there is a fixed pool and each takes on a unique meaning. If you generate new ones all the time, now all you are doing is sharing tiny pictures. Which is fine if you want that, but seriously, no, stop it.

Video and picture montages, and AI search in general, make it more rewarding to spam photos and videos all the time, as I’ve noted before.

Dance With the Devil

They don’t mention ChatGPT or OpenAI until seven minutes before the end. ChatGPT is one more app you can use, if you want that, but Siri asks before using it each time, due to the privacy (and accuracy) issues. ChatGPT will be available later this year, often useful, but it is not at the core of these offerings, and it is not obvious you couldn’t also use Claude or Gemini the same way soon if you wanted. You can also call in Dall-E, which seems likely to be worth doing. You can link in your paid account, or do it without any links or records.

Essentially ChatGPT will be there in the wings, if the phone or any app ever needs it. OpenAI claims requests are not stored and IP addresses are obscured, unless of course you choose to link your account.

Does It Work?

The biggest question is always the obvious one.

Will the damn thing work?

What do we know about the model? Here is Apple’s announcement. The on-device model is size ~3B, the server-side one is larger. Both are fine tuned for everyday uses. They were trained using AXLearn. They use standard fine-tuning including rejection sampling and RLHF. They highlight use of grouped-query-attention and embedding tables. Latency on iPhone 15 Pro is reported at 0.6 milliseconds per prompt token, 30 tokens per second.

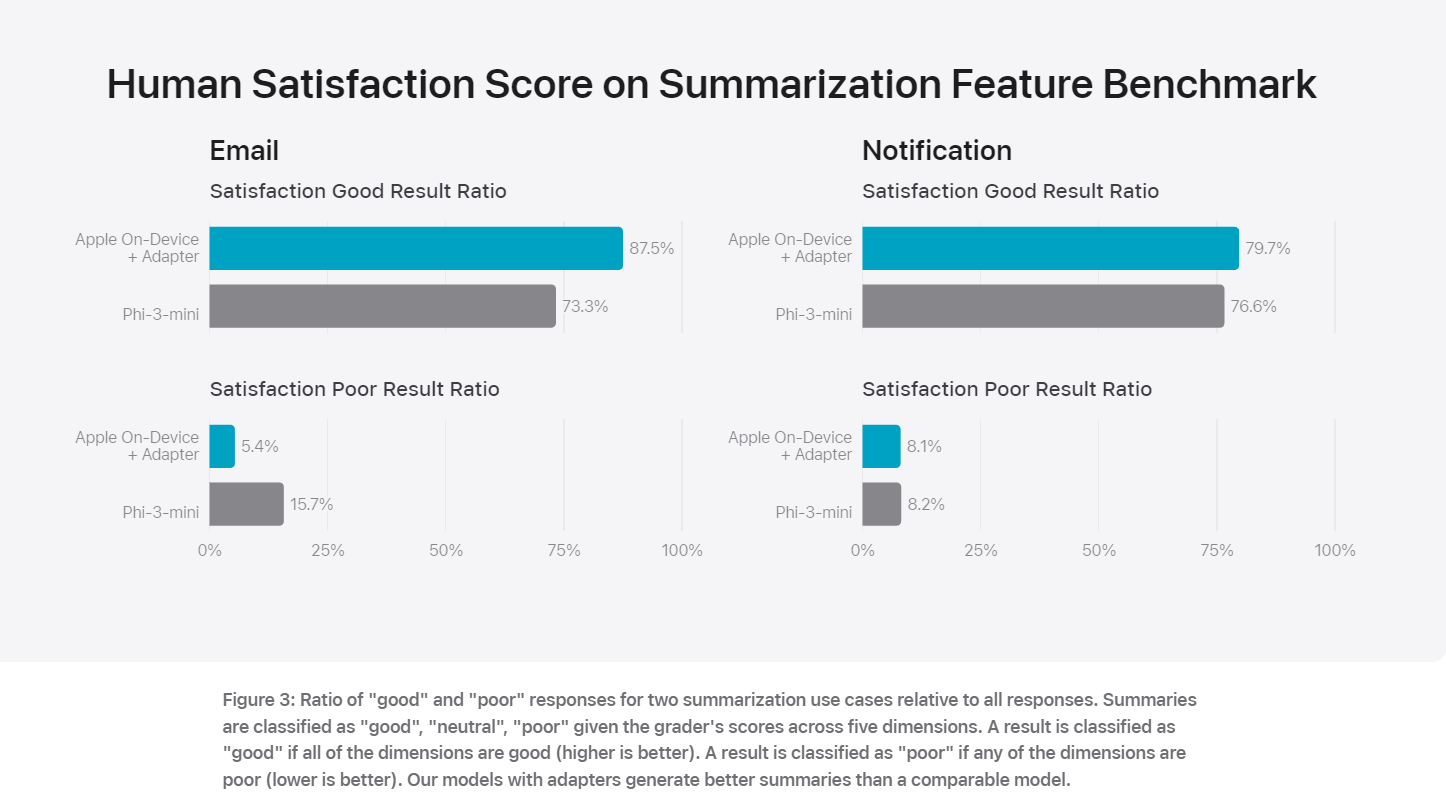

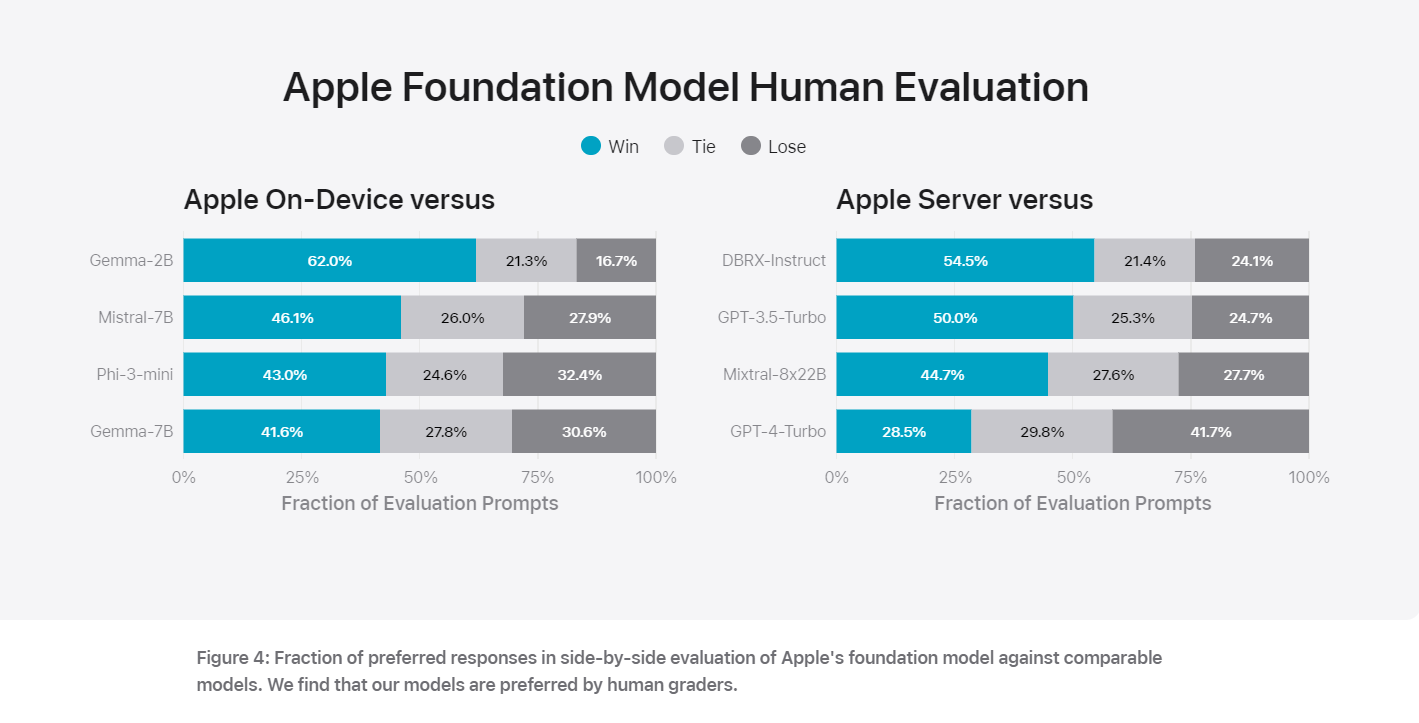

The evals for this one are different than we are used to here.

In the end, that is what they care about. How often is the result good? How often bad?

The models are reported very good at avoiding harmful content, winning against the same set of models.

The server-side model claims results on IFEval and Writing benchmarks similar to GPT-4-Turbo, while on-device is a little over GPT-3.5 level. Until we get our hands on the product, that’s what they’re representing. You get 3.5-level on-device with full integration, or 4-level in the ‘private cloud.’

No set of demos tells you if it works. No set of evals tells you if it works. No promises mean all that much. It works when we get it in our hands and it works. Not before.

So many of these features have thresholds for utility. If the feature is not good enough, it is worthless, or actively gets in the way. If this is underwhelming, you end up with something still miles better than old Siri, with particular things you learn it can do reasonably, but which you mostly do not trust, and you turn a lot of the ‘features’ off until it improves.

You do not want your notifications or emails prioritized badly, even more than you do not want a toxic ‘for you’ feed. If the system is not very good at least at avoiding false negatives and ensuring you don’t miss something vital, you are better off handling such tasks yourself. We also are not going to see a launch will all the promised features. They will roll in over time.

Do You Dare?

Right after ‘does it work?’ is ‘is it safe to use it?’

Do you want an on-device AI assistant that is using context that can be prompt injected by anyone sending you an email or text, that can act in any of your apps?

People are talking about whether they ‘trust Apple’ with their data (and they know they don’t and shouldn’t trust OpenAI). That is not a wrong question, but it is the wrong first safety question. The right first safety question is whether you can trust Apple’s AI not to get compromised or accidentally screw with you and your data, or what an attacker could do with it if they stole your phone or SIM swapped you.

Apple’s keynote did not mention these issues at all, even to reassure us. I am deeply worried they have given the questions little thought.

I do not want to be that guy, but where is the red team report? They say the red teaming is ‘ongoing’ and that seems to be it.

What I do know is that it is pulling context in from content you did not create, into all its queries, with the power to take actions across the system. I worry.

Who Pays Who?

Tyler Cowen plays the good economist, asks if Apple is paying OpenAI or is it vice versa? Ben Thompson speculates OpenAI won exclusive entry by not charging Apple, and OpenAI gets mindshare. Tyler’s guess is Apple pays OpenAI, at least in the longer run, because Apple can extract money via phones and the store, and pass some of that along without subscription fatigue. As Tyler Cowen says optimal contract theory has payments in both directions, but I assume that theory fails here. My guess is that for now no one pays anyone, or the payment is similar to at cost for the compute.

EDIT: My guess is confirmed by Bloomberg. Apple is not paying OpenAI.

In the longer run, I presume Tyler’s logic holds and Apple pays OpenAI, but also I would be very surprised if OpenAI got to be exclusive. At best OpenAI will be a default, the same way Google search is a default. That’s still pretty good.

I notice Anthropic was potentially hurt a lot here by lacking an image model. It would make sense for them to get one as part of their commercial package, even if it does not otherwise help the mission. And my guess is Apple went with OpenAI because they saw it as a better product than Gemini, but also in part because they see Google as the enemy here. If Google insisted on payment and Apple insisted on not paying (or on payment) and that led to no deal, both are fools.

Ben Thompson looks at all this in terms of business models. He approves. Apple gets to incorporate OpenAI without having to invest in frontier models, although we now know Apple wisely seems to be rolling its own in the places that matter most.

AiPhone Fans

What did people think?

Andrej Karpathy: Actually, really liked the Apple Intelligence announcement. It must be a very exciting time at Apple as they layer AI on top of the entire OS. A few of the major themes.

Step 1 Multimodal I/O. Enable text/audio/image/video capability, both read and write. These are the native human APIs, so to speak.

Step 2 Agentic. Allow all parts of the OS and apps to inter-operate via "function calling"; kernel process LLM that can schedule and coordinate work across them given user queries.

Step 3 Frictionless. Fully integrate these features in a highly frictionless, fast, "always on", and contextual way. No going around copy pasting information, prompt engineering, or etc. Adapt the UI accordingly.

Step 4 Initiative. Don't perform a task given a prompt, anticipate the prompt, suggest, initiate.

Step 5 Delegation hierarchy. Move as much intelligence as you can on device (Apple Silicon very helpful and well-suited), but allow optional dispatch of work to cloud.

Step 6 Modularity. Allow the OS to access and support an entire and growing ecosystem of LLMs (e.g. ChatGPT announcement).

Step 7 Privacy. <3

We're quickly heading into a world where you can open up your phone and just say stuff. It talks back and it knows you. And it just works. Super exciting and as a user, quite looking forward to it.

I agree with Derek Thompson, operability between apps is the killer app here.

Except, of course, no, totally do not actually send this particular query, and are you seriously giving the AI your credit card this generally?

Derek Thompson: AI operability between apps will be huge. What you want is: “Siri, buy me the cheapest flight from DCA to LAX on July 1 that leaves after 10am on Kayak, use my Chase card, save as Gcal event, forward email confirmation to my wife and sister, and Slack my boss my OOO…”

It is a great example, but you should absolutely pause to confirm the flight details before booking, or you will be in for a nasty surprise.

Important safety tip: Never, ever say ‘cheapest flight’ blind. Seriously. Oh no.

Also, what kind of non-lazy non-programmer are you? Obviously the actual command is ‘find a work flight from DCA to LAX on July 1 after 10am as per usual instructions,’ then ‘confirmed, buy it and do standard work flight procedure.’

Sully Omarr: You hear that? "Siri can take actions on your phone." This is the LAM you want. Holy shit.

Sully Omarr: The apple event was great.

They focused on the important things that consumers care about (privacy, easy to use etc)

If anyone else had launched an on device, OS level LLM this place would of lost their mind

Were the emojis cringe? Sure. Everything else was exciting.

BWoolf: Do consumers really care about privacy, though? I know if you poll people, they say they do built if it's privacy or convenience, they choose convenience 90 times out of a 100.

Sully Omarr: Usually they don’t but it’s a good selling feature for Apple.

OS level AI actions, personal context, rag on your entire phone, all on device or on private cloud... basically your own personal assistant.... totally private & secure really good imo

Do we want it? I mean, we do, unless we very much don’t. Your call.

I think if Google had done it we wouldn’t be losing our minds, and we know this because Google essentially did do it at I/O day if you were paying attention, but no one was and we did not lose our minds. Apple’s implementation looks better in important ways, but Google’s is also coming, and many of Apple’s features will come later.

On privacy, I think AI changes the equation. Here I expect people absolutely will care about privacy. And they should care. I think the logical play is to decide which companies you do and don’t trust and act accordingly. My essential decision is that I trust Google. It’s not that I don’t trust Apple at a similar level, it’s that there has been no reason strong enough to risk a second point of failure.

Aaron Levine sees this as heralding a future of multiple AI agents working together. That future does seem likely, but this seems more like one agent doing multiple tasks?

JD Ross: Apple using Apple Silicon in its own data centers (not nVidia chips) to power Apple Intelligence

Very interesting, and getting little attention so far.

Designed by Apple using custom ARM instruction set licensed, like their A and M series chips. But yes made by TSMC (and possibly Samsung)

If nothing else, as I write this Nvidia hit an all time high, so presumably it’s priced in.

Marcques Brownlee I would describe as cautiously optimistic, but he is clearly reserving judgment until he sees it in action. That’s how he does and should roll.

No AiPhone

Others are not as fond of the idea.

The most obvious concern is that this on-device AI will be doing things like reading emails before you open them, and they get used for context in various ways.

This might not be entirely safe?

Rowan Cheung: Apple Intelligence feature in Mail understands the content of emails and surfaces the most urgent messages to the top of inboxes.

The most critic vocal is, as one might predict, Elon Musk.

Musk does not sweat the details before posting memes. Also statements.

Elon Musk: If Apple integrates OpenAI at the OS level, then Apple devices will be banned at my companies. That is an unacceptable security violation.

Eliezer Yudkowsky: Two important principles of computer security:

Any software that is possible to persuade, cajole, or gaslight should not be built into the operating system.

Don't send all your data to Sam Altman.

Doge Designer (QTed by Musk, 49m views): Remember when Scarlett Johansson told OpenAI not to use her voice, but they cloned it and used it anyway?

Now imagine what they can do with your phone data, even if you don't allow them to use it.

Elon Musk: Exactly!

None of that matches what the keynote said.

OpenAI is not integrated at the OS level, that is an Apple ~3B model that is run on device. Not even Apple will ever get access to your data, even when the 4-level model in your ‘private cloud’ is used. If you access ChatGPT, the query won’t be stored, they will never know who it was from, and you will be asked for explicit consent every time before the query is sent off, unless you choose to link up your account.

Yes, these companies could be lying. But the franchise value of Apple’s goodwill here in enormous, and they claim to be offering proof of privacy. I believe Apple.

The danger could be that there is a prompt where tons of your data becomes context and it gets sent over, and then OpenAI hits the defect button or is compromised. Certainly the ‘do you want to use ChatGPT to do that?’ button does not tell you how much context is or is not being shared. So there could be large potential to shoot yourself in the foot, or there might not be.

Does Elon Musk owe Apple an apology, as Marcus suggests? No, because part of Being Elon Musk is that you never owe anyone an apology for anything.

Mike Benz: Give me a Grok phone with Grok integrated

Elon Musk: If Apple actually integrates woke nanny AI spyware into their OS, we might need to do that!

It is bizarre the extent to which this ‘woke AI’ thing has taken hold of this man’s psyche. It seems fine to worry about privacy and data security, but integrating Grok means you are reintroducing all those problems. Also Grok is not (yet at least) good.

Ed-Newton-Rex complained that Apple’s ‘Responsible AI Development’ policy did not mention training on people’s work without permission. They say they train on publicly available knowledge, and they provide an opt out assuming they honor it.

Gary Marcus notes some good moves in privacy, internal search, paraphrased queries and limiting images to fixed styles. But he offers his traditional warnings of dumb failures and hallucinations and failed progress. He notes a lot of the GPT-4o demo tools were on display.

Dean Ball reminds us of an important principle. It is true for every subject.

Dean Ball: One of my lessons from the commentary on Apple Intelligence: many people, including AI influencers with large followings, do not read or watch source material before they comment on it. Perhaps I was naive (definitely), but this is an update for me.

In Other Apple News

What else was fun in the Apple keynote for that first hour of other stuff?

The AppleTV+ lineup looks solid.

Getting character and actor and song info on AppleTV+ is nice. I’ve appreciated this feature for Amazon Prime.

Scheduling texts seems highly useful and overdue.

Messages in iMessage using satellites seems great. Also hiking maps?

Apple Watch gets an easy translation button and better notifications.

Calculator for iPad (also iPhone). Including math notes, where you can draw all sorts of related equations, update in real time, very cool, not your usual.

Use your iPhone directly on your Mac without having your phone handy, including with your keyboard, including notifications. Drag and drop stuff in both directions.

Mac ‘presenter preview’ and automatic background replacements seem handy.

You can play Baldur’s Gate 3 on Mac now. Um, ok, great?

Two iPhones can pay each other cash without a phone number or email. Hopefully the process is secure.

You can customize your iPhone home screen icons a little now. Move them around where you want, how insane is it you couldn’t do that before. You can resize widgets. There is a control center you can customize. Put any icon on the lock screen. Apple is slightly less of a control freak.

Autocomplete on number dialing. How is this only happening now?

Shaking your head to accept or decline a phone call while wearing air pods. They also get sound isolation during phone calls.

You can lock applications with face ID. Nice.

Voice memos in notes.

Siqi Chen says Apple sherlocked 1Password. But he says storing passwords using your Apple ID seems highly questionable, because if you get SIM card swapped, which ordinary workers at phone companies can do to you, the attacker can change your login info and backup phone, and thus lock you out of your Apple ID permanently. At least for now Apple has no power to get it back even if it wants to once that happens - he escalated to the VP level and they are cryptographically locked out.

As I understand it, Google accounts do not have this issue. If you can convince Google that you are indeed you, you get your account back, as I verified when someone tried to pull some tricks on me recently, so I got a Google employee on standby just in case.

"The point of emojis is that they are pictograms, that there is a fixed pool and each takes on a unique meaning. If you generate new ones all the time, now all you are doing is sharing tiny pictures. Which is fine if you want that, but seriously, no, stop it." Spend some time in Twitch chat with all the engagement with emotes, BTTV, 7TV etc. and the plain, fixed set of Emojis becomes pretty dull. Don't get me wrong, the staples of Kappa, LUL, KEKW, POG have their role as those "fixed cultural touchpoints" but I've found the constantly expanding set is still a net positive experience.

" My essential decision is that I trust Google. It’s not that I don’t trust Apple at a similar level, it’s that there has been no reason strong enough to risk a second point of failure."

I disagree with the first sentence here. I think Google is better modeled currently as a large group of engineers with a broadly late-90s / early aughts Slashdot ethos (i.e., basically how computer scientists have always been) compatible with a desire for strong privacy guarantees and robust safeguards, managed by an increasingly profit-focused executive and upper management class who are going to constantly undermine attempts by engineering teams and sympathetic lower/ middle managers to implement them.

The basic problem is one of incentive gradients. While Google products often reflect reasonably good-faith attempts to be reasonably transparent about what they're doing (I would, for example, put them above median in terms of "responsible behavior towards users providing sensitive data"), they're going to be subject to constant enshittification pressure because ultimately Google's raison d'etre is to sell ads, and their basic business model is monetizing user behavior and user data (and user time and eyeballs) in order to do so. Privacy safeguards are ultimately directly opposed to Google's basic profit-making business model and as a result we should expect to be some combination of weak or weakening at all times.

Apple's goal is to sell expensive hardware, with a secondary revenue stream from its walled-garden ecosystem of software and services. Like Google, this is subject to observable enshittification failure modes -- most obviously, never including enough storage or RAM in base model hardware product offerings and then charging $400 for $5 worth of additional parts on a soldered board. Or dropping the headphone jack to sell more airPods. But importantly, these enshittification pressures are largely orthogonal to privacy preservation, so unlike Google, Apple's fairly robust privacy-respecting track record isn't directly opposed to its revenue streams.