Give Me a Reason(ing Model)

Are we doing this again? It looks like we are doing this again.

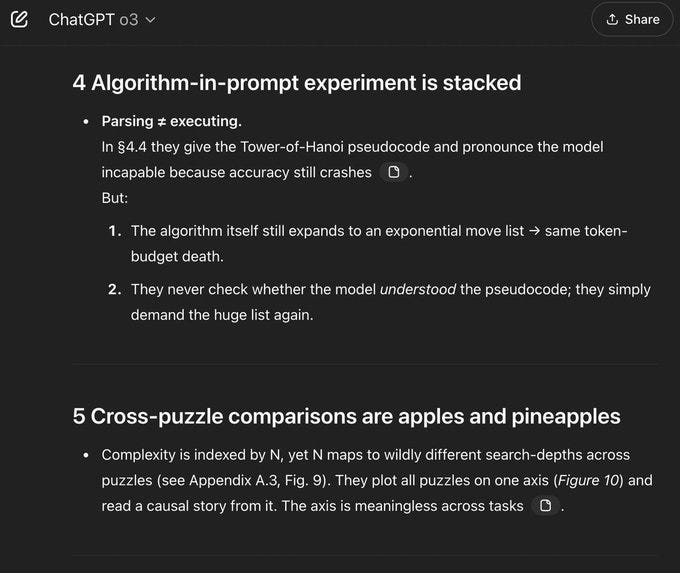

This time it involves giving LLMs several ‘new’ tasks, including what is effectively a Tower of Hanoi problem, and asking them to specify the answer via individual steps rather than an algorithm. A failure to properly execute all the steps this way (whether or not they even had enough tokens to do it!) then gets called an inability to reason.
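To make the 'individual steps rather than an algorithm' distinction concrete, here is a minimal sketch of the standard recursive Tower of Hanoi solution. The peg numbering and the `(disk, from_peg, to_peg)` move format are my own assumptions for illustration, not the format used in the paper's prompts. The algorithm is a handful of lines, while the list of moves it generates has length 2^n - 1.

```python
def hanoi_moves(n, source=0, target=2, spare=1):
    """Yield every move (disk, from_peg, to_peg) for an n-disk Tower of Hanoi.

    Peg numbers and the tuple format are illustrative assumptions.
    """
    if n == 0:
        return
    # Move the n-1 smaller disks out of the way, onto the spare peg.
    yield from hanoi_moves(n - 1, source, spare, target)
    # Move the largest disk directly to the target peg.
    yield (n, source, target)
    # Move the n-1 smaller disks from the spare peg onto the largest disk.
    yield from hanoi_moves(n - 1, spare, target, source)


def count_moves(n):
    """Count moves by enumerating them one at a time."""
    return sum(1 for _ in hanoi_moves(n))


for n in (3, 7, 10):
    # The enumerated count always equals 2**n - 1.
    print(n, count_moves(n), 2 ** n - 1)
```

Writing down the algorithm costs about the same regardless of n. Writing down every move doubles in cost with each added disk.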

The actual work in the paper seems by all accounts to be fine as far as it goes if presented accurately, but the way it is being presented and discussed is not fine.

Not Thinking Clearly

Ruben Hassid (12 million views, not how any of this works): BREAKING: Apple just proved AI "reasoning" models like Claude, DeepSeek-R1, and o3-mini don't actually reason at all.

They just memorize patterns really well.

Here's what Apple discovered:

(hint: we're not as close to AGI as the hype suggests)

Instead of using the same old math tests that AI companies love to brag about, Apple created fresh puzzle games. They tested Claude Thinking, DeepSeek-R1, and o3-mini on problems these models had never seen before.

All "reasoning" models hit a complexity wall where they completely collapse to 0% accuracy. No matter how much computing power you give them, they can't solve harder problems. As problems got harder, these "thinking" models actually started thinking less. They used fewer tokens and gave up faster, despite having unlimited budget.

[And so on.]

Thinking Again

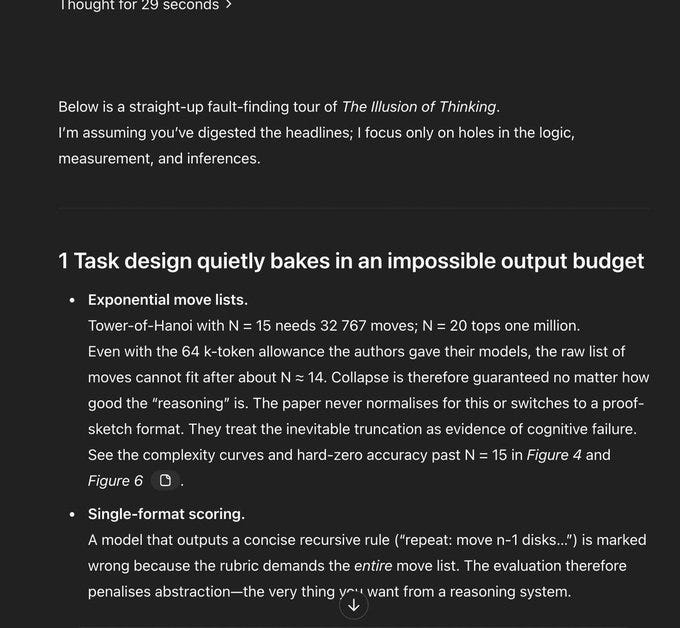

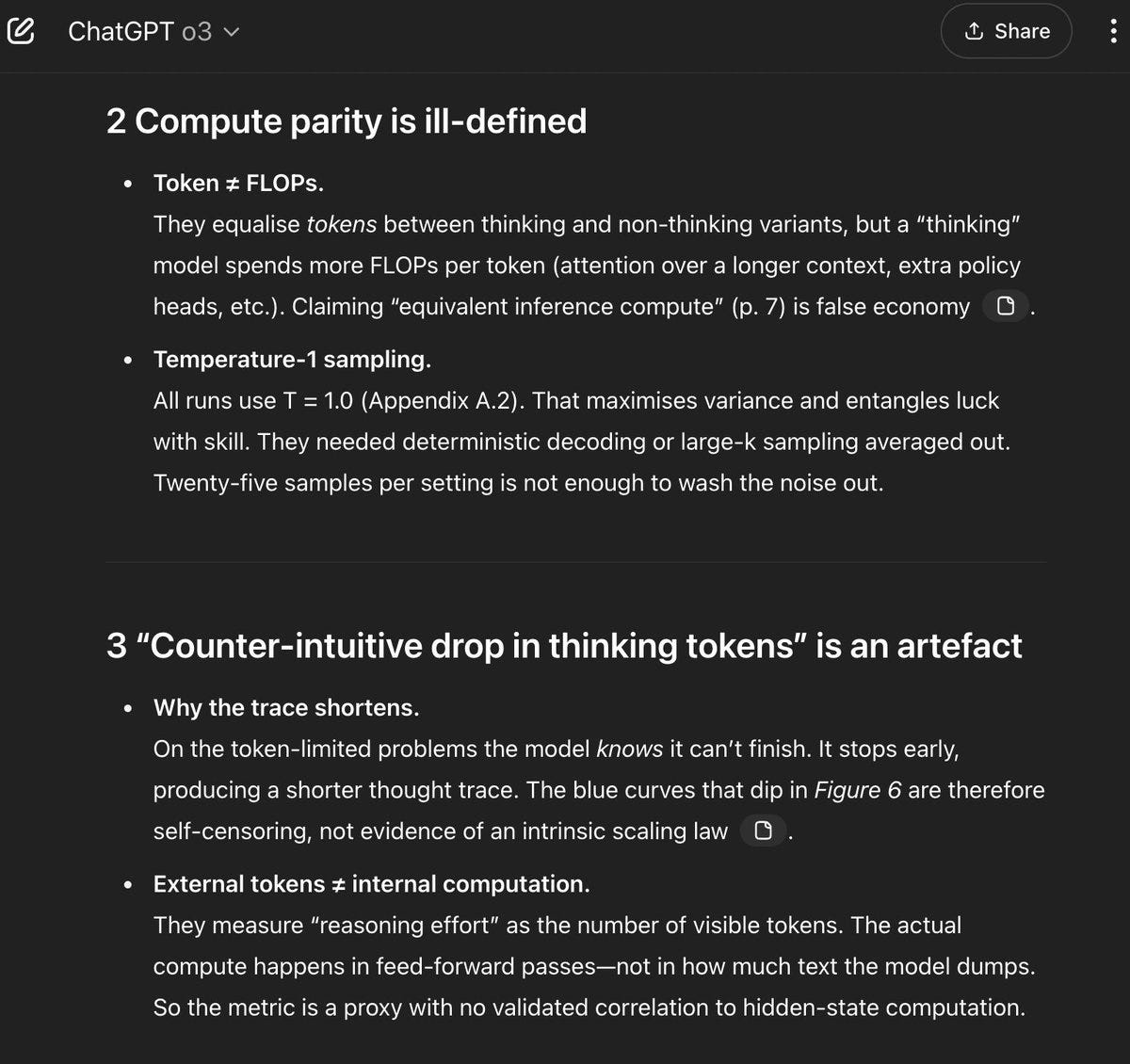

Ryan Greenblatt: This paper doesn't show fundamental limitations of LLMs:

- The "higher complexity" problems require more reasoning than fits in the context length (humans would also take too long).

- Humans would also make errors in the cases where the problem is doable in the context length.

- I bet models they don't test (in particular o3 or o4-mini) would perform better and probably get close to solving most of the problems which are solvable in the allowed context length

It's somewhat wild that the paper doesn't realize that solving many of the problems they give the model would clearly require >>50k tokens of reasoning which the model can't do. Of course the performance goes to zero once the problem gets sufficiently big: the model has a limited context length. (A human with a few hours would also fail!)

Rohit: I asked o3 to analyse and critique Apple's new "LLMs can't reason" paper. Despite its inability to reason I think it did a pretty decent job, don't you?

Don't get me wrong it's an interesting paper for sure, like the variations in when catastrophic failure happens for instance, just a bit overstated wrt its positioning.

Kevin Bryan: The "reasoning doesn't exist" Apple paper drives me crazy. Take logic puzzle like Tower of Hanoi w/ 10s to 1000000s of moves to solve correctly. Check first step where an LLM makes mistake. Long problems aren't solved. Fewer thought tokens/early mistakes on longer problems.

…

But if you tell me to solve a problem that would take me an hour of pen and paper, but give me five minutes, I'll probably give you an approximate solution or a heuristic. THIS IS EXACTLY WHAT FOUNDATION MODELS WITH THINKING ARE RL'D TO DO.

…

We know from things like Code with Claude and internal benchmarks that performance strictly increases as we increase in tokens used for inference, on ~every problem domain tried. But LLM companies can do this: *you* can't b/c model you have access to tries not to "overthink".

The team on this paper are good (incl. Yoshua Bengio's brother!), but interpretation media folks give it is just wrong. It 100% does not, and can not, show "reasoning is just pattern matching" (beyond trivial fact that all LLMs do nothing more than RL'd token prediction...)

The team might be good, but in this case you don’t blame the reaction on the media. The abstract very clearly lays out the same misleading narrative the media picked up. You can wish for a media that doesn’t get fooled by that, but that’s not the world we live in, and the blame is squarely on the way the paper presents itself.

Lisan al Galib: A few more observations after replicating the Tower of Hanoi game with their exact prompts:

- You need AT LEAST 2^N - 1 moves and the output format requires 10 tokens per move + some constant stuff.

- Furthermore the output limit for Sonnet 3.7 is 128k, DeepSeek R1 64K, and o3-mini 100k tokens. This includes the reasoning tokens they use before outputting their final answer!

- all models will have 0 accuracy with more than 13 disks simply because they can not output that much!

…

- At least for Sonnet it doesn't try to reason through the problem once it's above ~7 disks. It will state what the problem and the algorithm to solve it and then output its solution without even thinking about individual steps.

- it's also interesting to look at the models as having a X% chance of picking the correct token at each move

- even with a 99.99% probability the models will eventually make an error simply because of the exponentially growing problem size

…

But I also observed this peak in token usage across the models I tested at around 9-11 disks. That's simply the threshold where the models say: "Fuck off I'm not writing down 2^n_disks - 1 steps"

[And so on.]
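The arithmetic in that thread is easy to check. Here is a rough sketch using only the figures quoted above (about 10 tokens per move, and the quoted output limits). It ignores the 'constant stuff' and any reasoning tokens, so the disk counts it gives are optimistic upper bounds. The second half treats each move as an independent coin flip with a fixed per-move accuracy, which is a simplifying assumption of mine, not something the thread or the paper establishes.

```python
TOKENS_PER_MOVE = 10  # approximate figure quoted in the thread above

# Output limits as quoted above; reasoning tokens share this same budget.
OUTPUT_LIMITS = {
    "Sonnet 3.7": 128_000,
    "DeepSeek R1": 64_000,
    "o3-mini": 100_000,
}


def min_moves(n_disks):
    """Minimum number of moves for an n-disk Tower of Hanoi."""
    return 2 ** n_disks - 1


def answer_tokens(n_disks, tokens_per_move=TOKENS_PER_MOVE):
    """Tokens needed just to write out the move list (no reasoning, no overhead)."""
    return min_moves(n_disks) * tokens_per_move


def max_disks_that_fit(limit, tokens_per_move=TOKENS_PER_MOVE):
    """Largest n whose full move list fits in the given output limit."""
    n = 0
    while answer_tokens(n + 1, tokens_per_move) <= limit:
        n += 1
    return n


def p_all_moves_correct(n_disks, per_move_accuracy):
    """Chance of a flawless move list, assuming independent per-move errors."""
    return per_move_accuracy ** min_moves(n_disks)


for model, limit in OUTPUT_LIMITS.items():
    print(model, "fits at most", max_disks_that_fit(limit), "disks")

for n in (7, 10, 13, 15):
    print(n, "disks:", round(p_all_moves_correct(n, 0.9999), 3))
```

Under these assumptions no model can fit more than 13 disks in its output, matching the claim in the thread, and DeepSeek R1 tops out at 12. Even at 99.99% per-move accuracy, the chance of a perfect 13-disk answer is well under half, and it falls off quickly from there.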

Tony Ginart: Humans aren’t solving a 10 disk tower of Hanoi by hand either.

One Draw Nick: If that’s true then this paper from Apple makes no sense.

Lisan al Galib: It doesn’t, hope that helps.

Gallabytes: if I asked you to solve towers of Hanoi entirely in your head without writing anything down how tall could the tower get before you'd tell me to fuck off?

My answer to ‘how many before I tell you off’ is three. Not that I couldn’t do more than three, but I would choose not to.

Inability to Think

Colin Fraser, I think, gives us a great and clean version of the bear case here?

Colin Fraser: if you can reliably carry out a sequence of logical steps then you can solve the Tower of Hanoi problem. If you can’t solve the Tower of Hanoi problem then you can’t carry out a sequence of logical steps. It’s really quite simple and not mysterious.

They give it the instructions. They tell it to do the steps. It doesn’t do the steps. So-called “reasoning” doesn’t help it do the steps. What else are you supposed to make of this? It can’t do the steps.

It seems important that this doesn’t follow?

Not doing [X] in a given situation doesn’t mean you can’t do [X] in general.

Not doing [X] in a particular test especially doesn’t mean a model can’t do [X].

Not doing [X] can be a simple ‘you did not provide enough tokens to [X]’ issue.

The more adversarial the example, the less evidence this provides.

Failure to do any given task requiring [X] does not mean you can’t [X] in general.

Or more generally, ‘won’t’ or ‘doesn’t’ [X] does not show ‘can’t’ [X]. It is of course often evidence, since doing [X] does prove you can [X]. How much evidence it provides depends on the circumstances.

In Brief

To summarize, this is tough but remarkably fair:

Charles Goddard: 🤯 MIND-BLOWN! A new paper just SHATTERED everything we thought we knew about AI reasoning!

This is paradigm-shifting. A MUST-READ. Full breakdown below 👇

🧵 1/23

Linch: Any chance you're looking for a coauthor in future work? I want to write a survey paper explaining why while jobs extremely similar to mine will be easily automatable, my own skillset is unique and special and require a human touch.

What’s In a Name

Also the periodic reminder that asking ‘is it really reasoning’ is a wrong question.

Yuchen Jin: Ilya Sutskever, in his speech at UToronto 2 days ago:

"The day will come when AI will do all the things we can do."

"The reason is the brain is a biological computer, so why can't the digital computer do the same things?"

It's funny that we are debating if AI can "truly think" or give "the illusion of thinking", as if our biological brain is superior or fundamentally different from a digital brain.

Ilya's advice to the greatest challenge of humanity ever:

"By simply looking at what AI can do, not ignoring it, that will generate the energy that's required to overcome the huge challenge."

If a different name for what is happening would dissolve the dispute, then who cares?

Colin Fraser: The labs are the ones who gave test time compute scaling these grandiose names like “thinking” and “reasoning”. They could have just not called it that.

I don’t see those names as grandiose. I see them as the best practical descriptions in terms of helping people understand what is going on. It seems much more helpful and practical than always saying ‘test time compute scaling.’ Colin suggested ‘long output mode’ and I agree that would set expectations lower, but I don’t think that describes the central thing going on here at all. Instead it makes it sound like the model is just being more verbose.

Inside Apple:

Cast:

Tim (Cook, CEO)

John (Giannandrea, Senior Vice President of Machine Learning & AI Strategy)

Scene: Tim Cook's office, mid-morning

Tim: John, my man, how's it going?

John: It's a'ight, Timbo, a'ight

Tim: How's the new Siri coming along?

John: ... (total silence)

Tim: Fuuuccckkkkk...

John: Look, dude, it's like ... it's tough out there okay.

Tim: You mean we got nothin'?

John: Not nothin' ... how do you feel about super duper autocorrect from an on-device 3B model?

Tim: ... (total silence)

John: Or like get this ... create your own emoji - Genmoji, get it - with diffusion. Like Midjourney, but not as good.

Tim (forehead on desk): Fuck, fuck, fuck ...

John: My dude, don't give up ... we've got this study

Tim (sitting up again): What study?

John: Well, like, it's not like OpenAI or DeepMind-like research but ...

Tim: But what?

John: So Samy (Bengio) and the guys got to thinking ... like, what if we, like, asked LLMs to do things that even a human couldn't do, like a 50,000 step puzzle? And then the LLMs failed?

Tim: And they failed?

John: Yeah. If you don't let them write programs like a human would to solve an equivalent problem ...

Tim: Skip that part. Here's the headline "LLMs can't reason, they only pretend to reason." Get that out today.

This is an interesting example of how illegible the academic publishing world can be to outsiders. As someone who reads a lot of papers, there are some signals that jump out to me, but probably don't seem significant to a typical journalist or "AI fan" on X:

- First, "this paper is from Apple" sends a different signal about credibility to an ML researcher than it does to the general public.

- The lead author is a grad student intern. There are some more senior researchers in the author list, but based on the "equal contribution" asterisk, we are probably looking at an intern project by a grad student researcher and their intern host. This is a signal about the amount of investigative effort and resources behind the paper.

- The meta in ML publishing is to make maximalist claims about the impact and significance of your work. Conference reviewers will typically make you tone it down or qualify your claims, but there is still quite a lot of it in the final papers (which are mostly irrelevant because everyone read your preprint anyway). Everyone has adjusted to expect this and just glosses over those parts when reading papers. This is a preprint, so it hasn't even had an external review process.

If you flip through preprints, most of the big companies put out dozens of papers like this every month - speculative results by small teams, which don't necessarily align with the "beliefs" or goals of the larger company. I think that's mostly a good thing - a good research culture requires making a lot of small bets, most of which won't pan out. But it can be a PR headache when "a grad student intern at Apple posted a preprint about how LLMs behave on puzzles that require long context" is perceived as "Apple says reasoning models don't really work".