This is the endgame. Very soon the session will end, and various bills either will or won’t head to Newsom’s desk. Some will then get signed and become law.

Time is rapidly running out to have your voice impact that decision.

Since my last weekly, we got a variety of people coming in to stand for or against the final version of SB 1047. There could still be more, but probably all the major players have spoken at this point.

So here, today, I’m going to round up all that rhetoric, all those positions, in one place. After this, I plan to be much more stingy about talking about the whole thing, and only cover important new arguments or major news.

I’m not going to get into the weeds arguing about the merits of SB 1047 - I stand by my analysis in the Guide to SB 1047, and the reasons I believe it is a good bill, sir.

I do however look at the revised AB 3211. I was planning on letting that one go, but it turns out it has a key backer, and thus seems far more worthy of our attention.

The Media

I saw two major media positions taken, one pro and one anti.

Neither worried itself about the details of the bill contents.

The Los Angeles Times Editorial Board endorses SB 1047 on the grounds that the Federal Government is not going to step up, relying on an outside view and big picture analysis. I doubt they thought much about the bill’s implementation details.

The Economist is opposed, in a quite bad editorial that calls belief in the possibility of a catastrophic harm ‘quasi-religious’ without argument, uses that to dismiss the bill, and instead calls for regulations that address mundane harms. That’s actually it.

OpenAI Opposes SB 1047

The first half of the story is that OpenAI came out publicly against SB 1047.

They took four pages to state their only criticism in what could have and should have been a Tweet: That it is a state bill and they would prefer this be handled at the Federal level. To which, I say, okay, I agree that would have been first best and that is one of the best real criticisms. I strongly believe we should pass the bill anyway because I am a realist about Congress, do not expect them to act in similar fashion any time soon even if Harris wins and certainly if Trump wins, and if they pass a similar bill that supersedes this one I will be happily wrong.

Except the letter is four pages long, so they can echo various industry talking points, and echo their echoes. In it, they say: Look at all the things we are doing to promote safety, and the bills before Congress, OpenAI says, as if to imply the situation is being handled. Once again, we see the argument ‘this might prevent CBRN risks, but it is a state bill, so doing so would not only not be first best, it would be bad, actually.’

They say the bill would ‘threaten competitiveness’ but provide no evidence or argument for this. They echo, once again without offering any mechanism, reason or evidence, Rep. Lofgren’s unsubstantiated claims that this risks companies leaving California. The same with ‘stifle innovation.’

In four pages, there is no mention of any specific provision that OpenAI thinks would have negative consequences. There is no suggestion of what the bill should have done differently, other than to leave the matter to the Feds. A duck, running after a person, asking for a mechanism.

My challenge to OpenAI would be to ask: If SB 1047 was a Federal law, that left all responsibilities in the bill to the USA AISI and NIST and the Department of Justice, funding a national rather than state Compute fund, and was otherwise identical, would OpenAI then support? Would they say their position is Support if Federal?

Or, would they admit that the only concrete objection is not their True Objection?

I would also confront them with AB 3211, but hold that thought.

My challenge to certain others: Now that OpenAI has come out in opposition to the bill, would you like to take back your claims that SB 1047 would enshrine OpenAI and others in Big Tech with a permanent monopoly, or other such Obvious Nonsense?

Max Tegmark: Jason [Kwon], it will be great if you can clarify *how* you want AI to be regulated rather than just explaining *how not*. Please list specific rules and standards that you want @OpenAI to be legally bound by as long as your competitors are too.

I think this is generous. OpenAI did not explain how not to regulate AI, other than that it should not be by California. I couldn’t find a single thing in the bill that OpenAI was willing to name as something it would not want the Federal Government to do.

Anthony Aguirre: Happy to be proven wrong, but I think the way to interpret this is straightforward.

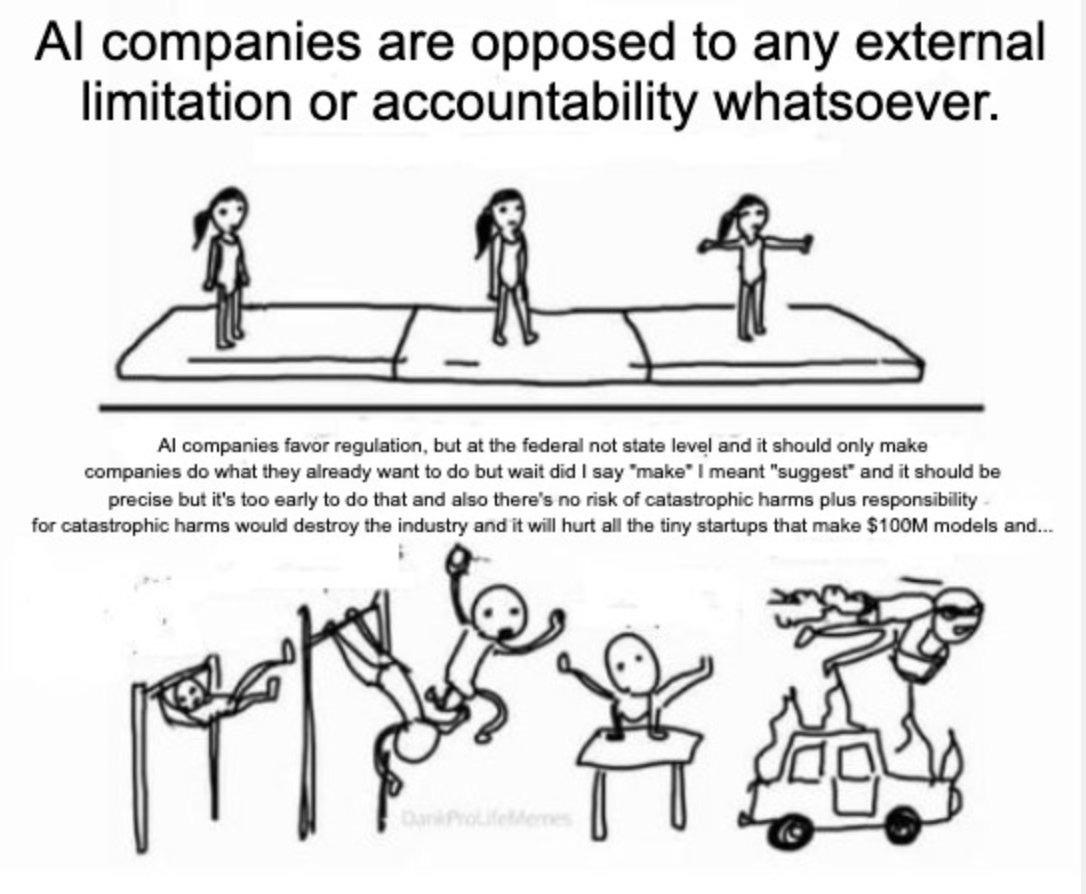

Dylan Matthews: You're telling me that Silicon Valley companies oppose an attempt to regulate their products?

Wow. I didn’t know that. You’re telling me now for the first time.

Obv the fact that OpenAI, Anthropic, etc are pushing against the bill is not proof it's a good idea — some regulations are bad!

But it's like … the most classic story in all of politics, and it's weird how much coverage has treated it as a kind of oddity.

Two former OpenAI employees point out some obvious things about OpenAI deciding to oppose SB 1047 after speaking of the need for regulation. To be fair, Rohit is very right that any given regulation can be bad, but again they only list one specific criticism, and do not say they would support if that criticism were fixed.

OpenAI Backs AB 3211

For SB 1047, OpenAI took four pages to say essentially this one sentence:

OpenAI: However, the broad and significant implications of AI for U.S. competitiveness and national security require that regulation of frontier models be shaped and implemented at the federal level.

So presumably that would mean they oppose all state-level regulations. They then go on to note they support three federal bills. I see those bills as a mixed bag, not unreasonable things to be supporting, but nothing in them substitutes for SB 1047.

Again, I agree that would be the first best solution to do this Federally. Sure.

For AB 3211, they… support it? Wait, what?

Anna Tong (Reuters): ChatGPT developer OpenAI is supporting a California bill that would require tech companies to label AI-generated content, which can range from harmless memes to deepfakes aimed at spreading misinformation about political candidates.

The bill, called AB 3211, has so far been overshadowed by attention on another California state artificial intelligence (AI) bill, SB 1047, which mandates that AI developers conduct safety testing on some of their own models.

…

San Francisco-based OpenAI believes that for AI-generated content, transparency and requirements around provenance such as watermarking are important, especially in an election year, according to a letter sent to California State Assembly member Buffy Wicks, who authored the bill.

You’re supposed to be able to request such things. I have been trying for several days to get a copy of the support letter, getting bounced around by several officials. So far, I got them to say they got my request, but no luck on the actual letter, so we don’t get to see their reasoning, as the article does not say. Nor does it clarify if they offered this support before or after recent changes. The old version was very clearly a no good, very bad bill with a humongous blast radius, although many claim it has since been improved to be less awful.

OpenAI justifies this position by saying ‘there is a role for states to play’ in such issues, despite AB 3211 very clearly being similar to SB 1047 in the degree to which it is a Federal law in California guise. It would absolutely apply outside state lines and impose its rules on everyone. So I don’t see this line of reasoning as valid. Is this saying that preventing CBRN harms at the state level is bad (which they actually used as an argument), but deepfakes don’t harm national security so preventing them at the state level is good? I guess? I mean, I suppose that is a thing one can say.

The bill has changed dramatically from when I looked at it. I am still opposed to it, but much less worried about what might happen if it passed, and supporting it on the merits is no longer utterly insane if you have a different world model. But that world model would have to include the idea that California should be regulating frontier generative AI, at least for audio, video and images.

There are three obvious reasons why OpenAI might support this bill.

The first is that it might be trying to head off other bills. If Newsom is under pressure to sign something, and different bills are playing off against each other, perhaps they think AB 3211 passing could stop SB 1047 or one of many other bills - I’ve only covered the two, RTFB is unpleasant and slow, but there are lots more. Probably most of them are not good.

The second reason is if they believe that AB 3211 would assist them in regulatory capture, or at least be easier for them to comply with than for others and thus give them an advantage.

Which the old version certainly would have done. The central thing the bill intends to do is to require effective watermarking for all AIs capable of fooling humans into thinking they are producing ‘real’ content, and labeling of all content everywhere.

OpenAI is known to have been sitting on a 99.9% effective (by their own measure) watermarking system for a year. They chose not to deploy it, because it would hurt their business - people want to turn in essays and write emails, and would rather the other person not know that ChatGPT wrote them.

As far as we know, no other company has similar technology. It makes sense that they would want to mandate watermarking everywhere.

The third reason is they might actually think this is a good idea, in which case they think it is good for California to be regulating in this way, and they are willing to accept the blast radius, rather than actively welcoming that blast radius or trying to head off other bills. I am… skeptical that this dominates, but it is possible.

What we do now know, even if we are maximally generous, is that OpenAI has no particular issue with regulating AI at the state level.

Anthropic Says SB 1047’s Benefits Likely Exceed Costs

Anthropic sends a letter to Governor Newsom regarding SB 1047, saying its benefits likely exceed its costs. Jack Clark explains.

Jack Clark: Here's a letter we sent to Governor Newsom about SB 1047. This isn't an endorsement but rather a view of the costs and benefits of the bill.

You can read the letter for the main details, but I'd say on a personal level SB 1047 has struck me as representative of many of the problems society encounters when thinking about safety at the frontier of a rapidly evolving industry...

How should we balance precaution with an experimental and empirically driven mindset? How does safety get 'baked in' to companies at the frontier without stifling them? What is the appropriate role for third-parties ranging from government bodies to auditors?

These are all questions that SB 1047 tries to deal with - which is partly why the bill has been so divisive; these are complicated questions for which few obvious answers exist.

Nonetheless, we felt it important to give our view on the bill following its amendments. We hope this helps with the broader debate about AI legislation.

Jack Clark’s description seems accurate. While the letter says that benefits likely exceed costs, it expresses uncertainty on that. It is net positive on the bill, in a way that would normally imply it was a support letter, but makes clear Anthropic and Dario Amodei technically do not support or endorse SB 1047.

So first off, thank you to Dario Amodei and Anthropic for this letter. It is a helpful thing to do, and if this is Dario’s actual point of view then I support him saying so. More people should do that. And the letter’s details are far more lopsided than their introduction suggests, they would be fully compatible with a full endorsement.

Shirin Ghaffary: Anthropic is voicing support for CA AI safety bill SB 1047, saying the benefits outweigh the costs but still stopping short of calling it a full endorsement.

Tess Hegarty: Wow! That’s great from @AnthropicAI. Sure makes @OpenAI and @Meta look kinda behind on the degree of caution warranted here 👀

Dan Hendrycks: Anthropic has carefully explained the importance, urgency, and feasibility of SB 1047 in its letter to @GavinNewsom.

"We want to be clear, as we were in our original support if amended letter, that SB 1047 addresses real and serious concerns with catastrophic risk in AI systems. AI systems are advancing in capabilities extremely quickly, which offers both great promise for California’s economy and substantial risk. Our work with biodefense experts, cyber experts, and others shows a trend towards the potential for serious misuses in the coming years – perhaps in as little as 1-3 years."

Garrison Lovely: Anthropic's letter may be a critical factor in whether CA AI safety bill SB 1047 lives or dies.

The existence of an AI company at the frontier saying that the bill actually won't be a disaster really undermines the 'sky is falling' attitude taken by many opponents.

Every other top AI company has opposed the bill, making the usual anti-regulatory arguments.

Up front, this statement is huge: "In our assessment the new SB 1047 is substantially improved, to the point where we believe its benefits likely outweigh its costs." ... [thread continues]

Simeon: Credit must be given where credit is due. This move from Anthropic is a big deal and must be applauded as such.

Cicero (reminder for full accuracy: Anthropic said ‘benefits likely exceed costs’ but made clear they did not fully support or endorse):

Details of Anthropic’s Letter

The letter is a bit too long to quote in full but consider reading the whole thing. Here’s the topline and the section headings, basically.

Dario Amodei (CEO Anthropic) to Governor Newsom: Dear Governor Newsom: As you may be aware, several weeks ago Anthropic submitted a Support if Amended letter regarding SB 1047, in which we suggested a series of amendments to the bill. Last week the bill emerged from the Assembly Appropriations Committee and appears to us to be halfway between our suggested version and the original bill: many of our amendments were adopted while many others were not.

In our assessment the new SB 1047 is substantially improved, to the point where we believe its benefits likely outweigh its costs. However, we are not certain of this, and there are still some aspects of the bill which seem concerning or ambiguous to us.

In the hopes of helping to inform your decision, we lay out the pros and cons of SB 1047 as we see them, and more broadly we discuss what we see as some key principles for crafting effective and efficient regulation for frontier AI systems based on our experience developing these systems over the past decade.

They say the main advantages are:

Developing SSPs and being honest with the public about them.

Deterrence of downstream harms through clarifying the standard of care.

Pushing forward the science of AI risk reduction.

And these are their remaining concerns:

Some concerning aspects of pre-harm enforcement are preserved in auditing and GovOps.

The bill’s treatment of injunctive relief.

Miscellaneous other issues, basically the KYC provisions, which they oppose.

They also offer principles on regulating frontier systems:

The key dilemma of AI regulation is driven by speed of progress.

One resolution to this dilemma is very adaptable regulation.

Catastrophic risks are important to address.

They see three elements as essential:

Transparent safety and security practices.

Incentives to make safety and security plans effective in preventing catastrophes.

Minimize collateral damage.

As you might expect, I have thoughts.

I would challenge Dario’s assessment that this is only ‘halfway.’ I analyzed the bill last week to compare it to Anthropic’s requests, using the public letter. On major changes, I found they got three, mostly got another two and were refused on one, the KYC issue. On minor issues, they fully got 5, they partially got 3 and they got refused on expanding the reporting time of incidents. Overall, I would say this is at least 75% of Anthropic’s requests weighted by how important they seem to me.

I would also note that they themselves call for ‘very adaptable’ regulation, and that this request is not inherently compatible with this level of paranoia about how things will adapt. SB 1047 is about as flexible as I can imagine a law being here, while simultaneously being this hard to implement in damaging fashion. I’ve discussed those details previously, my earlier analysis stands.

I continue to be baffled by the idea that in a world where AGI is near and existential risks are important, Anthropic is terrified of absolutely any form of pre-harm enforcement. They want to say that no matter how obviously irresponsible you are being, until something goes horribly wrong, we should count purely on deterrence. And indeed, they even got most of what they wanted. But they should understand why that is not a viable strategy on its own.

And I would take issue with their statement that SB 1047 drew so much opposition because it was ‘insufficiently clean,’ as opposed to the bill being the target of a systematic well-funded disinformation campaign from a16z and others, most of whom would have opposed any bill, and who so profoundly misunderstood the bill they successfully killed a key previous provision that purely narrowed the bill, the Limited Duty Exception, without (I have to presume?) realizing what they were doing.

To me, if you take Anthropic’s report at face value, they clear up that many talking points opposing the bill are false, and are clearly saying to Newsom that if you are going to sign an AI regulation bill with any teeth whatsoever, SB 1047 is a good choice for that bill. Even if, given the choice, they’d prefer it with even fewer teeth.

Another way of putting this is that I think it is excellent that Anthropic sent this letter, that it accurately represents the bill (modulo the minor ‘halfway’ line) and I presume also how Anthropic leadership is thinking about it, and I thank them for it.

I wish we had a version of Anthropic where this letter was instead disappointing.

I am grateful we do have at least this version of Anthropic.

Elon Musk Says California Should Probably Pass SB 1047

You know who else is conflicted but ultimately decided SB 1047 should probably pass?

Elon Musk (August 26, 6:59pm eastern): This is a tough call and will make some people upset, but, all things considered, I think California should probably pass the SB 1047 AI safety bill.

For over 20 years, I have been an advocate for AI regulation, just as we regulate any product/technology that is a potential risk to the public.

Notice Elon Musk noticing that this will cost him social capital, and piss people off, and doing it anyway, while also stating his nuanced opinion - a sharp contrast with his usual political statements. A good principle is that when someone says they are conflicted (which can happen in both directions, e.g. Danielle Fong here saying she opposes the bill about at the level Anthropic is in favor of it) it is a good bet they are sincere even if you disagree.

OK, I’ve got my popcorn ready, everyone it’s time to tell us who you are, let’s go.

As in, who understands that Elon Musk has for a long time cared deeply about AI existential risk, and who assumes that any such concern must purely be a mask for some nefarious commercial plot? Who does that thing where they turn on anyone who dares disagree with them, and who sees an honest disagreement?

Bindu Reddy: I am pretty sure Grok-2 wouldn't have caught up to SOTA models without open-source models and techniques

SB-1047 will materially hurt xAI, so why support it?

People can support bills for reasons other than their own narrow self-interest?

Perhaps he might care about existential risk, as evidenced by him talking a ton over the years about existential risk? And that being the reason he helped found OpenAI? From the beginning I thought that move was a mistake, but that was indeed his reasoning. Similarly, his ideas of things like ‘a truth seeking AI would keep us around’ seem to me like Elon grasping at straws and thinking poorly, but he’s trying.

Adam Thierer: You gotta really appreciate the chutzpah of a guy who has spent the last decade effectively evading NHTSA bureaucrats on AV regs declaring that he's a long-time advocate of AI safety. 😂.

Musk has also repeatedly called AI an existential threat to humanity while simultaneously going on a massive hiring spree for AI engineers at X. You gotta appreciate that level of moral hypocrisy!

Meanwhile, Musk is also making it easier for MAGA conservatives to come out in favor of extreme AI regulation with all this nonsense. Regardless of how this plays out in California with this particular bill, this is horrible over the long haul.

Here we have some fun not-entirely-unfair meta-chutzpah given Elon’s views on government and California otherwise, suddenly calling out Musk for doing xAI despite thinking AI is an existential risk (which is actually a pretty great point), and a rather bizarre theory of future debates about regulatory paths.

Step 1: Move out of California

Step 2: Support legislation that’ll hurt California.

Well played Mr. Musk. Well played.

That is such a great encapsulation of the a16z mindset. Everything is a con, everyone has an angle, Musk must be out there trying to hurt his enemies. That must be it. Beff Jezos went with the same angle.

xAI is, of course, still in California.

Jeremy White (Senior California politics reporter, Politico): .@elonmusk and @Scott_Wiener have clashed often, but here Musk -- an early OpenAI co-founder - backs Wiener's AI safety bill contra @OpenAI and much of the tech industry.

Sam D’Amico (CEO Impulse Labs): Honestly good that this issue is one that appears to have no clear partisan valence, yet.

Dean Ball: As I said earlier, I'm not surprised by this, but I do think it's interesting that AI policy continues to be... weird. Certainly nonpartisan. We've got Nancy Pelosi and e/acc on one side, and Elon Musk and Scott Wiener on the other.

I like this about AI policy.

This is an excellent point. Whichever side you are on, you should be very happy the issue remains non-partisan. Let’s all work to keep it that way.

Andrew Critch: Seems like Musk actually read the bill! Congrats to all who wrote and critiqued it until its present form 😀 And to everyone who's causally opposing it based on vibes or old drafts: check again. This is the regulation you want, not crazy backlash laws if this one fails.

Another excellent point and a consistent pattern. Watch who has clearly RTFB (read the bill) especially in its final form, and who has not.

Negative Reactions to Anthropic’s Letter, Attempts to Suppress Dissent

We also have at least one prominent reaction (>600k views) from a bill opponent calling for a boycott of Anthropic, highlighting the statement about benefits likely exceeding costs and making Obvious Nonsense accusations that the bill is some Anthropic plot (I can directly assure you this is not true, or you could, ya know, read the letter, or the bill), confirming how this is being interpreted. To his credit, even Brian Chau noticed this kind of hostile reaction made him uncomfortable, and he warns about the dangers of purity spirals.

Meanwhile Garry Tan (among others, but he’s the one Chau quoted) is doing exactly what Chau warns about, saying things like ‘your API customers will notice how decelerationist you are’ and that is absolutely a threat and an attempt to silence dissent against the consensus. The message, over and over, loud and clear, is: We tolerate no talk that there might be any risk in the room whatsoever, or any move to take safety precautions or encourage them in others. If you dare not go with the vibe they will work to ensure you lose business.

(And of course, everyone who doesn’t think you should go forward with reckless disregard, and ‘move fast and break things,’ is automatically a ‘decel,’ which should absolutely be read in-context the way you would a jingoistic slur.)

It should not be underestimated the extent to which, in the VC-SV core, dissent is being suppressed, with people and companies voicing the wrong support or the wrong vibes risking being cut off from their social networks and funding sources. When there are prominent calls for even the lightest of all support for acting responsibly - such as a non-binding letter saying maybe we should pay attention to safety risks that was so harmless SoftBank signed it - there are calls to boycott everyone in question, on principle.

The thinness of skin is remarkable. They fight hard for the vibes.

Aaron Levie: California should be leading the way on accelerating AI (safely), not creating the template to slow things down. If SB 1047 were written 2 years ago, we would have prevented all the AI progress we’ve seen thus far. We’re simply too early in the state of AI to taper progress.

I like the refreshing clarity of Aaron’s first sentence. He says we should not ‘create the template to slow things down,’ on principle. As in, we should not only not slow things down in exchange for other benefits, we should intentionally not have the ability to, in the future, take actions that might do that. The second sentence then goes on to make a concrete counterfactual claim, also a good thing to do, although I strongly claim that the second sentence is false, such a bill would have done very little.

If you’re wondering why so many in VC/YC/SV worlds think ‘everyone is against SB 1047,’ this kind of purity spiral and echo chamber is a lot of why. Well played, a16z?

Positions In Brief

Yoshua Bengio is interviewed by Shirin Ghaffary of Bloomberg about the need for regulation, and SB 1047 in particular, warning that we are running out of time. Bloomberg took no position I can see, and Bengio’s position is not new.

Dan Hendrycks offers a final op-ed in Time Magazine, pointing out that it is important for the AI industry that it prevent catastrophic harms. Otherwise, it could provoke a large negative reaction. Another externality problem.

Here is a list of industry opposition to SB 1047.

Nathan Labenz (Cognitive Revolution): Don’t believe the SB 1047 hype folks!

Few models thus far created would be covered (only those that cost $100M+), and their developers are voluntarily doing extensive safety testing anyway

I think it’s a prudent step, but I don’t expect a huge impact either way.

Nathan Labenz had a full podcast, featuring both the pro (Nathan Calvin) and the con (Dean Ball) sides.

In the Atlantic, bill author Scott Wiener is interviewed about all the industry opposition, insisting this is ‘not a doomer bill’ or focused on ‘science fiction risks.’ He is respectful towards most bill opponents, but does not pretend that a16z isn’t running a profoundly dishonest campaign.

I appreciated this insightful take on VCs who oppose SB 1047.

Liron Shapira: > The anti-SB 1047 VCs aren't being clear and constructive in their rejection.

Have you ever tried to fundraise from a VC?

Indeed I have. At least here they tell you they’re saying no. Now you want them to tell you why and how you can change their minds? Good luck with that.

Lawrence Chan does an RTFB, concludes it is remarkably light touch and a good bill. He makes many of the usual common sense points - this covers zero existing models, will never cover anything academics do, and (he calls it a ‘spicy take’) if you cannot take reasonable care doing something then have you considered not doing it?

Mike Knoop, who previously opposed SB 1047 because he does not think AGI progress is happening and believes that anything slowing down AGI progress would be bad, updates to believing it is a ‘no op’ that doesn’t do anything but could reassure the worried and head off worse other actions. But if the bill actually did anything, he would oppose. This is a remarkably common position, that there is no cost-benefit analysis to be done when building things smarter than humans. They think this is a situation where no amount of safety is worth any amount of potentially slowing down if there was a safety issue, so they refuse to talk price. The implications are obvious.

Aidan McLau of Topology AI says:

Aidan McLau: As a capabilities researcher, accelerationist, libertarian, and ai founder... I'm coming out of the closet. I support sb 1047.

growing up, you realize we mandate seatbelts and licenses to de-risk outlawing cars. Light and early regulation is the path of optimal acceleration.

the bill realllllllllllllly isn't that bad

if you have $100m to train models (no startup does), you can probably afford some auditing. Llama will be fine.

But if CA proposes some hall monitor shit, I'll be the first to oppose them. Stay vigilant.

I think there's general stigma about supporting regulation as an ai founder, but i've talked to many anti-sb 1047 people who are smart, patient, and engage in fair discourse.

Daniel Eth: Props to Aidan for publicly supporting SB1047 while working in the industry. I know a bunch of you AI researchers out there quietly support the bill (lots of you guys at the big labs like my pro-SB1047 tweets) - being public about that support is commendable & brave.

Justin Harvey (co-founder AIVideo.com): I generally support SB 1047

I hate regulation. I want AI to go fast. I don’t trust the competency of the government.

But If you truly believe this will be the most powerful technology ever created, idk. This seems like a reasonable first step tbh.

Notice how much the online debate has always been between libertarians and more extreme libertarians. Everyone involved hates regulation. The public, alas, does not.

Witold Wnuk makes the case that the bill is sufficiently weak that it will de facto be moral license for the AI companies to go ahead and deal with the consequences later, and the blame when models go haywire will thus also be on those who passed this bill, and that this does nothing to solve the problem. As I explained in my guide, I very much disagree and think this is a good bill. And I don’t think this bill gives anyone ‘moral license’ at all. But I understand the reasoning.

Stephen Casper notices that the main mechanism of SB 1047 is basic transparency, and that it does not bode well that industry is so vehemently against this and it is so controversial. I think he goes too far in terms of how he describes how difficult it would be to sue under the bill, he’s making generous (to the companies) assumptions, but the central point here seems right.

Postscript: AB 3211 RTFBC (Read the Bill Changes)

One thing California does well is show you how a bill has changed since last time. So rather than having to work from scratch, we can look at the diff.

We’ll start with a brief review of the old version (abridged a bit for length). Note that some of this was worded badly in ways that might backfire quite a lot.

Authentic content is created by humans.

Inauthentic content is created by AIs and could be mistaken for authentic.

The bill applies to every individual, no size thresholds at all.

Providers of any size must ‘to the extent possible’ place ‘imperceptible and maximally indelible’ watermarks on all content, along with watermark decoders.

Grandfathering in old systems requires a 99% accurate detector.

We now know that OpenAI thinks it knows how to do that.

No one else, to our knowledge, is close. Models would be banned.

Internet hosting platforms are responsible for ensuring indelible watermarks.

All failures must be reported within 24 hours.

All AI that could produce inauthentic content requires notification for each conversation, including audio notification for every audio interaction.

New cameras have to provide watermarks.

Large online platforms (1 million California users, not only social media but also e.g. texting systems) shall use labels on every piece of content to mark it as human or AI, or some specific mix of the two. For audio that means a notice at the beginning and another one at the end, every time, for all messages AI or human. Also you check a box on every upload.

Fines are up to $1 million or 5% of global annual revenue, EU style.

All existing open models are toast. New open models might or might not be toast. It doesn’t seem possible to comply with an open model, on the law’s face.

All right, let’s see what got changed and hopefully fixed, excluding stuff that seems to be for clarity or to improve grammar without changing the meaning.

There is a huge obvious change up front: Synthetic content now only includes images, videos and audio. The bill no longer cares about LLMs or text at all.

A bunch of definitions changed in ways that don’t alter my baseline understanding.

Large online platform no longer includes internet website, web application or digital application. It now has to be either a social media platform, messaging platform, advertising network or standalone search engine that displays content to viewers who are not the creator or collaborator, and the threshold has gone up to 2 million monthly unique California users.

Generative AI providers have to make available to the public a provenance detection tool or permit users to use one provided by a third party, based on industry standards, that detects generative AI content and how that content was created. There is no minimum size threshold for the provider before they must do this.

Summaries of testing procedures must be made available upon request to academics, except when that would compromise the method.

A bunch of potentially crazy disclosure requirements got removed.

The thing about audio disclosures happening twice is gone.

Users of platforms need not label every piece of data now. Instead the platform scans the data and reports any provenance data contained therein, or says it is unknown if none is found.

There are new disclosure rules around the artist, track and copyright information on sound recordings and music videos, requiring the information be displayed in text.

I think those are the major changes, and they are indeed major. I am no longer worried AB 3211 is going to do anything too dramatic. At worst it applies only to audio, video and images, the annoyance levels involved are down a lot, the standards for compliance are lower, and compliance in these other formats seems easier than text.

My new take on the new AB 3211 is that this is a vast improvement. If nothing else, the blast radius is vastly diminished.

Is it now a good bill?

I wouldn’t go that far. It’s still not a great implementation. I don’t think deepfakes are a big enough issue to motivate this level of annoyance, or the tail risk that this is effectively a much broader burden than it appears. But the core thing it is attempting to do is no longer a crazy thing to attempt, and the worst dangers are gone. I think the costs exceed the benefits, but you could make a case, if you felt deepfake audio and video were a big short term deal, that this bill has more benefits than costs.

What you cannot reasonably do is support this bill, then turn around and say that California should not be regulating AI and should let the Federal government do it. That does not make any sense. I have confidence the Federal government will if necessary deal with deepfakes, that we could safely react after the problem gets worse, and that being modestly ‘too late’ to it would not be a big deal.

Thanks as always, Zvi! Your arguments have persuaded me to support SB 1047.

Regarding the AB 3211 discussion: as the creator of the “99% accurate” text watermarking method that OpenAI has had for two years, I can say with absolute certainty that there’s nothing there that any AI provider couldn’t easily replicate (and I and others have explained the algorithms publicly). Admittedly, no known watermarking method is robust against attacks like paraphrasing or translating the output, but that’s a different question. I still think creating more friction to the surreptitious use of LLMs, or at least getting real-world data about what happens when that’s tried, seems like a clear win. But I’m not sure what sort of coordination between AI companies and/or legislation will get us to that place that so many of the stakeholders seem to support in principle, but that’s been so frustratingly hard to put into practice. Above my pay grade! :-)

> So presumably that would mean they [OpenAI] oppose all state-level regulations.

Here's the text of the third to last paragraph:

> While we believe the federal government should lead in regulating frontier AI models to account for implications to national security and competitiveness, we recognize there is also a role for states to play. States can develop targeted AI policies to address issues like potential bias in hiring, deepfakes, and help build essential AI infrastructure, such as data centers and power plants, to drive economic growth and job creation. OpenAI is ready to engage with state lawmakers in California and elsewhere in the country who are working to craft this kind of AI-specific legislation and regulation.