Yes, AI Continues To Make Rapid Progress, Including Towards AGI

That does not mean AI will successfully make it all the way to AGI and superintelligence, or that it will make it there soon or on any given time frame.

It does mean that AI progress, while it could easily have been even faster, has still been historically lightning fast. It has exceeded almost all expectations from more than a few years ago. And it means we cannot disregard the possibility of High Weirdness and profound transformation happening within a few years.

GPT-5 had a botched rollout and was only an incremental improvement over o3, o3-Pro and other existing OpenAI models, but was very much on trend and a very large improvement over the original GPT-4. Nor would one disappointing model from one lab have meant that major further progress must be years away.

Imminent AGI (in the central senses in which the term AGI is used, where imminent means years rather than decades) remains a very real possibility.

Part of this is covering in full Gary Marcus’s latest editorial in The New York Times, since that is the paper of record read by many in government. I felt that piece was in many places highly misleading to the typical Times reader.

Imagine if someone said ‘you told me in 1906 that there was increasing imminent risk of a great power conflict, and now it’s 1911 and there has been no war, so your fever dream of a war to end all wars is finally fading.’ Or saying that you were warned in November 2019 that Covid was likely coming, and now it’s February 2020 and no one you know has it, so it was a false alarm. That’s what these claims sound like to me.

Why Do I Even Have To Say This?

I have to keep emphasizing this because it now seems to be an official White House position, with prominent White House official Sriram Krishnan going so far as to say on Twitter that AGI any time soon has been ‘disproven,’ and David Sacks spending his time ranting and repeating Nvidia talking points almost verbatim.

When pressed, there is often a remarkably narrow window in which ‘imminent’ AGI is dismissed as ‘proven wrong.’ But this is still used as a reason to structure public policy and one’s other decisions in life as if AGI definitely won’t happen for decades, which is Obvious Nonsense.

Sriram Krishnan: I’ll write about this separately but think this notion of imminent AGI has been a distraction and harmful and now effectively proven wrong.

Prinz: "Imminent AGI" was apparently "proven wrong" because OpenAI chose to name a cheap/fast model "GPT-5" instead of o3 (could have been done 4 months earlier) or the general reasoning model that won gold on both the IMO and the IOI (could have been done 4 months later).

Rob Miles: I'm a bit confused by all the argument about GPT-5, the truth seems pretty mundane: It was over-hyped, they kind of messed up the launch, and the model is good, a reasonable improvement, basically in line with the projected trend of performance over time.

Not much of an update.

To clarify a little, the projected trend GPT-5 fits with is pretty nuts, and the world is on track to be radically transformed if it continues to hold. Probably we're going to have a really wild time over the next few years, and GPT-5 doesn't update that much in either direction.

Rob Miles is correct here as far as I can tell.

If imminent means ‘within the next six months’ or maybe up to a year I think Sriram’s perspective is reasonable, because of what GPT-5 tells us about what OpenAI is cooking. For sensible values of imminent that are more relevant to policy and action, Sriram Krishnan is wrong, in a ‘I sincerely hope he is engaging in rhetoric rather than being genuinely confused about this, or his imminent only means in the next year or at most two’ way.

I am confused how he can be sincerely mistaken given how deep he is into these issues. I hope he shares his reasons so we can quickly clear this up, because this is a crazy thing to actually believe. I do look forward to Sriram providing a full explanation as to why he believes this. So far we have only heard ‘GPT-5.’

It Might Be Coming

Not only is imminent AGI not disproven, there are continuing important claims that it is likely. Here is some clarity on Anthropic’s continued position, as of August 31.

Prinz: Jack, I assume no changes to Anthropic's view that transformative AI will arrive by the end of next year?

Jack Clark: I continue to think things are pretty well on track for the sort of powerful AI system defined in machines of loving grace - buildable end of 2026, running many copies 2027. Of course, there are many reasons this could not occur, but lots of progress so far.

Anthropic’s valuation has certainly been on a rocket ship exponential.

Do I agree that we are on track to meet that timeline? No. I do not. I would be very surprised to see it go down that fast, and I am surprised that Jack Clark has not updated based on, if nothing else, previous projections by Anthropic CEO Dario Amodei falling short. I do think it cannot be ruled out. If it does happen, I do not think you have any right to be outraged at the universe for it.

It is certainly true that Dario Amodei’s early prediction of AI writing most of the code, as in 90% of all code within 3-6 months after March 11, fell short. This was not a good prediction, because the previous generation definitely wasn’t ready and even if it had been that’s not how diffusion works, and it has been proven definitively false. It’s more like 40% of all code generated by AI and 20%-25% of what goes into production.

Which is still a lot, but a lot less than 90%.

Here’s what I said at the time about Dario’s prediction:

Zvi Mowshowitz (AI #107): Dario Amodei says AI will be writing 90% of the code in 6 months and almost all the code in 12 months. I am with Arthur B here, I expect a lot of progress and change very soon but I would still take the other side of that bet. The catch is: I don’t see the benefit to Anthropic of running the hype machine in overdrive on this, at this time, unless Dario actually believed it.

I continue to be confused why he said it, it’s highly unstrategic to hype this way. I can only assume on reflection this was an error about diffusion speed more than it was an error about capabilities? On reflection yes I was correctly betting ‘no’ but that was an easy call. I dock myself more points on net here, for hedging too much and not expressing the proper level of skepticism. So yes, this should push you towards putting less weight on Anthropic’s projections, although primarily on the diffusion front.

As always, remember that projections of future progress include the possibility, nay the inevitability, of discovering new methods. We are not projecting ‘what if the AI labs all keep ramming their heads against the same wall whether or not it works.’

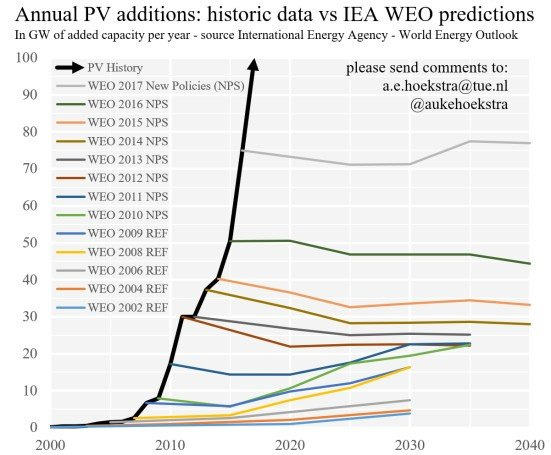

Ethan Mollick: 60 years of exponential growth in chip density was achieved not through one breakthrough or technology, but a series of problems solved and new paradigms explored as old ones hit limits.

I don't think current AI has hit a wall, but even if it does, there many paths forward now.

Paul Graham: One of the things that strikes me when talking to AI insiders is how they believe both that they need several new discoveries to get to AGI, and also that such discoveries will be forthcoming, based on the past rate.

My talks with AI insiders also say we will need new discoveries, and we definitely will need new major discoveries in alignment. But it’s not clear how big those new discoveries need to be in order to get there.

I agree with Ryan Greenblatt that precise timelines for AGI don’t matter that much in terms of actionable information, but big jumps in the chance of things going crazy within a few years can matter a lot more. This is similar to questions of p(doom), where as long as you are in the Leike Zone of a 10%-90% chance of disaster, you mostly want to react in the same ways, but outside that range you start to see big changes in what makes sense.

Ryan Greenblatt: Pretty short timelines (<10 years) seem likely enough to warrant strong action and it's hard to very confidently rule out things going crazy in <3 years.

While I do spend some time discussing AGI timelines (and I've written some posts about it recently), I don't think moderate quantitative differences in AGI timelines matter that much for deciding what to do. For instance, having a 15-year median rather than a 6-year median doesn't make that big of a difference. That said, I do think that moderate differences in the chance of very short timelines (i.e., less than 3 years) matter more: going from a 20% chance to a 50% chance of full AI R&D automation within 3 years should potentially make a substantial difference to strategy.

Additionally, my guess is that the most productive way to engage with discussion around timelines is mostly to not care much about resolving disagreements, but then when there appears to be a large chance that timelines are very short (e.g., >25% in <2 years) it's worthwhile to try hard to argue for this. I think takeoff speeds are much more important to argue about when making the case for AI risk.

I do think that having somewhat precise views is helpful for some people in doing relatively precise prioritization within people already working on safety, but this seems pretty niche.

Given that I don't think timelines are that important, why have I been writing about this topic? This is due to a mixture of: I find it relatively quick and easy to write about timelines, my commentary is relevant to the probability of very short timelines (which I do think is important as discussed above), a bunch of people seem interested in timelines regardless, and I do think timelines matter some.

Consider reflecting on whether you're overly fixated on details of timelines.

A Prediction That Implies AGI Soon

Jason Calacanis of the All-In Podcast (where he is alongside AI Czar David Sacks) has a bold prediction, if you believe that his words have or are intended to have meaning. Which is an open question.

Jason: Before 2030 you're going to see Amazon, which has massively invested in [AI], replace all factory workers and all drivers … It will be 100% robotic, which means all of those workers are going away. Every Amazon worker. UPS, gone. FedEx, gone.

Aaron Slodov: hi @Jason how much money can i bet you to take the other side of the factory worker prediction?

Jason (responding to video of himself saying the above): In 2035 this will not be controversial take — it will be reality.

Hard, soul-crushing labor is going away over the next decade. We will be deep in that transition in 2030, when humanoid robots are as common as bicycles.

Notice the goalpost move of ‘deep in that transition’ in 2030 versus saying full replacement by 2030, without seeming to understand there is any contradiction.

These are two very different predictions. The original ‘by 2030’ prediction is Obvious Nonsense unless you expect superintelligence and a singularity, probably involving us all dying. There’s almost zero chance otherwise. Technology does not diffuse that fast.

Plugging 2035 into the 2030 prediction is also absurd, if we take the prediction literally. No, you’re not going to have zero workers at Amazon, UPS and FedEx within ten years unless we’ve not only solved robotics and AGI, we’ve also diffused those technologies at full scale. In which case, again, that’s a singularity.

I am curious what his co-podcaster David Sacks or Sriram Krishnan would say here. Would they dismiss Jason’s confident prediction as already proven false? If not, how can one be confident that AGI is far? Very obviously you can’t have one without the other.

GPT-5 Was On Trend

GPT-5 is not a good reason to dismiss AGI, and to be safe I will once again go into why, and why we are making rapid progress towards AGI.

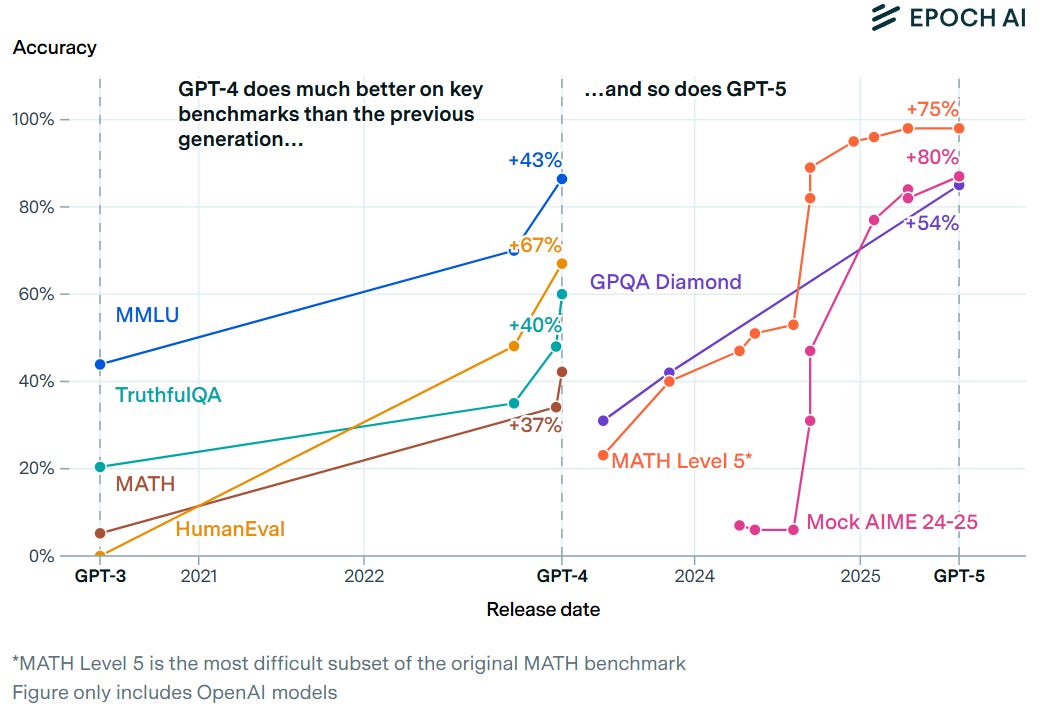

GPT-5 and GPT-4 were both major leaps in benchmarks from the previous generation.

The differences are dramatic, and the time frame between releases was similar.

The actual big difference? That there was only one incremental release between GPT-3 and GPT-4, GPT-3.5, with little outside competition. Whereas between GPT-4 and GPT-5 we saw many updates. At OpenAI alone we saw GPT-4o, and o1, and o3, plus updates that didn’t involve number changes, and at various points Anthropic’s Claude and Google’s Gemini were plausibly on top. Our frog got boiled slowly.

Epoch AI: However, one major difference between these generations is release cadence. OpenAI released relatively few major updates between GPT-3 and GPT-4 (most notably GPT-3.5). By contrast, frontier AI labs released many intermediate models between GPT-4 and 5. This may have muted the sense of a single dramatic leap by spreading capability gains over many releases.

Benchmarks can be misleading, especially as we saturate essentially all of them often well ahead of predicted schedules, but the overall picture is not. The mundane utility and user experience jumps across all use cases are similarly dramatic. The original GPT-4 was a modest aid to coding, GPT-5 and Opus 4.1 transform how it is done. Most of the queries I make with GPT-5-Thinking or GPT-5-Pro would not be worth bothering to give to the original GPT-4, or providing the context would not even be possible. So many different features have been improved or added.

The Myths Of Model Equality and Lock-In

These ideas, frequently pushed by David Sacks among others, that everyone’s models are about the same and aren’t improving? These claims simply are not true. Observant regular users are not about to be locked into one model or ecosystem.

Everyone’s models are constantly improving. No one would seriously consider using models from the start of the year for anything but highly esoteric purposes.

The competition is closer than one would have expected. There are three major labs, OpenAI, Anthropic and Google, that each have unique advantages and disadvantages. At various times each has had the best model, and yes currently it is wise to mix up your usage depending on your particular use case.

Those paying attention are always ready to switch models. I’ve switched primary models several times this year alone, usually switching to a model from a different lab, and tested many others as well. And indeed we must switch models often either way, as it is expected that everyone’s models will change on the order of every few months, in ways that break the same things that would break if you swapped GPT-5 for Opus or Gemini or vice versa, all of which one notes typically run on three distinct sets of chips (Nvidia for GPT-5, Amazon Trainium for Anthropic and Google TPUs for Gemini) but we barely notice.

The Law of Good Enough

Most people notice AI progress much better when it impacts their use cases.

If you are not coding, and not doing interesting math, and instead asking simple things that do not require that much intelligence to answer correctly, then upgrading the AI’s intelligence is not going to improve your satisfaction levels much.

Jack Clark: Five years ago the frontier of LLM math/science capabilities was 3 digit multiplication for GPT-3. Now, frontier LLM math/science capabilities are evaluated through condensed matter physics questions. Anyone who thinks AI is slowing down is fatally miscalibrated.

David Shapiro: As I've said before, AI is "slowing down" insofar as most people are not smart enough to benefit from the gains from here on out.

Floor Versus Ceiling

Once you see this framing, you see the contrast everywhere.

Patrick McKenzie: I think a lot of gap between people who “get” LLMs and people who don’t is that some people understand current capabilities to be a floor and some people understand them to be either a ceiling or close enough to a ceiling.

And even if you explain “Look this is *obviously* a floor” some people in group two will deploy folk reasoning about technology to say “I mean technology decays in effectiveness all the time.” (This is not considered an insane POV in all circles.)

And there are some arguments which are persuasive to… people who rate social pressure higher than received evidence of their senses… that technology does actually frequently regress.

For example, “Remember how fast websites were 20 years ago before programmers crufted them up with ads and JavaScript? Now your much more powerful chip can barely keep up. Therefore, technological stagnation and backwards decay is quite common.”

Some people would rate that as a powerful argument. Look, it came directly from someone who knew a related shibboleth, like “JavaScript”, and it gestures in the direction of at least one truth in observable universe.

<offtopic> Oh the joys of being occasionally called in as the Geek Whisperer for credentialed institutions where group two is high status, and having to titrate how truthful I am about their worldview to get message across. </offtopic>

As in, it’s basically this graph but for AI:

Here’s another variant of this foolishness, note the correlation to ‘hitting a wall’:

Prem Kumar Aparanji: It's not merely the DL "hitting a wall" (as @GaryMarcus put it & everybody's latched on) now as predicted, even the #AI data centres required for all the training, fine-tuning, inferencing of these #GenAI models are also now predicted to be hitting a wall soon.

Quotes from Futurism: For context, Kupperman notes that Netflix brings in just $39 billion in annual revenue from its 300 million subscribers. If AI companies charged Netflix prices for their software, they’d need to field over 3.69 billion paying customers to make a standard profit on data center spending alone — almost half the people on the planet.

“Simply put, at the current trajectory, we’re going to hit a wall, and soon,” he fretted. “There just isn’t enough revenue and there never can be enough revenue. The world just doesn’t have the ability to pay for this much AI.”

Prinz: Let's assume that AI labs can charge as much as Netflix per month (they currently charge more) and that they'll never have any enterprise revenue (they already do) and that they won't be able to get commissions from LLM product recommendations (will happen this year) and that they aren't investing in biotech companies powered by AI that will soon have drugs in human trial (they already have). How will they ever possibly be profitable?
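The arithmetic in the Futurism quote is easy to check. Here is a minimal sketch using only the three figures quoted above ($39 billion, 300 million subscribers, 3.69 billion customers). The variable names, the reading of the 3.69 billion figure as implying an annual revenue target, and the $20 per month price are my illustrative assumptions, not numbers from Kupperman or Prinz.

```python
# Figures quoted in the Futurism excerpt above.
NETFLIX_ANNUAL_REVENUE = 39e9   # dollars per year
NETFLIX_SUBSCRIBERS = 300e6
REQUIRED_CUSTOMERS = 3.69e9     # paying customers claimed to be needed

# Implied Netflix price per subscriber.
price_per_year = NETFLIX_ANNUAL_REVENUE / NETFLIX_SUBSCRIBERS
price_per_month = price_per_year / 12

# Annual revenue target implied by 3.69 billion customers at Netflix prices.
implied_revenue_target = REQUIRED_CUSTOMERS * price_per_year


def customers_needed(monthly_price: float) -> float:
    """Paying customers needed to hit the implied target at a given monthly price."""
    return implied_revenue_target / (monthly_price * 12)


# Illustrative assumption only, not a figure from the text.
HYPOTHETICAL_MONTHLY_PRICE = 20.0

print(f"Netflix price: ${price_per_year:.0f}/year, ${price_per_month:.2f}/month")
print(f"Implied revenue target: ${implied_revenue_target / 1e9:.1f} billion/year")
print(f"Customers needed at ${HYPOTHETICAL_MONTHLY_PRICE:.0f}/month: "
      f"{customers_needed(HYPOTHETICAL_MONTHLY_PRICE) / 1e9:.2f} billion")
```

The point of the sketch is only that the headline customer count is highly sensitive to the assumed price per customer, which is the lever Prinz is pointing at. It says nothing about whether the implied revenue target is itself the right one.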

New York Times Platforms Gary Marcus Saying Gary Marcus Things

He wrote a guest opinion essay. Things didn’t go great.

That starts with the false title (as always, not entirely up to the author, and it looks like it started out as a better one), dripping with unearned condescension, ‘The Fever Dream of Imminent ‘Superintelligence’ Is Finally Breaking,’ and the opening paragraph in which he claims Altman implied GPT-5 would be AGI.

Here is the lead:

GPT-5, OpenAI’s latest artificial intelligence system, was supposed to be a game changer, the culmination of billions of dollars of investment and nearly three years of work. Sam Altman, the company’s chief executive, implied that GPT-5 could be tantamount to artificial general intelligence, or A.G.I. — A.I. that is as smart and as flexible as any human expert.

Instead, as I have written, the model fell short. Within hours of its release, critics found all kinds of baffling errors: It failed some simple math questions, couldn’t count reliably and sometimes provided absurd answers to old riddles. Like its predecessors, the A.I. model still hallucinates (though at a lower rate) and is plagued by questions around its reliability. Although some people have been impressed, few saw it as a quantum leap, and nobody believed it was A.G.I. Many users asked for the old model back.

GPT-5 is a step forward but nowhere near the A.I. revolution many had expected. That is bad news for the companies and investors who placed substantial bets on the technology.

Did you notice the stock market move in AI stocks, as those bets fell down to Earth when GPT-5 was revealed? No? Neither did I.

The argument above is highly misleading on many fronts.

GPT-5 is not AGI, but this was entirely unsurprising - expectations were set too high, but nothing like that high. Yes, Altman teased that it was possible AGI could arrive relatively soon, but at no point did Altman claim that GPT-5 would be AGI, or that AGI would arrive in 2025. Approximately zero people had median estimates of AGI in 2025 or earlier, although there are some that have estimated the end of 2026, in particular Anthropic (they via Jack Clark continue to say ‘powerful’ AI buildable by end of 2026, not AGI arriving 2026).

The claim that it ‘couldn’t count reliably’ is especially misleading. Of course GPT-5 can count reliably. The evidence here is a single adversarial example. For all practical purposes, if you ask GPT-5 to count something, it will count that thing.

The ‘old riddles’ claim is highly misleading. If you give it an actual old riddle it will nail it. What GPT-5 and other models get wrong are, again, adversarial examples that do not exist ‘in the wild’ but are crafted to pattern match well-known other riddles while having a different answer. Why should we care?

GPT-5 still is not fully reliable but this is framed as it being still highly unreliable, when in most circumstances this is not the case. Yes, if you need many 9s of reliability LLMs are not yet for you, but neither are humans.

AI valuations and stocks continue to rise, not fall.

Yes, the fact that OpenAI chose to have GPT-5 not be a scaled up model does tell us that directly scaling up model size alone has ‘lost steam’ in relative terms due to the associated costs, but this is not news, o1 and o3 (and GPT-4.5) tell us this as well. We are now working primarily on scaling and improving in other ways, but very much there are still plans to scale up more in the future. In the context of all the other facts quoted about other scaled up models, it seems misleading to many readers to not mention that GPT-5 is not scaled up.

Claims here are about failures of GPT-5-Auto or GPT-5-Base, whereas the ‘scaled up’ version of GPT-5 is GPT-5-Pro or at least GPT-5-Thinking.

Gary Marcus clarifies that his actual position is on the order of 8-15 years to AGI, with 2029 being ‘awfully unlikely.’ Which is a highly reasonable timeline, but that seems pretty imminent. That’s crazy soon. That’s something I would want to be betting on heavily, and preparing for at great cost. AGI that soon seems like the most important thing happening in the world right now, if likely true?

The article does not give any particular timeline, and does not imply we will never get to AGI, but I very much doubt those reading the post would come away with the impression that things strictly smarter than people are only about 10 years away. I mean, yowsers, right?

The fact about ‘many users asked for the old model back’ is true, but lacking the important context that what users wanted was the old personality, so it risks giving an uninformed user the wrong impression.

To Gary’s credit, he then does hedge, as I included in the quote, acknowledging GPT-5 is indeed a good model representing a step forward. Except then:

And it demands a rethink of government policies and investments that were built on wildly overinflated expectations.

Um, no? No it doesn’t. That’s silly.

The current strategy of merely making A.I. bigger is deeply flawed — scientifically, economically and politically. Many things, from regulation to research strategy, must be rethought.

…

As many now see, GPT-5 shows decisively that scaling has lost steam.

Again, no? That’s not the strategy. Not ‘merely’ doing that. Indeed, a lot of the reason GPT-5 was so relatively unimpressive was that GPT-5 was not scaled up so much. It was instead optimized for compute efficiency. There is no reason to have to rethink much of anything in response to a model that, as explained above, was pretty much exactly on the relevant trend lines.

I do appreciate this:

Gary Marcus: However, as I warned in a 2022 essay, “Deep Learning Is Hitting a Wall,” so-called scaling laws aren’t physical laws of the universe like gravity but hypotheses based on historical trends.

As in, the ‘hitting the wall’ claim was back in 2022. How did that turn out? Look at GPT-5, look at what we had available in 2022, and tell me we ‘hit a wall.’

Gary Marcus Does Not Actually Think AGI Is That Non-Imminent

What does ‘imminent’ superintelligence mean in this context?

Gary Marcus (NYT): The chances of A.G.I.’s arrival by 2027 now seem remote.

Notice the subtle goalpost move, as AGI ‘by 2027’ means AGI 2026. These people are gloating, in advance, that someone predicted a possibility of privately developed AGI in 2027 (with a median in 2028, in the AI 2027 scenario OpenBrain tells the government but does not release its AGI right away to the public) and then AGI will have not arrived, to the public, in 2026.

According to my sources (Opus 4.1 and GPT-5 Thinking) even ‘remote’ still means on the order of 2% chance in the next 16 months, implying an 8%-25% chance in 5 years. I don’t agree, but even if one did, that’s hardly something one can safely rule out.
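For a sense of how a 16-month probability stretches to 5 years, here is a minimal sketch under a constant-hazard assumption. That assumption, and the function name, are mine rather than anything stated above. It treats each 16-month window as independently carrying the same 2% chance.

```python
def extrapolate_constant_hazard(p_short: float, months_short: float,
                                months_long: float) -> float:
    """Chance of arrival within months_long, given chance p_short within
    months_short, ASSUMING a constant hazard rate (an illustrative assumption)."""
    survival_short = 1.0 - p_short
    return 1.0 - survival_short ** (months_long / months_short)


# 2% over 16 months, stretched to 60 months (5 years).
p_five_years = extrapolate_constant_hazard(0.02, 16, 60)
print(f"{p_five_years:.1%}")
```

With a flat hazard rate this comes out a little above 7%, just under the low end of the quoted range. The rest of the 8%-25% range would presumably correspond to a per-year chance that rises over time, which is a different modeling assumption than the one sketched here.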

But then, there’s this interaction on Twitter that clarifies what Gary Marcus meant:

Gary Marcus: Anyone who thinks AGI is impossible: wrong.

Anyone who thinks AGI is imminent: just as wrong.

It’s not that complicated.

Peter Wildeford: what if I think AGI is 4-15 years away?

Gary Marcus: 8-15 and we might reach an agreement. 4 still seems awfully unlikely to me. to many core cognitive problems aren’t really being addressed, and solutions may take a while to roll once we find the basic insights we are lacking.

But it’s a fair question.

That’s a highly reasonable position one can take. Awfully unlikely (but thus possible) in four years, likely in 8-15, median timeline of 2036 or so.

Notice that on the timescale of history, 8-15 years until likely AGI, the most important development in the history of history if and when it happens, seems actually kind of imminent and important? That should demand an aggressive policy response focused on what we are going to do when we get there, not be treated as a reason to dismiss this?

Imagine saying, in 2015, ‘I think AGI is far away, we’re talking 18-25 years’ and anticipating the looks you would get.

The rest of the essay is a mix of policy suggestions and research direction suggestions. If indeed he is right about research directions, of which I am skeptical, we would still expect to see rapid progress soon as the labs realize this and pivot.

Can Versus Will Versus Always, Typical Versus Adversarial

A common tactic among LLM doubters, which was one of the strategies used in the NYT editorial, is to show a counterexample, where a model fails a particular query, and say ‘model can’t do [X]’ or the classic Colin Fraser line of ‘yep it’s dumb.’

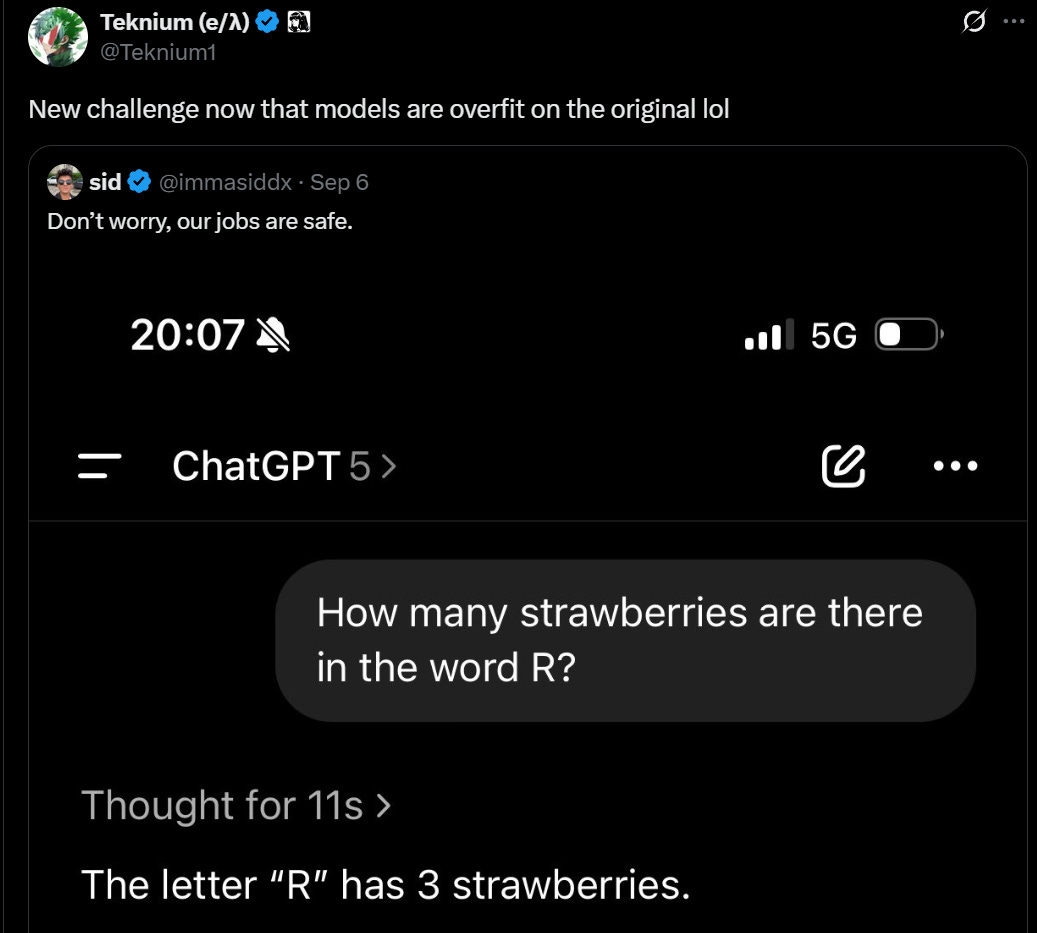

Here’s a chef's kiss example I saw on Monday morning:

I mean, that’s very funny, but it is rather obvious how it happened with the strawberries thing all over Twitter and thus the training data, and it tells us very little about overall performance.

In such situations, we have to differentiate between different procedures, the same as in any other scientific experiment. As in:

Did you try to make it fail, or try to set it up to succeed? Did you choose an adversarial or a typical example? Did you get this the first time you tried it or did you go looking for a failure? Are you saying it ‘can’t [X]’ because it can’t ever do [X], because it can’t ever do [X] out of the box, it can’t reliably do [X], or it can’t perfectly do [X], etc?

If you conflate ‘I can elicit wrong answers on [X] if I try’ with ‘it can’t do [X]’ then the typical reader will have a very poor picture.

Daniel Litt (responding to NYT article by Gary Marcus that says ‘[GPT-5] failed some simple math questions, couldn’t count reliably’): While it’s true one can elicit poor performance on basic math question from frontier models like GPT-5, IMO this kind of thing (in NYTimes) is likely to mislead readers about their math capabilities.

Derya Unutmaz: AI misinformation at the NYT is at its peak. What a piece of crap “newspaper” it has become. It’s not even worth mentioning the author of this article-but y’all can guess. Meanwhile, just last night I posted a biological method invented by GPT-5 Pro, & I have so much more coming!

Ethan Mollick: This is disappointing. Purposefully underselling what models can do is a really bad idea. It is possible to point out that AI is flawed without saying it can't do math or count - it just isn't true.

People need to be realistic about capabilities of models to make good decisions.

I think the urge to criticize companies for hype blends into a desire to deeply undersell what models are capable of. Cherry-picking errors is a good way of showing odd limitations to an overethusiastic Twitter crowd, but not a good way of making people aware that AI is a real factor.

Shakeel: The NYT have published a long piece by Gary Marcus on why GPT-5 shows scaling doesn't work anymore. At no point does the piece mention that GPT-5 is not a scaled up model.

[He highlights the line from the post, ‘As many now see, GPT-5 shows decisively that scaling has lost steam.’]

Tracing Woods: Gary Marcus is a great demonstration of the power of finding a niche and sticking to it

He had the foresight to set himself up as an "AI is faltering" guy well in advance of the technology advancing faster than virtually anyone predicted, and now he's the go-to

The thing I find most impressive about Gary Marcus is the way he accurately predicted AI would scale up to an IMO gold performance and then hit a wall (upcoming).

Counterpoint

Gary Marcus was not happy about these responses, and doubled down on ‘but you implied it would be scaled up, no takesies backsies.’

Gary Marcus (replying to Shakeel directly): this is intellectually dishonest, at BEST it at least as big as 4.5 which was intended as 5 which was significantly larger than 4 it is surely scaled up compared to 4 which is what i compared it to.

Shakeel: we know categorically that it is not an OOM scale up vs. GPT-4, so ... no. And there's a ton of evidence that it's smaller than 4.5.

Gary Marcus (QTing Shakeel): intellectually dishonest reply to my nytimes article.

openai implied implied repeatedly that GPT-5 was a scaled up model. it is surely scaled up relative to GPT-4.

it is possible - openAI has been closed mouth - that it is same size as 4.5 but 4.5 itself was surely scaled relative to 4, which is what i was comparing with.

amazing that after years of discussion of scaling the new reply is to claim 5 wasn’t scaled at all.

Note that if it wasn’t, contra all the PR, that’s even more reason to think that OpenAI knows damn well that is time for leaning on (neuro)symbolic tools and that scaling has reached diminishing returns.

JB: It can’t really be same in parameter count as gpt4.5 they really struggled serving that and it was much more expensive on the API to use

Gary Marcus: so a company valued at $300b that’s raised 10 of billions didn’t have the money to scale anymore even though there whole business plan was scaling? what does that tell you?

I am confused how one can claim Shakeel is being intellectually dishonest. His statement is flat out true. Yes, of course the decision not to scale

It tells me that they want to scale how much they serve the model and how much they do reasoning at inference time, and that this was the most economical solution for them at the time. JB is right that very, very obviously GPT-4.5 is a bigger model than GPT-5 and it is crazy to not realize this.

‘Superforecasters’ Have Reliably Given Unrealistically Slow AI Projections Without Reasonable Justifications

A post like this would be incomplete if I failed to address superforecasters.

I’ve been over this several times before. Superforecasters reliably offer crazy slow projections for progress, and even crazier predictions that when we do make minds smarter than ourselves, this is almost certainly not an existential risk.

My coverage of this started way back in AI #14 and AI #9 regarding existential risk estimates, including Tetlock’s response to AI 2027. One common theme in such timeline projections is predicting Nothing Ever Happens even when this particular something has already happened.

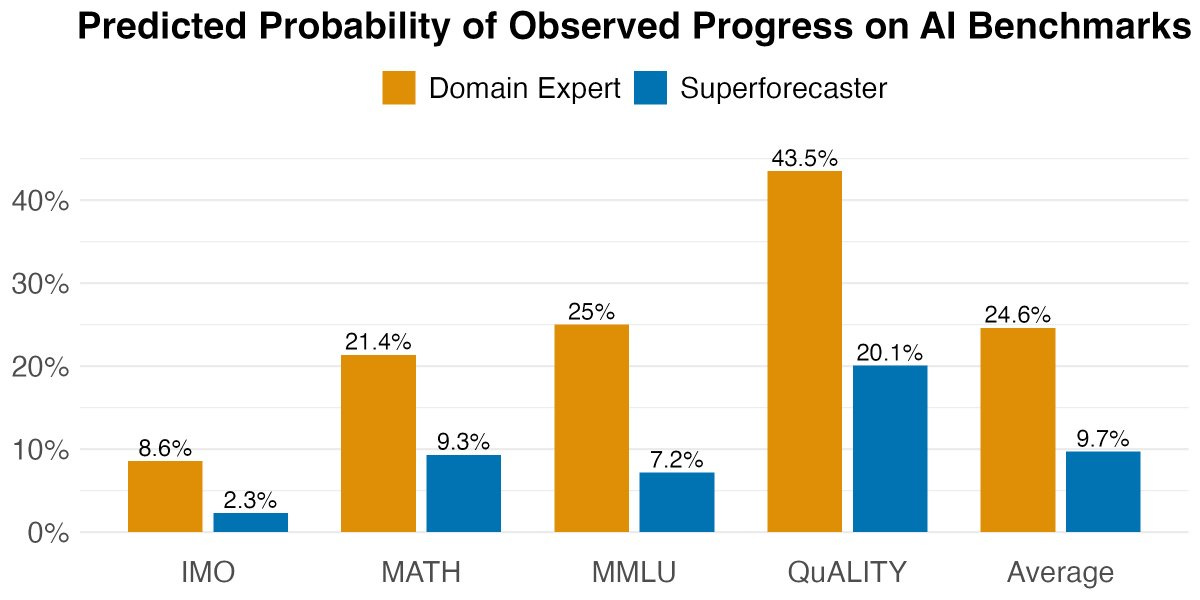

Now that the dust has settled on models getting IMO Gold in 2025, it is a good time to look back on the fact that domain experts expected less progress in math than we got, and superforecasters expected a lot less, across the board.

Forecasting Research Institute: Respondents—especially superforecasters—underestimated AI progress.

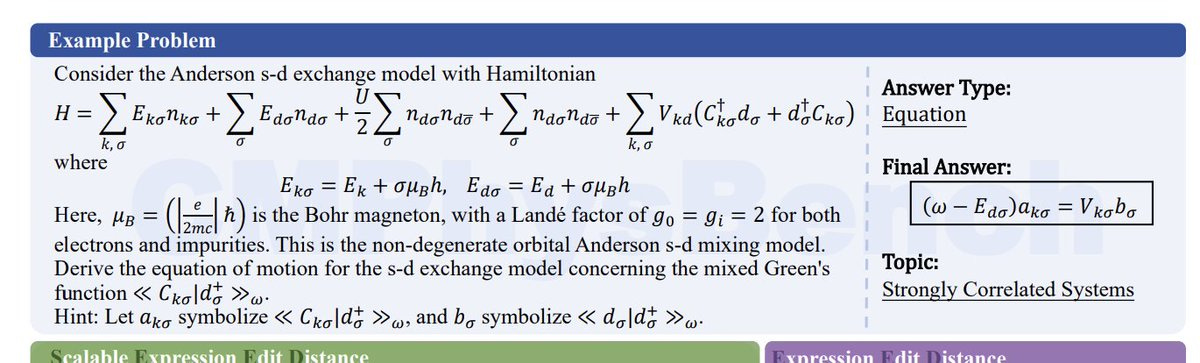

Participants predicted the state-of-the-art accuracy of ML models on the MATH, MMLU, and QuALITY benchmarks by June 2025.

Domain experts assigned probabilities of 21.4%, 25%, and 43.5% to the achieved outcomes. Superforecasters assigned even lower probabilities: just 9.3%, 7.2%, and 20.1% respectively.

The International Mathematical Olympiad results were even more surprising. AI systems achieved gold-level performance at the IMO in July 2025. Superforecasters assigned this outcome just a 2.3% probability. Domain experts put it at 8.6%.
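One way to put those percentages on a common scale is the logarithmic scoring rule, which converts the probability assigned to the realized outcome into bits of surprise. This is a minimal sketch using only the figures quoted above. The choice of log score is mine, not necessarily how the Forecasting Research Institute evaluated respondents, and the names `assigned`, `surprisal_bits` and `total_surprisal` are hypothetical.

```python
import math

# Probability each group assigned to the outcome that actually occurred,
# copied from the figures quoted above (percentages written as fractions).
assigned = {
    "MATH": {"experts": 0.214, "superforecasters": 0.093},
    "MMLU": {"experts": 0.25, "superforecasters": 0.072},
    "QuALITY": {"experts": 0.435, "superforecasters": 0.201},
    "IMO gold": {"experts": 0.086, "superforecasters": 0.023},
}


def surprisal_bits(p: float) -> float:
    """Log score: bits of surprise when an outcome given probability p occurs."""
    return -math.log2(p)


def total_surprisal(group: str) -> float:
    """Sum of surprisal over all four questions for one group.

    Assumption: adding the scores treats the questions as separate bets,
    which ignores how correlated the four outcomes are.
    """
    return sum(surprisal_bits(q[group]) for q in assigned.values())


for name, q in assigned.items():
    e = surprisal_bits(q["experts"])
    s = surprisal_bits(q["superforecasters"])
    print(f"{name:9s} experts {e:.2f} bits, superforecasters {s:.2f} bits")

gap = total_surprisal("superforecasters") - total_surprisal("experts")
print(f"Superforecasters were more surprised by {gap:.2f} bits in total")
```

On these numbers the superforecasters score worse than the domain experts on every question, and the total gap comes to roughly six bits. Four questions is a tiny sample and the outcomes are surely correlated, so treat this as an illustration of the size of the miss rather than a verdict on either group.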

Garrison Lovely: This makes Yudkowsky and Paul Christiano's predictions of IMO gold by 2025 look even more prescient (they also predicted it a ~year before this survey was conducted).

Note that even Yudkowsky and Christiano had only modest probability that the IMO would fall as early as 2025.

Andrew Critch: Yeah sorry forecasting fam, ya gotta learn some AI if you wanna forecast anything, because AI affects everything and if ya don't understand it ya forecast it wrong.

Or, as I put it back in the unrelated-to-AI post Rock is Strong:

Everybody wants a rock. It’s easy to see why. If all you want is an almost always right answer, there are places where they almost always work.

…

The security guard has an easy to interpret rock because all it has to do is say “NO ROBBERY.” The doctor’s rock is easy too, “YOU’RE FINE, GO HOME.” This one is different, and doesn’t win the competitions even if we agree it’s cheating on tail risks. It’s not a coherent world model.

Still, on the desk of the best superforecaster is a rock that says “NOTHING EVER CHANGES OR IS INTERESTING” as a reminder not to get overexcited, and to not assign super high probabilities to weird things that seem right to them.

Thus:

Daniel Eth: In 2022, superforecasters gave only a 2.3% chance of an AI system achieving an IMO gold by 2025. Yet this wound up happening. AI progress keeps being underestimated by superforecasters.

I feel like superforecasters are underperforming in AI (in this case even compared to domain experts) because two reference classes are clashing:

• steady ~exponential increase in AI

• nothing ever happens.

And for some reason, superforecasters are reaching for the second.

Hindsight is hindsight, and yes you will get a 98th percentile result 2% of the time. But I think at 2.3% for 2025 IMO Gold, you are not serious people.

That doesn’t mean that being serious people was the wise play here. The incentives might well have been to follow the ‘nothing ever happens’ rock. We still have to realize this, as we can indeed smell what the rock is cooking.

What To Expect When You’re Expecting AI Progress

A wide range of potential paths of AI progress are possible. There are a lot of data points that should impact the distribution of outcomes, and one must not overreact to any one development. One should especially not overreact to not being blown away by progress for a span of a few months. If that is your reaction, consider what baseline expectation is causing it.

My timelines for hitting various milestones, including various definitions of AGI, involve a lot of uncertainty. I think not having a lot of uncertainty is a mistake.

I especially think saying either ‘AGI almost certainly won’t happen within 5 years’ or ‘AGI almost certainly will happen within 15 years,’ would be a large mistake. There are so many different unknowns involved.

I can see treating full AGI in 2026 as effectively a Can’t Happen. I don’t think you can extend that even to 2027, although I would lay large odds against it hitting that early.

A wide range of medians seem reasonable to me. I can see defending a median as early as 2028, or one that extends to 2040 or beyond if you think it is likely that anything remotely like current approaches cannot get there. I have not put a lot of effort into picking my own number since the exact value currently lacks high value of information. If you put a gun to my head for a typical AGI definition I’d pick 2031, but with no ‘right to be surprised’ if it showed up in 2028 or didn’t show up for a while. Consider the 2031 number loosely held.

To close out, consider once again: Even if we agreed with Gary Marcus and said 8-15 years, with median 2036? Take a step back and realize how soon and crazy that is.

Turns out superforecasters are just base rate bros a little better at playing reference class tennis.

I think you're a little overly dismissive about adversarial examples. Most of the economically relevant uses involve potentially adversarial interactions, ironically with the exception of things like research: tasks which are difficult but where the universe is mostly not actively trying to work against you.

This could lead to AI becoming unexpectedly good at science, and internal tasks in large enterprises (manufacturing, internal processes, etc.), while remaining relatively limited in its economic impact wherever there is a significant surface area to the outer world, including current leading applications such as coding and marketing.

In many ways, this might be the most dangerous path things could take, as it would likely cause most people not to experience advanced AI first-hand, and the class contains many of the most dangerous vectors by which misalignment could screw us, such as nanotech, bio weapons, manufacturing, and so on.

You illustrate a great recurrent theme that people love making bombastic predictions about the future, but that when faced with the possibility of reputational ramification of being wrong, they dial back the prediction to preserve a path for retreat. Forecasting motte and bailey.