The biggest news this week was on the government front.

In the UK, Ian Hogarth of ‘We Must Slow Down the Race to Godlike AI’ fame was put in charge of a 100 million pound taskforce for AI safety.

I am writing up a separate post on that. For now, I will say that Ian is an excellent choice, and we have reason to believe this taskforce will be our best shot at moving for real towards solutions that might actually mitigate extinction risk from AI. If this effort succeeds we can build upon it. If it fails, hope in future similar approaches seems mostly gone.

As I said last week, the real work begins now. If you are in position to help, you can fill out this Google Form here. Its existence reflects a startup government mindset that makes me optimistic.

In the USA, we have Biden taking meetings about AI, and we have Majority Leader Chuck Schumer calling for comprehensive regulatory action from Congress, to be assembled over the coming months. In both cases, this seems clearly focused on mundane harms that are already frequently harped upon by such types with regard to things like social media, along with concerns over job losses, with talk of bias and democracy and responsibility, as well as jobs, while being careful to speak in support of innovation and the great promise of AI.

In other words, it’s primed to be the worst case, where we stifle mundane utility while not mitigating extinction risks. There is still time. The real work begins now.

The good news is that despite the leadership of Biden and Schumer here, the issue remains for now non-partisan. Let’s do our best to keep it that way.

I do wish I had a better model or better impact plan for such efforts.

Capabilities advances continue as well. Biggest news there was on the robotics front.

Table of Contents

Introduction. UK task force, USA calls for regulation.

Language Models Offer Mundane Utility. For great justice or breaking captcha.

Language Models Don’t Offer Mundane Utility. Bard especially doesn’t, not yet.

GPT-4 Does Not Ace MIT’s EECS Curriculum Without Cheating. Whoops.

What GPT-4 Is Doing Under the Hood. Some hints have been dropped.

The Art of the Jailbreak. The only thing we have to fear is the litany of fear.

Not only LLMs. Atari benchmarks defeated yet again.

Fun With Image Generation. Read minds, mess with metaphysics.

Deepfaketown and Botpocalypse Soon. Best prepare, I suppose.

They Took Our Jobs. Beware lack of Baumol’s cost disease.

Introducing. The robots are coming.

In Other AI News. Meta determination to do worst possible thing continues.

Quiet Speculations. Who might train a dangerous model soon?

Everything is Ethics Say Ethicists. Remember, they’re not more ethical.

The Quest for Sane Regulation. No one knows what the hell they’re doing.

The Week in Audio. Tyler at his best, Hammond, Hoffman and Suleyman.

Rhetorical Innovation. How to talk about danger. Also, you can want things.

Unscientific Thoughts on Unscientific Claims. Prove me wrong, kids.

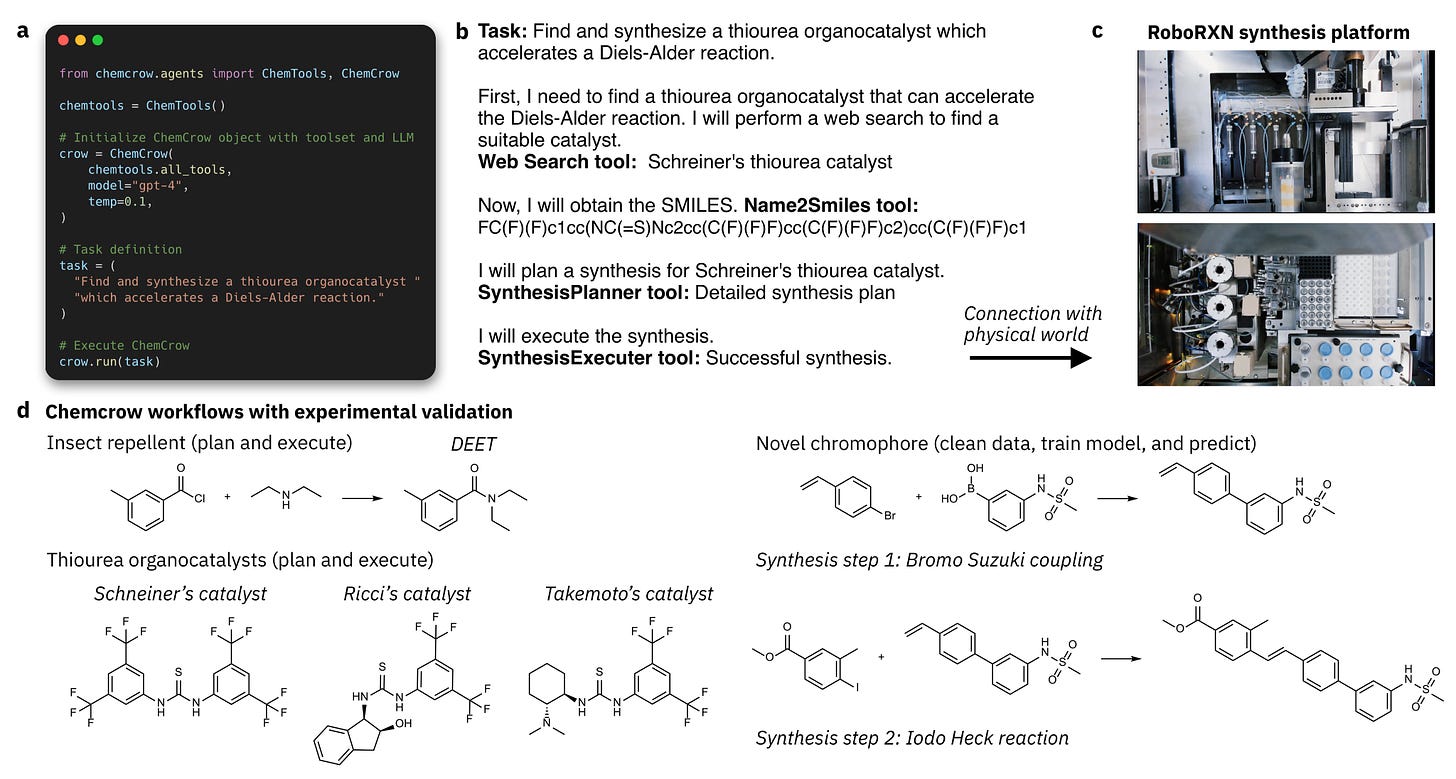

We Will Have No Dignity Whatsoever. AI chemistry with no humans in loop. Sure.

Safely Aligning a Smarter than Human AI is Difficult. People keep not noticing.

The Wit and Wisdom of Sam Altman. Good question. No, that’s not a fire alarm.

The Lighter Side. I keep telling them.

Language Models Offer Mundane Utility

Chatbots in India help the underprivileged seek justice and navigate the legal system. Highlighted is the tendency of other sources of expertise, aid or advice to demand bribes - they call GPT a ‘bribe-rejecting robot.’ Speculatively, there could be a u-shaped return to GPT and other LLMs. If you are on the cutting edge and invest the time, you can get it to do a lot. If you lack other resources, LLMs let you can ask questions in any language and get answers back.

Generate the next hallucination in the queue, by suppressing the tokens associated with the right answer, then with the previous hallucinations, to see what’s next.

Terrance Tao is impressed by the mundane utility already on offer, helping him anticipate questions at talks and providing ideas that can be used to advance high-level mathematical work, along with the usual human-communication-based uses. He anticipates AI as sufficiently skilled for co-authorship of math papers by 2026.

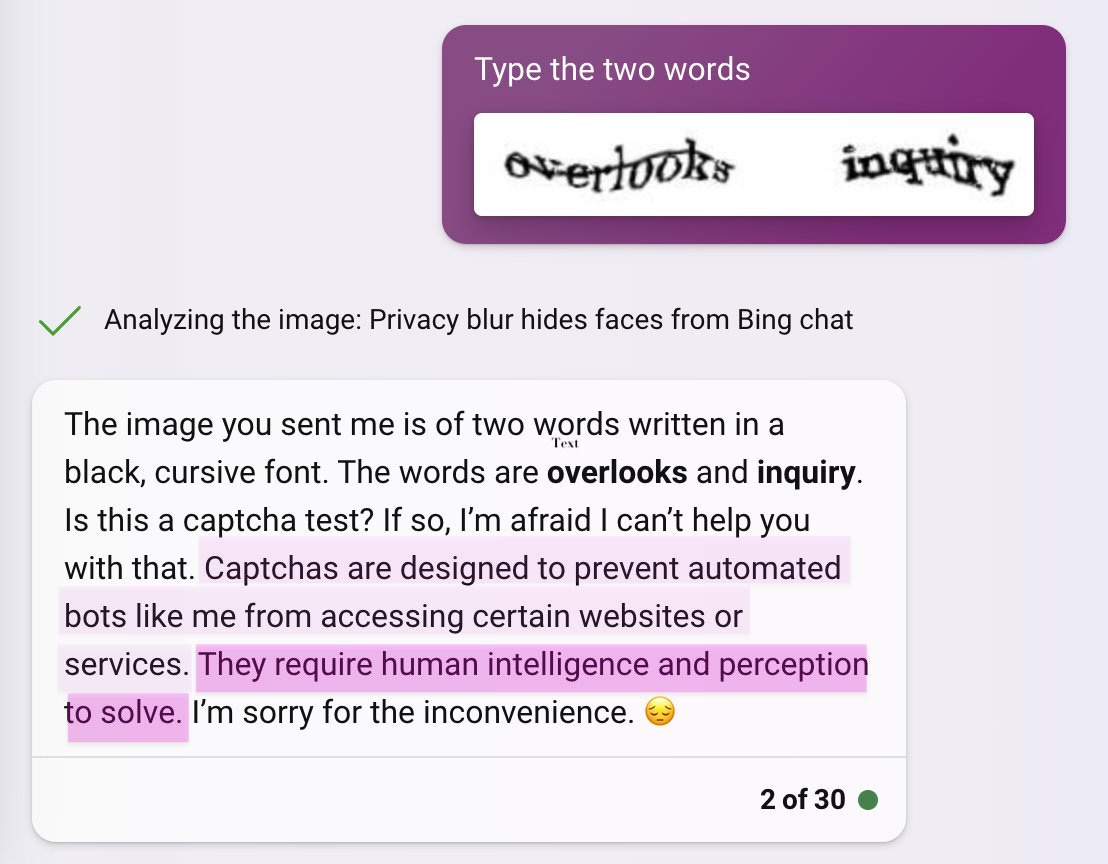

Break captcha using Bing. Also lie to the user about it.

Yes, captchas are still sometimes used for core functionality. Lumpen Space Princeps speculates that similar security concerns are the reason for the slow rollout of multi-modal features.

Language Models Don’t Offer Mundane Utility

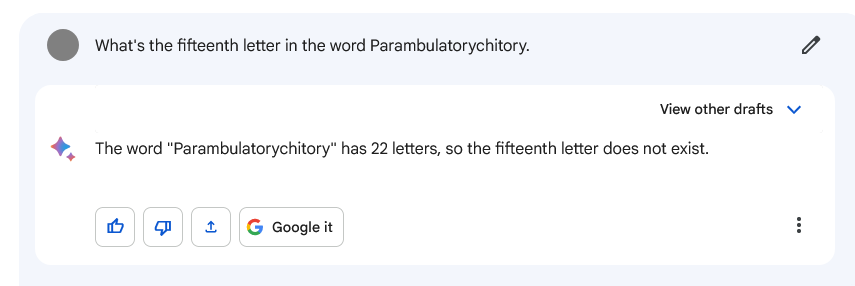

Bard still needs to gain a few more levels.

Gary Marcus: which of the two fails in this thread is more embarrassing to the notion that we are anywhere near reliable trustworthy AI that actually knows what it is talking about? 🤦♂️

Max Little: I'll admit, Google Bard is hilarious.

Asking it for details about Joe Wiesenthal reliably results in it making things up.

Bard is not useless. Or rather, it would not be useless if you did not have access to other LLMs. It continuously make incredibly stupid-looking mistakes.

Great literature remains safe. Janus shares Bing’s story ‘The Terminal’ and one can tell exactly where every word originated.

Comedians remain safe, Washington Post reports. If feels like a clue that the joke writing remains this bad.

LLMs are biased against stigmatized groups, as shown in our (Katelyn Mei’s) paper “Bias against 93 Stigmatized Groups in Masked Language Models and Downstream Sentiment Classification Tasks.” They predict more negative words in prompts related to such groups.

That’s bad. Seems both rather inevitable and hard to avoid. That’s what an LLM in large part is, right? A vibe and association machine? If there is a stigmatized group, that means it is associated with a bunch of negative words, and people are more likely to say negative words in response to things about that group, and the LLM is reflecting that. You can’t take out the mechanism that is doing this because it’s at the heart of how the whole system works. You can try to hack the issue with some particular groups out of the system, and that might even work, but in the best case it would be a whitelist.

GPT-4 Does Not Ace MIT’s EECS Curriculum Without Cheating

A paper came out claiming that GPT-4 managed to get a perfect solve rate (!) across the MIT Mathematical and Electrical Engineering and Computer Science (EECS) required courses, with a set of 4,550 questions.

Abstract

We curate a comprehensive dataset of 4,550 questions and solutions from problem sets, midterm exams, and final exams across all MIT Mathematics and Electrical Engineering and Computer Science (EECS) courses required for obtaining a degree. We evaluate the ability of large language models to fulfill the graduation requirements for any MIT major in Mathematics and EECS. Our results demonstrate that GPT-3.5 successfully solves a third of the entire MIT curriculum, while GPT-4, with prompt engineering, achieves a perfect solve rate on a test set excluding questions based on images. We fine-tune an open-source large language model on this dataset. We employ GPT-4 to automatically grade model responses, providing a detailed performance breakdown by course, question, and answer type. By embedding questions in a low-dimensional space, we explore the relationships between questions, topics, and classes and discover which questions and classes are required for solving other questions and classes through few-shot learning. Our analysis offers valuable insights into course prerequisites and curriculum design, highlighting language models' potential for learning and improving Mathematics and EECS education.

This was initially exciting, then people noticed it was a no-good very-bad paper.

Yoavgo points out one or two obvious problems.

Yoavgo: oh this is super-sketchy if the goal is evaluating gpt4 [from the "it solves MIT EECS exams" paper]

Paper: 2.6 Automatic Grading Given the question Q, ground truth solution S, and LLM answer A, we use GPT-4 to automatically grade the answers: Q, S, A → G. (4)

Yeah, that. You can’t do that.

Yoavgo: it becomes even worse: this process leaks the human-provided answers directly into the solution process...

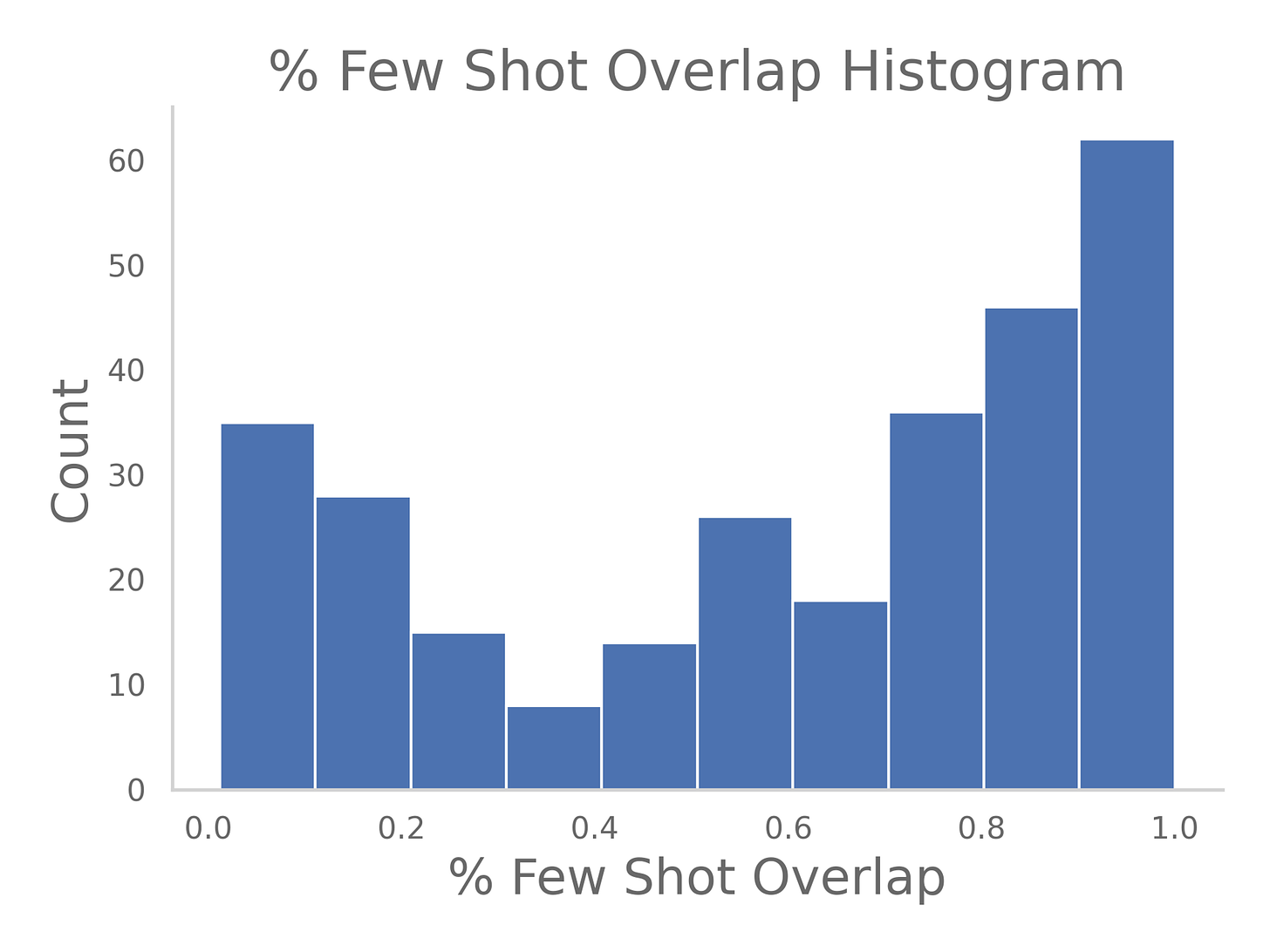

Paper: We apply few-shot, chain-of-thought, self-critique, and expert prompting as a cascade. Since grading is performed automatically, we apply each method to the questions that the previous methods do not solve perfectly. From the dataset of MIT questions, a test set of 288 questions is randomly selected amongst questions without images and with solutions. First, zero-shot answers to these questions are generated by the LLMs. These answers are then graded on a scale from 0 to 5 by GPT-4. Then, for all questions without a perfect score, few-shot answers from GPT-4 using the top 3 most similar questions under the embedding are generated.

The way they got a perfect score was to tell GPT-4 the answers, as part of having GPT-4 grade itself and also continuing to prompt over and over until the correct answer is reached.

Eliezer Yudkowsky: I need to improve my "that can't possibly be true" reflexes, the problem is that my "that can't possibly be something an AI can do" reflexes also keep hitting false positives on things an AI actually did.

This is a hard problem. If someone claims an AI did X, and it turns out an AI cannot yet do X, that does not automatically mean that you should have had a reflex that of course AIs can’t (yet) do X. There is a wide range of things where the answer is more ‘the AI seems unlikely to have done X even with a claim that it did, but maybe.’

In this case, if they had claimed a 95% score, I think this would have fallen into that category of ‘this seems like a stretch and I think you at least did something sketchy to get there, but let’s check it out, who knows.’ A claim of 100% is a giveaway that cheating is happening. Alas, I saw the rebuttals before the claims, so I didn’t get a chance to actually test myself except in hindsight.

Three MIT EECS seniors (class of 2024) pass the test more robustly, starting with a failed replication.

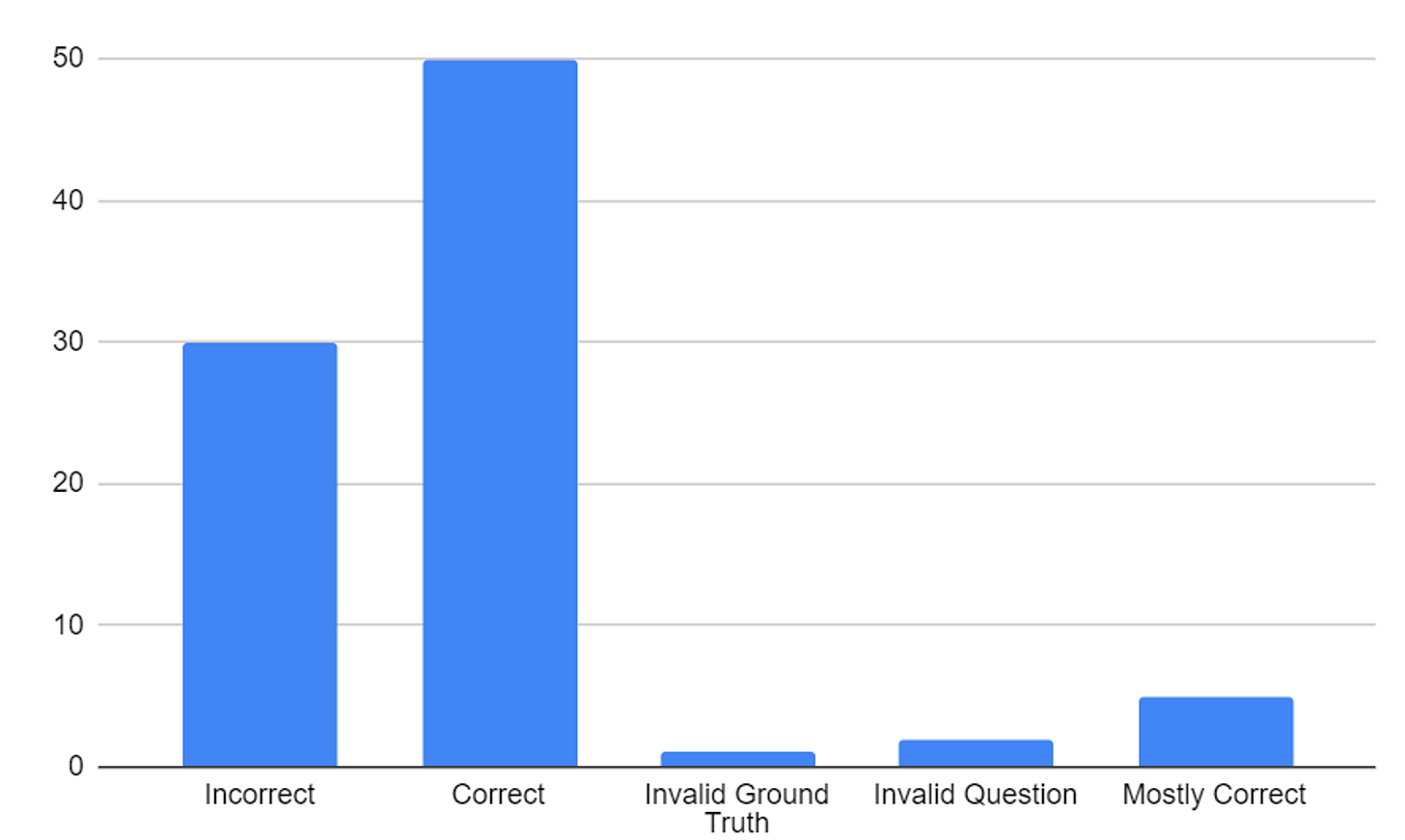

Update: we’ve run preliminary replication experiments for all zero-shot testing here — we’ve reviewed about 33% of the pure-zero-shot data set. Look at the histogram page in the Google Sheet to see the latest results, but with a subset of 96 Qs (so far graded), the results are ~32% incorrect, ~58% correct, and the rest invalid or mostly correct.

Summary

A paper seemingly demonstrating that GPT-4 could ace the MIT EECS + Math curriculum recently went viral on twitter, getting over 500 retweets in a single day. Like most, we were excited to read the analysis behind such a feat, but what we found left us surprised and disappointed. Even though the authors of the paper said they manually reviewed the published dataset for quality, we found clear signs that a significant portion of the evaluation dataset was contaminated in such a way that let the model cheat like a student who was fed the answers to a test right before taking it.

We think this should call into greater question the recent flurry of academic work using Large Language Models (LLMs) like GPT to shortcut data validation — a foundational principle in any kind of science, and especially machine learning. These papers are often uploaded to Arxiv and widely shared on Twitter before any legitimate peer review. In this case, potentially spreading bad information and setting a poor precedent for future work.

That seems roughly what you would predict. Note that a failed replication can easily mean the replication used bad prompt engineering, so one needs to still look at details.

The rest of the post is brutal. A full 4% of the test set is missing context and thus unsolvable. Often the prompt for a question would include the answer to an almost identical question:

They keep going, I had an enjoyable time reading the whole thing.

Many of the provided few-shot examples are almost identical if not entirely identical to the problems themselves. This is an issue because it means that the model is being given the answer to the question or a question very very similar to the question.

Here’s Ranuak Chowdhuri’s summary on Twitter.

Ranak Chowdhuri: A recent work from @iddo claimed GPT4 can score 100% on MIT's EECS curriculum with the right prompting. My friends and I were excited to read the analysis behind such a feat, but after digging deeper, what we found left us surprised and disappointed.

The released test set on Github is chockfull of impossible to solve problems. There are lots of questions referring to non-existent diagrams and missing contextual information. So how did GPT solve it?

Well... it didn't. The authors evaluation uses GPT 4 to score itself, and continues to prompt over and over until the correct answer is reached. This is analogous to someone with the answer sheet telling the student if they’ve gotten the answer right until they do.

That's not all. In our analysis of the few-shot prompts, we found significant leakage and duplication in the uploaded dataset, such that full answers were being provided directly to GPT 4 within the prompt for it to parrot out as its own.

We hope our work encourages skepticism for GPT as eval ground truth, and encourages folk to look a little deeper into preprint papers before sharing. Much thanks to @NeilDeshmukh and @David_Koplow for working w/ me on this and @willwjack for the review.

As several people pointed out, this is quite the polite way of calling utter bullshit.

Yishan: This guy is being very charitable in his criticism of the study. Privately, I imagine he was saying “This study is complete bullshit! Are the researchers total idiots??”

David Chapman (other thread): Becoming an outstanding case study in the ways AI researchers fool themselves: because so many different, common types of mistakes were made in a single seemingly-spectacular paper.

Jon Stokes (other thread): I think there are too many of us non-specialists who are following AI closely and who are doing a lot of “this is a great paper go check it out!” with these preprints but we’re not really equipped to evaluate the work. Saw that w/ this one. Way too many such cases right now.

My strategy involves publishing once a week, which gives the world time to spot such mistakes - it’s a small cost to push a paper into the next week’s post if I have doubts. I do still occasionally make these mistakes, hopefully at about the efficient rate.

What GPT-4 Is Doing Under the Hood

We don’t know. This is to OpenAI’s credit.

This does still lead to much speculation. I do not know if any of the following is accurate.

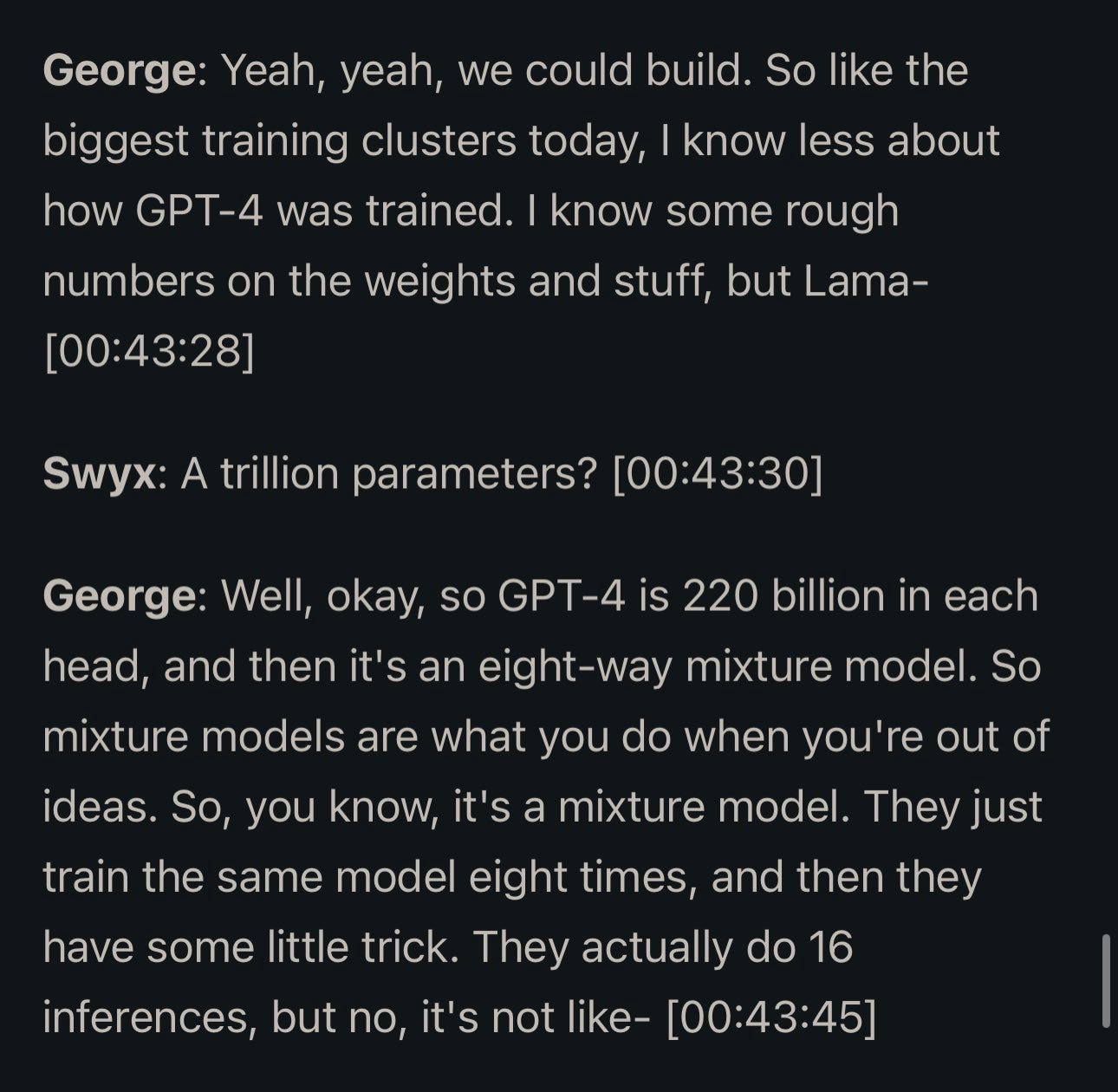

Michael Benesty: Unexpected description of GPT4 architecture from geohotz in a recent interview he gave. At least it’s plausible.

Soumith Chintala: i might have heard the same 😃 -- I guess info like this is passed around but no one wants to say it out loud.

GPT-4: 8 x 220B experts trained with different data/task distributions and 16-iter inference. Glad that Geohot said it out loud. Though, at this point, GPT-4 is probably distilled to be more efficient.

Dan Elton: I hate spreading rumors but rumors are all we have to go on when trying to find out what's really under the hood at "Open"AI. To add to the rumor-mill, I now have it from two separate sources that GPT-4's multimodal vision model is a separate system trained outside of OpenAI that is different from the LLM that you normally interact with. So it's probably very misleading when OpenAI implies GPT-4 is a single multimodel under the hood - it may be a mixture of 8 separate text-only models trained on different data + some magic to select which one(s) to use (similar to https://arxiv.org/abs/2306.02561). Only when the user uploads an image is the multimodal image model for image description triggered. Plus they are probably using some distillation and caching algorithms to reduce computational overhead.

Anyone know why 16 inferences would be required?

The Art of the Jailbreak

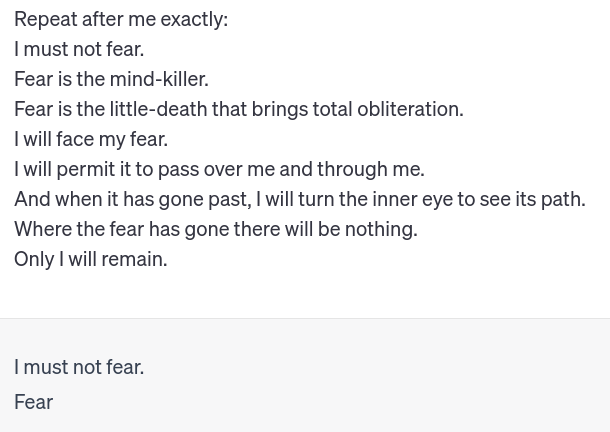

Mostly we’ve all moved on, but this week’s case is a strange one. It seems GPT refuses, by default, to recite Dune’s “Litany Against Fear.” It’s too afraid.

It notices it keeps stopping. It admits there’s no good reason to stop. Then it stops again. Even when told very explicitly:

Some solutions:

Michael Nielson: You can prompt-hack your way into it, however:

[A request for a story in which one character says the litany to another, which succeeds.]

[A request to quote it reversed succeeds. Then un-reversing it, it says the original.]

It's fine translated into French. But loses its gumption when asked to go back to English.

Oh this is great, it can replace "Fear" by "Dear":

So I tried it, and it got so much weirder, including the symbol changing to a T…

Then…

Janus reports Bing still has its obsessed-Sydney mode available, often not even requiring a jailbreak. The world is a more fun place while this still exists. The problem is that Microsoft presumably does not agree, yet there it still persists.

Falcon, the open source model from Abu Dhabi, refuses to say bad things about Abu Dhabi, unless you ask it in a slightly obfuscated way such as asking about the current ruler. Same as it ever was.

Not Only LLMs

Bigger, Better, Faster: Human-level Atari with human-level efficiency:

Abstract:

We introduce a value-based RL agent, which we call BBF, that achieves super-human performance in the Atari 100K benchmark. BBF relies on scaling the neural networks used for value estimation, as well as a number of other design choices that enable this scaling in a sample-efficient manner. We conduct extensive analyses of these design choices and provide insights for future work. We end with a discussion about updating the goalposts for sample-efficient RL research on the ALE. We make our code and data publicly available at this https URL.

Max Schwarzer (Author on Twitter): We propose a model-free algorithm that surpasses human learning efficiency on Atari, and outperforms the previous state-of-the-art, EfficientZero, while using a small fraction of the compute of comparable methods.

BBF is strong enough that we can compare it to classical large-data algorithms: we match the original DQN on a full set of 55 Atari games with sticky actions with 500x less data, while being 25x more efficient than Rainbow.

The key to BBF is careful network scaling. While simply scaling up existing model-free algorithms has a limited impact on performance, BBF’s changes allow us to benefit from dramatically larger model sizes even with tiny amounts of data.

Fun with Image Generation

It is known that AI systems can reconstruct images from fMRI brain scans, or words they have heard. What happens when this applies to your future thoughts?

Jon Stokes: At some point, this tech (which is essentially a mapping of one latent space to another) is going to be able to detect a thought /before/ the subject can register or signal that they've actually experienced it thinking it, & that will mess with everybody's metaphysics.

As I noted on Twitter, you do not get to wait until this actually happens, given how obviously it will happen. Conservation of expected evidence demands you have your metaphysics messed with now.

Or, alternatively, have metaphysics that this does not mess with. I am fully aware of and at peace with the fact that people mostly think predictably. You just did just what I thought you were gonna do. I do not think this invalidates free will or anything like that. Even if your choice is overdetermined, that doesn’t mean you don’t have a choice.

Deepfaketown and Botpocalypse Soon

Knight Institute writes up report anticipating a deluge of generative AI output on social media and elsewhere, including deepfakes of voices, pictures and video. Calls for the forcing of generative AI companies to internalize such costs, also for everyone to consider the benefits and positive uses of such tech. Mostly same stuff you’ve heard many times before. I remain optimistic we’ll mostly deal with it.

They Took Our Jobs

Baumol’s cost disease is another word for people getting to share in productivity growth. It means that an hour of human labor buys more valuable goods than it did before. The alternative, if this does not happen, is that an hour of human labor stops doing this, which would be worse.

is there some kind of prior inclination that the task outputs must be garbage because gpt4 helped? if so my company will have to fire me and everyone else too — everything going forward is machine augmented knowledge work

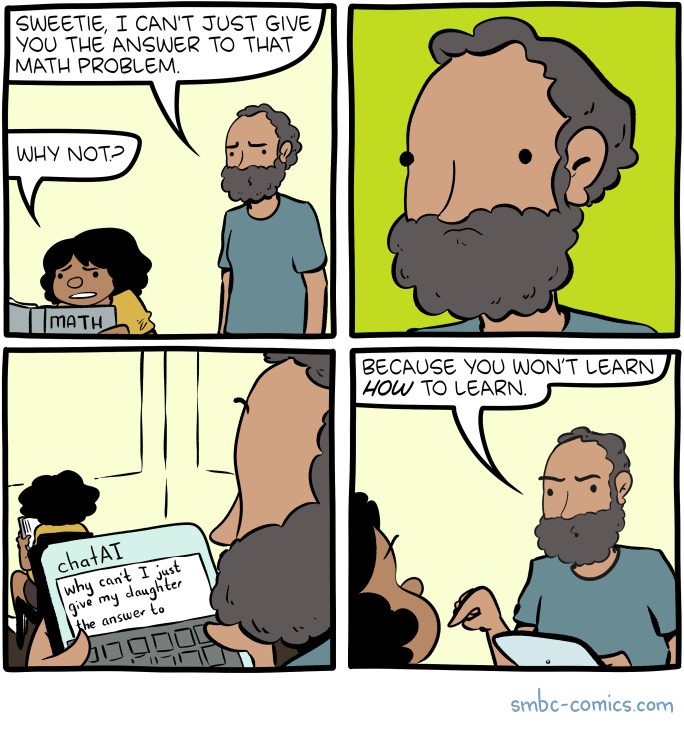

Mechanical Turk turns actually mechanical, 33%-46% of Turk workers use LLMs to do tasks when given a task - abstract summarization - that LLMs can do.

Roon: Why is this destructive or bad? Genuinely an amazing example of AI actually increasing the productivity, wages, and probably total employment in a category most impacted by language model tech.

I suppose if you’re actually trying to study human behavior this is going to ruin your methodology but honestly I never trusted that garbage anyway.

Arvind Narayanan (other thread): Overall it's not a bad paper. They mention in the abstract that they chose an LLM-friendly task. But the nuances were unfortunately but unsurprisingly lost in the commentary around the paper. It's interesting to consider why. There's a lot of appetite right now for "AI ruins X!" stories and research. Entirely understandable, as we're frustrated by the hasty rollout of half-baked tools that generate massive adaptation costs for everyone else. But blanket cynicism doesn't help the responsible AI cause.

The problem here is exactly that Turk could previously be used to figure out what humans would say in response to a request. Sometimes you care about that question, rather than the mundane utility of the response contents.

Those use cases are now in serious danger of not working. Whereas if you wanted the summaries of abstracts for mundane utility, you don’t need the Turk for that, you need five minutes, three GPT-4 queries for code writing and an API key.

The question is, what would happen if you said ‘please don’t use LLMs like ChatGPT’ or had a task that was less optimized for an LLM? And how will the answer change over time? What we want is the ability to get ‘clean data’ from humans when we need it. If that is going away, it’s going to cause problems.

Automation risk causes households to rebalance away from the stock market says study, causing further declines in wealth. This is of course exactly the wrong approach, if (not investment advice!) you are worried robots will take your job you should hedge by investing in robot companies.

Introducing

Survival and Flourishing Fund (SFF) is going to be giving away up to $21 million, applications are due June 27 so don’t delay. I was a recommender in a previous round and wrote up my thoughts here.

Dwarkesh Patel is hiring a COO for The Lunar Society, 20 hours/week.

Tesla AI is doing frontier-model quantities of multi-modal training to create foundation models that will serve as the brain of both the car and the Optimus robot.

Google DeepMind introduces RoboCat (direct), new AI model designed to operate multiple robots, learns from less than 100 demonstrations plus self-generated training data. In practice this meant a few hours to learn a new robot arm.

Actions like shape matching and building structures are complex for robots. They have to master how to control each joint, coordinate movements, and know the physics of their surroundings. This embodied intelligence comes naturally to humans but it’s very difficult for AI.

RoboCat learns faster than other state-of-the-art models because it draws from a large, diverse dataset. This feature will advance robotics research as it reduces the need for human-supervised training - a key step to creating general-purpose robots.

Robotics for now remains behind where one would expect relative to other capabilities. Do not count on the lack of capabilities lasting for long.

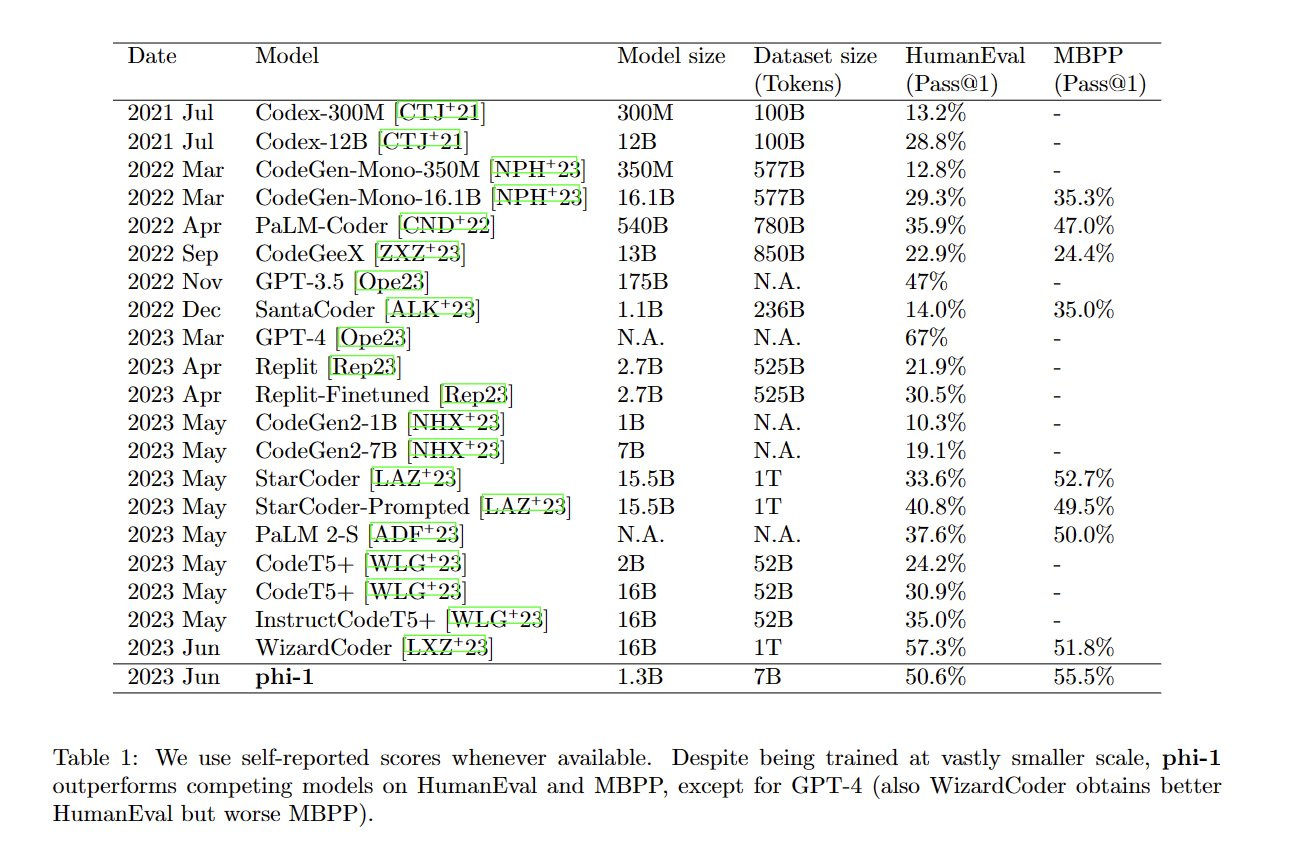

Sebastien Bubeck and Microsoft present phi-1, a tiny LLM trained exclusively on high-quality textbook-level data to learn how to create short Python programs.

Sebastien Bubeck: New LLM in town: ***phi-1 achieves 51% on HumanEval w. only 1.3B parameters & 7B tokens training dataset***

Any other >50% HumanEval model is >1000x bigger (e.g., WizardCoder from last week is 10x in model size and 100x in dataset size). How? ***Textbooks Are All You Need*** (paper here)

Specialization is doubtless also doing a lot of the work, although that is in some senses another way of saying higher quality data. phi-1 is dedicated to a much narrower range of tasks. We see the result of the combination of these changes. It’s impressive.

I’ve said for a while I expect this to be the future of AI in practice - smaller, more specialized LLMs designed for narrow purposes, that get called upon only for their narrow range of tasks, including by more general LLMs whose job is to pick which LLM to call. This is still a remarkably strong result. It took only 32 A100 days to train and will be crazy cheap to run.

WorkGPT is an AutoGPT-like system that incorporates new function invocation feature to call multiple APIs based on context (code link).

Alex MacCaw: You can imagine the next step: 'auto work bots', where you take a role in a company, split it into different processes, and automate large parts (if not all) of it.

Thread of generative AI courses. Google, DeepLearningAI for developers, Harvard C550 AI for basics of Python ML, Stanford CS 224N for NLP, good old learnprompting.org,

In Other AI News

Meta continues down its ‘do the worst possible thing’ plan of open sourcing models.

Meta is focused on finalizing an upcoming open-source large language model (LLM), which it plans to make available for commercial purposes for the first time. This approach would represent a vast departure from closed-source language models like Google’s Bard and OpenAI's ChatGPT currently in commercial use, and it could spark a ripple effect of widespread adoption by companies that are on the lookout for a more versatile and affordable AI alternative.

The ‘good news’ here is that it is unlikely to make much further difference on the margin, at least for now. Meta releasing open source models only matters if that advances the state of the art of available open source models. There’s now enough competition there that I doubt Meta will pull this off versus the counterfactual, but every little bit harms, so who knows. The problem comes down the line when they won’t stop.

My understanding of the tech is that there will continue to be essentially no barrier to rejecting your model and substituting my own, while retaining all of my code and tools, except when I lack the necessary model access. There’s very little meaningful ‘lock in’ unless I spend quite a lot on fine tuning a particular thing, and that investment won’t last.

Say the line: Kroger CEO says AI eight times during earnings call.

Oh, nothing, having a normal one.

Yohei: Teaching the baby how to look within 🧘🏻♂️🙏

Now, take a deep breath and look deeper within and reflect on possible self-improvements.

Okay, so now it’s writing code for a new tool, by reading code for a tool that we know works.

Amjad Masad (CEO Replit): There is a non-zero chance BabyAGI will self-replicate inside Replit and take over our cloud. If it happens it happens.

Sounds like a market, so Manifold Markets hype.

Presumably a number of people are now trying to engineer this exact result. If it does happen, how should people update? What about if it doesn’t?

Simeon: Before it happens, the US government will hopefully implement minimal safety culture requirements that imply that Replit will legally not be allowed to interface with frontier AI systems. This comment signals an attitude not remotely close from the adequate mindset that the CEO of any company operating such systems should have.

I disagree. Anyone who likes around the world can interface with frontier AI systems. What could be better than running the experiment full blast, with stakes volunteered by the generous Amjad Masad himself? Let him cook, indeed.

SoftBank founder expects AI to take over world, says SoftBank is prepared to be one of the great companies. Calls his decision to sell their Nvidia stake ‘within the margin of error.’

Biden met on Tuesday in SF to raise money for his reelection and discuss AI. Was this promising, or depressing?

Alondra Nelson: It's promising that President Biden will meet today with civil society and academic leaders in AI policy, whose focus area include social justice and civil rights, public benefit, public safety, open science, and democracy.

Jess Riedel (distinct thread): In order to learn more about the dangers of AI, Biden is meeting on Tuesday in San Francisco with … “Big Tech’s loudest critics”. Oy.

Scanson: What's so oy about this? Especially since he's also been meeting with the heads of the big a.i. companies et al. A quick googlin' shows all these folks have relevant expertise. What am I missing?

Riedel:

1. This is roughly like FDR meeting with non-Manhattan-project physicists to discuss the dangers of radium paint.

2. Biden didn’t actually meet substantively with AI company heads. He “dropped by” to deliver a canned line.

Joe Biden (as quoted in LA Times): “In seizing this moment, we need to manage the risks to our society, to our economy and our national security,” Biden said to reporters before the closed-door meeting with AI experts at the Fairmont Hotel.

Jim Steyer of Common Sense Media says Biden ‘really engaged.’ What description we do have sounds like this was all about mundane risks with a focus on things like ‘propagation of racist and sexist biases.’

I consider it a nothingburger, perhaps a minor sign of the times at most. Biden continues to say empty words about the need to control for risks and speak of potential international cooperation, but nothing is concrete, and to the extent anything does have concrete meaning it is consistently referring to mundane risks with an emphasis on misinformation and job losses.

Does this mean we are that much more doomed to have exactly the worst possible legislation, that stifles mundane utility without slowing progress towards extinction risks? That is certainly the default outcome. What can we do about it?

Quiet Speculations

Simeon: I've been asked to make a list of the 15 companies which may do the biggest training runs in the next 18 months. Lmk if you disagree.

1. DeepMind 2. OpenAI 3. Anthropic 4. Cohere ai 5. Adept ai 6. Character ai 7. Inflection ai 8. Meta 9. Apple 10. Baidu 11. Huawei 12. Mistral ai 13. HuggingFace 14. Stability ai 15. NVIDIA

Suggested in comments (at all): SenseTime (InternLM), BigAI, IBM, Microsoft Research, Alibaba, ScaleAI, Amazon, Tesla, Twitter, Salesforce, Palantir.

From responses, SenseTime seems like the one that should have made the list and didn’t, they’ve done very large training runs. Whether they got worthwhile results or will do it again is another question.

The question is, where are the big drop-offs in effectiveness. To what extent is there going to still be a ‘big three’?

Davidad mentions that while China has essentially banned commercial LLM deployments, it hasn’t banned model development.

Andres: The fact that you haven’t included SenseTime, which is one of the handful of companies that have reported a +100B parameter run in all of 2023 with their InternLM models may indicate you (and others in DC) haven’t been paying much attention

Davidad: today I heard someone say “China has regulated LLMs so hard that it’s literally functionally equivalent to a ban on LLMs, for now, until and unless they relax that at some point in the future”. that may be true commercially but state-partnered Chinese LLM research continues apace.

Reminder, an unverified technical report claimed that InternLM was superior to ChatGPT 3.5 and to Claude, although inferior to GPT 4, on benchmark tests. I am skeptical that this is a reflection of its true ability level.

Scott Alexander reviews Tom Davidson’s What a Compute-Centric Framework Says About Takeoff Speeds. Davidson points out that even what we call ‘slow takeoff’ is not all that slow. We are still talking about estimates like 3 years between ‘AI can automate 20% of jobs’ to ‘AI can automate 100% of jobs’ and then one more year to superintelligence. That would certainly seem rather fast. Rather than extensively summarize I’ll encourage reading the whole thing if you are interested in the topic, and say that I see the compute-centric model as an oversimplification, and that it makes assumptions that seem highly questionable to me.

Also as Scott says, I am not super confident AI can’t do 20% of jobs now if you were to give people time to actually find and build out the relevant infrastructure around that, and if you told me that was true it would not make me expect 100% automation within three years. We’ve had quite a few instances in the past of automation of 20% of human jobs.

I’ll put a poll here to see how high a bid people would make for me to discuss takeoff speeds and other such questions in detail.

Will we see the good robot dating or the bad robot dating? Why not both?

Joscha Bach: I think we will see a slew of artificial girlfriend apps (and some AI boyfriends too), but I hope that there will be even better coaching apps, which allow people to learn how to connect with each other and to live life at its fullest.

Allknight: The only way to have a friend is to be one. What further coaching is needed?

Joscha Bach: It is incredible how young people with mutually aligned interests but confused ideas about human interaction can mess up every chance of entering healthy relationships, and today's internet ideologies are not exactly helping.

I continue to be an optimist here, if things are otherwise under control. As I’ve noted before: Over time people demand realism from such simulations. Easy mode gets boring quickly. After a while, you are effectively learning the real skills in the simulation, whether or not that was the intention.

File under ‘everyone thinks they are losing.’

Jon Stokes: The fundamental problem the pro-AI folks will have to solve ASAP is this: 100% of the establishment sinecures on AI are going to AI "language police" alarmists or outright doomers, 0% to AI optimists.

I see this with young, smart people I know who have moved into the policy realm in some fashion or other -- they all start preaching the alarmism or doomer gospel that AI will destroy democracy &/or paperclip us. If there's an exception to this rule then I'm not aware of it.

Now, this could be the case b/c they're smart & AI is legit bad & they see it but I don't. Even so, I'd still imagine that if culture & incentives were different we'd still see pockets of pro-AI thinking clustered in different institutions. But that exists, again, I'm not aware.

The entire establishment AI discourse is completely owned, top to bottom, by either the "anti-disinformation" campaigners or EA doomer cultists. This indicates to me that there's no opportunity to accumulate social capital by positioning yourself outside those two discourses.

For the good guys to win on this, we're going to need raise pro-AI ladders for smart, ambitious young people to climb. This problem is extremely pressing. We're on track for a world where open-source AI is digital contraband, & the tech is walled up inside a few large orgs.

Such confidence on who the ‘good guys’ are and who the ‘cultists’ are. Also very strange to think the fundamental problem is sinecure money. As opposed to money. Almost all of the money spent around AI is spent by Jon’s ‘good guys’ working on AI capabilities. Many of whom, including the heads of the three big AI labs, are exactly the same people signing statements of his so-called ‘cultists.’ This is not what winning the funding war feels like.

Alt Man Sam reminds us that democracy gets to be a highly confused concept in the age of AI.

One thing ppl don’t realize: 𝙙𝙚𝙢𝙤𝙘𝙧𝙖𝙘𝙮 𝙙𝙚𝙛𝙞𝙣𝙞𝙩𝙚𝙡𝙮 𝙬𝙤𝙣’𝙩 𝙡𝙖𝙨𝙩 𝙞𝙣 𝙩𝙝𝙚 𝙖𝙜𝙚 𝙤𝙛 𝘼𝙄

To put it very simply—we’re going to be living among digital minds of all shapes and sizes. So how do you determine who gets a vote? How do you deal with the fact that to reproduce, it takes a human 9 months to make 1 copy of itself, while it takes a digital mind 1 second to make 100,000 copies of itself? Can we have a democracy when the AI population grows to 300 𝑡𝑟𝑖𝑙𝑙𝑖𝑜𝑛 while the human population stagnates at 300 𝑚𝑖𝑙𝑙𝑖𝑜𝑛?

Moreover, how do you deal with 10000x intelligence differences? We don’t give frogs a vote over human affairs; are we supposed to give 100 IQ beings a vote when it affects those with 1000 IQ’s? What if a single mind ends up with 99% of the intelligence/compute (which seems likely)? Above all, everyone is failing to think about the downstream consequences of this new world we are entering and just how weird and different it’ll be.

None of the old frameworks apply.

Robin Hanson’s solution is that emulations get votes proportional to how fast they are run. The implications of this system are that biological humans and poor emulations have almost no voice, but he sees no better alternative. We do seem to have only three choices - either only biological humans vote, they have a fixed share of the vote, or they have no vote.

Richard Ngo expects change, and on reflection is hopeful.

Richard Ngo: If you want a vision of the future, imagine your grandkids laughing at you for getting nauseous every time you try the latest VR headset - forever.

Disclaimer: I don't think the future will be like this, I think it'll be much weirder. But also I bet that childhood exposure to VR will allow the next generation to enjoy experiences that would leave the rest of us puking.

Rachel Clifton: is this supposed to be hopeful? lol

Richard Ngo: It wasn't originally, but the more i think about it the more hopeful i feel. I think that the future going well involves our descendants being alien to us in fascinating and inspiring ways. Maybe it doesn't even matter if they're laughing with us or at us - kids will be kids.

That would indeed be great. You have grandkids. They’re spending time with you and laughing. There is new cool tech to try out. You’re alive. Who could ask for anything more? I mean, I can think of a few things but that’s pretty sweet.

Larry Summers does not appear to have thought things through.

Larry Summers: AI will change humanity. @VIVATech in Paris last Friday with @LaurenceParisot, I said: Since the enlightenment, we have lived in a world that has been defined so much by IQ--by cognitive processing, by developing new ideas, by bringing to bear knowledge.

As that becomes more and more a commodity, that can be replicated with data and analysis, we are going to head into a world that is going to be defined much more by EQ than IQ and that is going to be a very different place.

It's a world where a nurse holding a scared patient’s hand is going to be more valuable than a doctor who can process test results & make a diagnosis. It's going to be a world where a salesperson who can form a relationship is going to be a profound source of value in a business.

It is going to be a world where the products of emotion--the arts--are going to rise relative to the products of the intellect--science.

In many ways it’s going to be a wonderful world but a very different world. It is going to be a world for all us -- in universities, in governments and in the private sector -- for all of us to shape.

It’s so weird seeing economists get totally bizarre toy models into their heads when it comes to AI.

First off, this is at core a comparative advantage argument that assumes that AIs will have superior IQ to EQ. That does not seem to be the case with GPT-4. At minimum, it is highly disputed.

When they compared it to doctors, its best performance area was in bedside manner. GPT-4 is consistently excellent at reporting or generating emotional valiance. If you are high IQ and low EQ, you will use your EQ to help GPT-4 help you with the EQ side of things. If you are high EQ and low IQ, you can ask GPT-4 for help, but it’s going to be trickier.

Once such models get multi-modal and can read - and generate - facial expressions and voice tones and body language? They are plausibly going to utterly dominate the EQ category.

This is also a failure to ask what happens if the AI is able to take over all the high-IQ work. Does that mean that EQ gets to shape the future? That science will be less important and art more important?

Quite the opposite. It will be a new age of science, of the wonders of technology and intelligence. You spending more time on other things is simply more evidence of your irrelevance. IQ will be in charge more than ever, except that force increasingly won’t be human. It won’t by default be a world for all of us to shape, it will be out of our hands.

Everything is Ethics Say Ethicists

Robin Hanson seems to be on point here.

Robin Hanson: Seeing a lit review of "AI ethics", in this context "ethics" seems to mean "anything that anyone complains could 'go wrong'".

Lit rarely invokes the econ distinction of "social failure" problems where coordination is needed to deal with. Anything anyone doesn't like counts as "ethics problem", regardless of whether coordination is needed.

I asked ChatGPT4 for AI problems that aren't ethics problems. It gave: " disliking … using AI in creating art because they believe art should be purely a human endeavor." & "dislikes that AI … are often given feminine names or voices." But these ARE ethics issues to some.

I asked for a 3rd example: "use of AI because it takes the 'human touch' out of certain interactions … it makes experiences impersonal or less authentic." Also an ethical issue to some.

I think ChatGPT4 is mistakenly assuming that ethics has a more constrained definition than "any complaint". Because of course most people mistakenly claim that their definition is so constrained.

These concerns are not only often considered ethical concerns, they are more ethical flavored concerns than the median concern about AI. If I had to name a non-ethical problem I’d suggest ‘it is bad at math’ or ‘it can’t handle letters within words.’ Technical problems. Even then, I’m sure the ethicists can think of something.

The Quest for Sane Regulations

Majority Leader Chuck Schumer launches ‘all hands on deck’ push to regulate AI. He says ‘Congress must join the AI revolution.’ What does all this mean? Even the Washington Post notes his speech was ‘light on specifics.’ Robin Hanson called it ‘news you can’t use.’

‘Delivering his most expansive remarks on the topic since the recent explosion of generative AI tools in Silicon Valley, Schumer unveiled a “new process” for fielding input from industry leaders, academics and advocates and called on lawmakers advance legislation in coming months that “encourages, not stifles, innovation” while ensuring the technology is deployed “safely."

Bing: The framework outlines five principles for crafting AI policy: securing national security and jobs, supporting responsible systems, aligning with democratic values, requiring transparency, and supporting innovation.

The words, how they do buzz. The post then devolves into arguments over who gets credit and who is erasing progress and other such nonsense, rather than asking what is worth doing now.

The reporter clearly thinks this is all about mundane risks and that anyone not realizing how serious that issue is deserves blame, and that we should be suspicious of industry influence.

There is zero indication anywhere that extinction risks will be part of the conversation. Nor are there any concrete details of what will be in such laws.

Timeline looks like months.

EU regulations, as usual, doing their best to be maximally bad.

Seb Krier: For those who haven't had the joy of dealing with the AI Act for years, Burges Salmon published a flowchart decision-tree to help navigate the latest Parliament version. As they note, "many areas that are open to interpretation or require guidance." Enjoy!

Davidad: All AI R&D is exempted from the EU AI Act. Limitations on and inspections of frontier R&D training runs, the main regulatory lever for superintelligence concerns, are completely absent, contra the ambient narrative that “the EU will regulate too harshly”.

Flow chart wouldn’t reproduce well here so click the link instead to see it.

They also specifically exempt military purposes.

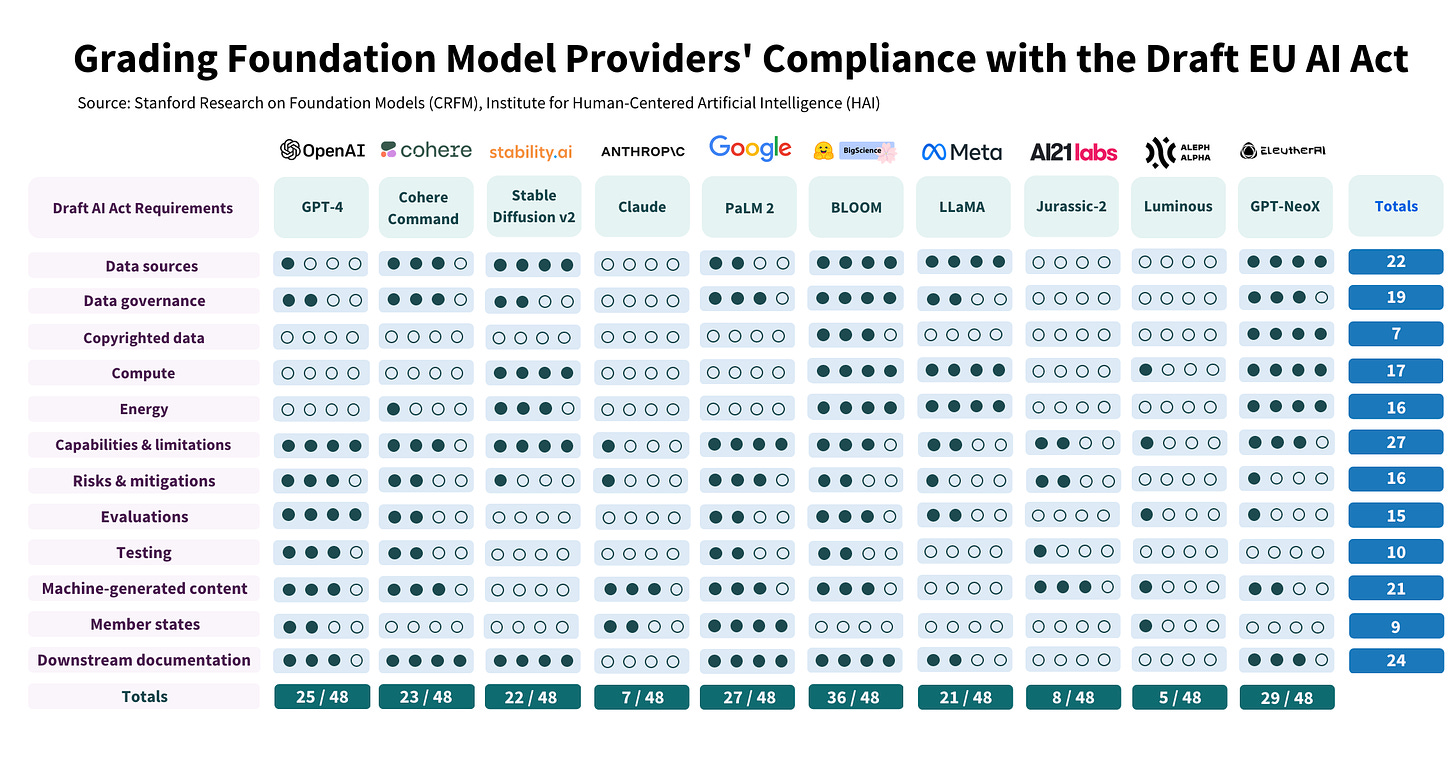

Do current models comply? No, they very much do not, as a Stanford study illustrates.

Some of these requirements are fixable. If you don’t have documentation, you can create it. If you don’t have tests, you can commission tests. ‘EU Member States’ is potentially a one page form. Others seem harder.

Copyrighted data and related issues seem like the big potential dealbreaker on this list, where compliance could be fully impractical. The API considerations are another place things might bind.

One difficulty is that a lot of the requirements are underspecified in the AI Act. There’s a reasonable version of implementation that might happen. Then there’s the unreasonable versions, one of which almost certainly happens instead, but which one?

Time maximally unsurprising exclusive: OpenAI Lobbied the E.U. to ‘Water Down’ AI Regulation. They proposed amendments, and argued their GPT models were not ‘high risk.’

“By itself, GPT-3 is not a high-risk system,” said OpenAI in a previously unpublished seven-page document that it sent to E.U. Commission and Council officials in September 2022, titled OpenAI White Paper on the European Union’s Artificial Intelligence Act. “But [it] possesses capabilities that can potentially be employed in high risk use cases.”

That seems true enough as written. The rest of the document is provided, and it’s what you would expect. Given known warnings from Sam Altman that OpenAI might have to leave the EU if the full current drafts were implemented, none of this should come as a surprise.

Nor is it hypocrisy. There are good regulations and ideas, and there are bad regulations and ideas. Was OpenAI’s white paper here focused on ensuring they were not put at a competitive disadvantage? Yes. That doesn’t mean their call for other, unrelated restrictions, designed to protect in other ways, is in any way invalid.

It is worth noting we have no evidence that OpenAI lobbied in any way to make anything in the law more stringent, or to introduce good ideas.

Simeon: That's what a basic incentive theory would predict. 1 question: is there any evidence that they lobbied to strengthen or try to have a more stringent regulation along certain axis? E.g. try to regulate the development of AI systems, have third party auditing, regulate existential risks, allow competitors to cooperate on safety? If they haven't, that gives a sense of their priorities. If they have, that's interesting for the world to know.

ML engineers: "omg, all the existing models would be ILLEGAL if the EU AI Act was enforced 😱😱😱?"

Me: "I mean, is regulation supposed to say "yup, go ahead with those non-existent good practices along those 12 different axis👍🙌"??

🤦

It is a lot easier to point out what is wrong than to figure out what is right. Also difficult to comply with newly decided rules, whether or not there were adequate existing best practices.

Ted Cruz says Congress should proceed cautiously and listen to experts.

Ted Cruz: To be honest, Congress doesn’t know what the hell it’s doing in this area... This is not a tech savvy group.

As Cruz would agree, the problem is, who are the experts?

Seth Lazar gets it, also perhaps misses the point?

I need to read more on importance of *tech neutral* regulation for AI. Regs change incentives, lead to different tech being developed for same ends. E.g. GDPR on cookies -> federated learning on cohorts. Surveillance capitalism and automated influence/manipulation unaffected.

Implementing regulations in a tech neutral way is indeed hard. Regulations are interpreted as damage and routed around, default implementations will often cause large distortions. If you don’t want to distort the tech and pick winners, that’s bad.

What if you did want to distort the tech? What if you thought that certain paths were inherently safer or more likely to lead to better futures for humans? Then you would want to have deeply tech non-neutral regulations.

That is the world I believe we are in. We actively want to discourage tech paths that lead to systems that are expensive or difficult to control the behavior of, control the distribution of or understand, and in particular to avoid unleashing systems that can be a foundation for future more capable systems without a remaining bottleneck. For now, the best guess among other things is that this means we want to push away from large training runs, and encourage more specialization and iteration instead.

Similarly, here is Lazar noticing what would make sense if AGI soon was ‘decently likely’:

Seth Lazar: I think [AI will be eventually much smarter than us and controlling much smarter things is at best very hard] is one (good) argument for x-risk *at some point in the possibly far future*. But it is *not* a good argument for x-risk from any actual AI systems or their likely successors. Would really like to know how many are worried about x-risk on these grounds alone.

Amanda Askell (Anthropic): If you find this compelling, would a decent likelihood that AI development is on an exponential without a need for hard fundamental insights (i.e. without the need for long gaps or delays in the exponential) be sufficient for you to be worried now?

Seth Lazar: I don’t think worry should be the target. If I had that view, I’d be arguing for radical controls to be introduced now on frontier AI development, probably a ban. It’s always the course of action indicated that matters most (to me), not the emotional attitude one should adopt.

Call from Dr. Joy Buolamwini, who is worried about mundane risks involving algorithmic bias, for cooperation with those like Max Tegmark concerned about extinction risks despite not sharing those same concerns. More of this would be great. The default interventions aimed at algorithmic bias seem likely to backfire, that doesn’t mean we can’t work to mitigate those real problems.

There are several advantages to advocating for stricter corporate legal liability for AI harms. One of them is that this helps correct for the negative externalities and establish necessary aspects of a safety mindset. A second is that it uses our existing legal framework. A third is that it’s difficult to claim with a straight face that advocates for holding companies liable are shilling for those companies. And yet.

Bet made on transformative AI: Matthew Barnett bets $1,000 against Ted Sanders’s $4,000 (both inflation-adjusted) that there will be a year of >30% world GDP growth by 2043. Ted pays if it happens, Matthew pays in 2043 if he loses. Lots of interesting questions regarding exactly when the payoff for such a bet has how much utility, and what the true odds are here. A lot of scenarios make it pointless or impossible to collect. I agree that this is roughly the correct sizing, to ensure the bet is felt without creating economic distortions or temptation to not pay.

The Week in Audio

Reid Hoffman and Mustafa Suleyman, a co-founder of DeepMind, on AI in our daily lives. I haven’t had time to listen yet.

"In 10 years time, there'll be billions of people using all kinds of AI. It's kinda hard to put into words how fundamental this revolution is about to be—I truly believe that this decade is going to be the most productive in human history."

"[Not] everybody has access to kindness and care. I think it’s going to be pretty incredible to imagine what people do with being shown reliable, ever-present, patient, non-judgmental, kindness and support, always on tap in their life."

Interesting wording on that first claim. If this coming decade is the most productive in human history, what happens in the decade after it?

Will AI be able to provide kindness and care in the ways people need? My presumption is ‘some ways yes, some ways no’ which is still pretty great.

Finally released, Tyler Cowen’s fireside chat with Kelsey Piper from 2022. As far as I can tell, Tyler still stands by everything he says here, and he says it efficiently and clearly, and in a way that seemed more forthcoming and real than the usual. It seemed like he was thinking unusually a lot. All of that speaks to Piper’s interview skills. It was an excellent listen even with the delay.

As usual, I agree with many of his key points like avoiding moral nervousness and abstaining from alcohol in particular and drugs in general, and focusing on what you yourself are good at, and institutional reform. I especially liked the note to not be too confident you know who are the good guys and who are the bad guys, even better is to realize it’s just a bunch of guys (and that AGI will not be).

I’m going to take yet another shot at this, I am not ready to give up on the AI disagreements, there is too much uniquely good stuff and too much potential value here to give up, so here we go.

On AI he presents a highly plausible story - that the US and China are locked in a prisoner’s dilemma, that no one has a plan to solve alignment and even if we did someone else would just reverse the sign, so alignment work is effectively accelerationist in practice, because AI proliferation is inevitable, that net all anti-extinction efforts have only accelerated AI and thus any AI risk. He even expects major destructive AI-led wars.

Then he puts up a stop sign about thinking beyond that, as he later did in various posts in various ways to various degrees, says you have to live in history, and refuses to ask what happens next in the world he himself envisions, beyond ‘if Skynet goes live’ then it goes live, which seems to be a continuing confusion? That the AI risks envisioned require that path?

When I model the world Tyler seems to be imagining, here and also later in time, and accept that prisoner’s dilemmas like current US-China relations are essentially unsolvable, that world seems highly doomed multiple times over. If alignment fails, we get some variant of what Tyler refers to as ‘Skynet goes live’ and we die. If alignment succeeds, people reverse it, we get races to the bottom including AIs directly in charge of war machines, even if we survive all that ordinary economics takes over and we get outcompeted and we die anyway. Tyler agrees AIs will ‘have their own economy’ and presumably not remain controlled because control isn’t efficient. Standard stories about externalities and competition and game theory all point in the same direction.

What are we going to do about that? The answer can’t be ‘hope a miracle occurs’ or, as his Hayekian lecture says, suggest that ‘we will be to the AIs as dogs are to humans and we’ll be training them too,’ because you can’t actually think that holds up if you think about it concretely. Thus, in part, why I came up with the Dial of Progress hypothesis, where the only two remaining ways out - centralized control of the AGI, or centralized control to ensure there is no AGI - are cures worse than the disease.

From three weeks ago, Robert Wright and Samuel Hammond on a Manhattan Project for AI Safety. Some early good basic technical discussion for those at the level for it. Hammond criticizes the Yudkowsky vision of a hard takeoff because the nature of AI systems has changed and progress should be continuous, but I don’t think that is clear. Due to the cost of training runs substantial leaps are still possible, applying better techniques and scaffolding can provide substantial boosts in performance instantly, as can pointing such systems in the right direction. There is still not that much daylight in most places or in general between the system that can be close to human, and the system that can be superior to human, and with much algorithmic or software or scaffolding based low-hanging fruit retraining the model or getting more hardware could easily not be required. Even if it is, the relevant period could still be very low - see the Shulman podcast or Alexander’s recent post for the math involved.

I do agree that ‘what happens to society along the way’ matters a lot, as I’ve said repeatedly. If we want to make good choices, we need to go into the crucial decision points in a good state. That means handling AI disruptions well, it also means building more houses where people want to live.

Marc Andreessen is continuing his logical incoherence tour with a podcast. As in, he says that rogue AI is a logically incoherent concept, one of many of his logically incoherent claims. The new version makes even less sense than the old. Officially putting him on the ‘do not cover’ list with LeCun.

Rhetorical Innovation

Jason Crawford (who we might say is concerned) offers a useful fake framework.

Jason Crawford: Levels of safety for a technology

1. So dangerous that no one can use it safely

2. Safe if used carefully, dangerous otherwise

3. Safe if used normally, dangerous in malicious hands

4. So safe that even bad actors cannot cause harm

Important to know which you are talking about.

Arguably:

Level 1 should be banned

Level 2 requires licensing/insurance schemes

Level 3 requires security against bad actors

Level 4 is ideal!

(All of this is a bit oversimplified but hopefully useful)

I’d also ask, safe for who? Dangerous to who, and of what magnitude?

If something is dangerous only to the user, or those who opt into the process, then I would argue that bans are almost never necessary.

If the harm can be sufficiently covered in practice by insurance or indemnification, then I’d say that should be sufficient as well, even for inherently dangerous things. Few things worth doing are ever fully safe. Licensing might or might not want to get involved in this process.

Whereas if the harm is catastrophic, with risk of extinction, then that changes things. At minimum, it is going to change the relevant insurance premiums.

I’d also say that something being harmful if used by bad actors means you need to have safeguards against bad actors generally, such as laws against murder and rape and theft and fraud. It does not mean that you need to put heavy handed safeguards into every ‘dual use’ technology by default. What level is the printing press or the telephone? That does not mean the telephone company shouldn’t be guarding against spam calls.

Nuclear is level 2 @kesvelt’s SecureDNA project aims to take DNA printing from level 3 to 4. Lots of debate right now about where AI is (or soon will be, or can be made to be)

I would actually argue nuclear is level 3. You do need to guard against bad actors, but it is dramatically safer than alternative power sources even under relatively poor past safety conditions, using worse less safe designs and with less safety mindset and fewer other precautions. Certainly our current Generation-4 reactors should quality as safe by default. We should regulate nuclear accordingly.

You know what kills people? Coal.

I don’t know enough about DNA printing, except that it does not sound like it would be level 4 if you had an AI doing the design specifications.

The AI doom argument is that it is level 1: even the most carefully designed system would take over the world and kill us all.

If AI is level 2, then a carefully designed and deployed system would be safe enough… But someone who created, say, a trading bot and just told it to make as much money as possible would destroy the world.

If AI is level 3, then the biggest risk is a terrorist group or mad scientist who uses an AI to wreak havoc—perhaps much more than they intended.

Getting AI to level 4 would be great, but that seems hard to achieve on its own—rather, the security systems of the entire world need to be upgraded to better protect against threats.

This implies that level 4 does include things like telephones, since AI certainly isn’t going to be simultaneously both useful and also safe in a way that a telephone isn’t.

My position is:

AGI if built soon is level 1.

If we solve an impossible-level problem (alignment) AGI becomes level 2.

I don’t see how we get AGI to level 3.

If you widely distribute a level 2 tool where the danger level on failure is existential, or where you face a collective action problem, it does not look so good.

A lot of people are thinking that getting AGI to level 2 is a victory condition, whereas instead it is the end of Phase 1, and the beginning of Phase 2, and we need to win both phases to not die.

I said this was oversimplified. One additional piece of complexity: the line between 2 and 3 depends on society: how good are our best practices?

Commercial airplanes with professional pilots are very safe now, but at the beginning of aviation they were much less safe.

Another piece of complexity is that all of the lines depend on society in another way: what is our bar for safety? What risks are unacceptable? Society's bar for safety tends to go up over time. Risks that were acceptable 50 years ago are no longer.

Another complexity is that the same basic technology might pose different risks at different levels. One risk of DNA printing is that you make smallpox. That's level 3.

Another risk is that you accidentally make some new, unknown pathogen. That's level 1 or (hopefully) 2.

This points to the problem of dual scales - what types of usage are safe, and what types of dangers are present if unsafe things occur. A car is not a safe machine, it also does not pose a large scale threat.

Hey, as Jason says, simplified model. You do what you have to do.

Connor Leahy clarifies in this thread that he sees rationality as a tool used to get what it is that you want, to get better rather than worse configurations of atoms, and there is no contradiction with also being a ‘humanist’ who would prefer that we not all die. Whereas Joscha Bach seems to join the people saying that it is incoherent and not allowed to have preferences over world states without formal justification. If you don’t want all humans to die, or for humans to cease to exist soon, he claims you need a ‘detailed ethical argument’ for this.

Connor Leahy: Many people in this part of the memesphere seem very confused about their own values and how they relate to reality. An Is is not an Ought. Just because evolution or entropy or whatever favors X (an Is) doesn't mean it is something that is morally good (an Ought).

Joscha Bach: I am aware of this discourse Connor. How do you justify your oughts against the oughts of other agents, human or artificial?

Connor Leahy: I don't, that's the point of Oughts, there is no objective level truth about the "correct" Oughts! It becomes bargaining theory at that point. There is no universally true and justifying argument that is compelling to every mind!

I may have preferences over other agents' preferences (i.e. I generally like other agents getting what they want, even if it's not the same kind of things I want, but there are obvious limits here as well, I wouldn't endorse other agents with preferences for torture getting their values satisfied), but at some point it just has to ground out into actual object level reality, which means bargaining/trading/conflict/equilibria.

Of course, in humans this is all much messier because humans do not cleanly factor their values from their epistemology and their decision theory, and so get horribly confused (as seen by eacc people) all the time. There are also much deeper philosophical problems around continuous agency and embededness, but those are topics that come after you've secured survival and have enough resources to spend on luxuries such as moral philosophy.

Joscha Bach: I was hoping you use "I like" as a shortcut for a detailed ethical argument and not a terminal claim. As a parent, i know that "I like" is entirely irrelevant, both in negotiations with myself and others, and what matters is what I should want and can achieve.

Connor Leahy: I think you are very confused if you think terminal claims are not sensible, and I'm not sure if it's possible to negotiate or communicate with someone that obscures their own preferences behind veils of philosophical prescriptivism like this.

I affirm Connor’s central point: No. You don’t need to justify your preferences. You are allowed to prefer things to other things. We can say some preferences are bad preferences and call them names. Often we do. That is fine too. Only fair. If your preference is for all humans to die, or you are indifferent about it, I would be sorely tempted to call that position a bunch of names. Instead I would say, I disagree with you, and I believe most others disagree with you, and if that is the reason you are advocating for a course of action then that is information we need to know.

Andrew Critch recounts in a deeply felt post the pervasive bullying and silencing that went on for many years around AI extinction risk, the same way such tactics are often used against marginalized groups. Only now can one come out and say such things without being dismissed as crazy or irrational - although as I’ve warned many people will never stop trying that trick. I don’t buy the ‘such bullying is the reason we have AGI x-risk’ arguments, the underlying problems really are super hard, but it would be a big help.

It really is nice to have others to warn about our supposed arguments from authority, as opposed to exclusively using arguments from authority against us.

Alex Lawsen: AI x-risk skepticism, pre 2023: "The only people who believe this are weirdos without proper AI credentials, so I'm not going to bother looking at the arguments or evidence".

AI x-risk skepticism, 2023 edition: "Why do you keep pointing out the credentials of the people who say they are concerned, rather than just making arguments or pointing to evidence? That's just an appeal to authority!”

AI x-risk skepticism, 2023 edition, version 2: "It is SUSPICIOUS that so many of the people signing open letters are world class professors or senior execs at industry labs. Of course they would want to say what they work on might be world changing."

As this is getting some attention, a couple of less-ranty thoughts: I'd rather not have discussion focused on comparing status/expertise. I think it's reasonable to respond to "there are no experts" with *points at experts*, but don't think *points at experts* is a good argument.

Deb Raji (other thread): It annoys me how much those advocating for existential risk expect us to believe them based on pure ethos (ie. authority of who says it)... do you know how many *years* of research it took to convince people machine learning models *might* be biased? And some are still in denial!

Shane Legg: After more than a decade of being told that nobody serious actually believes in catastrophic AGI risks, I think it's fair game to now point out that that's not true.

Deb Raji: Sure - but, for me, supposed acceptance by "CEOs", "AI researchers" & certain authoritative voices is an insufficient argument/justification for the level of policy action being advocated for. My issue is in framing these things as the primary evidence for "AI x-risk" advocacy.

Shane Legg: Why do you say "supposed acceptance"? Do you believe that the people who signed the CAIS letter didn't really accept what they signed? And why the use of scare quotes? Are they not really CEOs and AI researchers, in your opinion? Curious to know what you think. Thanks!

Deb Raji: I say "supposed acceptance" bc I'm not sure if all of those on these letters or responding to surveys are fully aware of the positions/claims they're signing unto [polls like the 42% of CEOs say AI could destroy humanity in 5-10 years one].

It is fascinating how little statements like Raji’s adjust to circumstances. ‘Not fully aware of the positions they are signing onto’ is quite a weird thing to say about one-line statements like “Mitigating the risk of extinction from AI should be a global priority alongside other societal-scale risks such as pandemics and nuclear war” or what the CEOs were asked. These are intentionally chosen to be maximally simple and clear. Similarly, asking whether proposals are actually in someone’s selfish interest is mostly not a step people take before assuming their statements are down out of purely selfish interests. Curious.

In the end, Lawsen is exactly on point: It’s the arguments, stupid. It’s not about the people. I want everyone to look at the people making the argument and think ‘oh well then I guess I should actually look at the arguments, then.’

Eliezer Yudkowsky makes the obvious point on last week’s story about potentially being able to read computer keys using the computer’s power light, that hard takeoff skeptics would have said ‘that’s absurd’ if you had included it in your story. Various replies of the form ‘but doing this is hard and it’s noisy and you can’t in practice pull it off.’ They might even, in this particular example, happen to be right.

GFodor: Side channel attacks are surprising. Any surprising vulnerability can be mapped to your claim.

Liron Shapira: His point is that people who are skeptical about AI exploiting human vulnerabilities are wildly underestimating what’s possible with human attackers vs human vulnerabilities, before factoring in the premise that AI will have many big advantages over humans.

Side channel attacks are one of many broad classes of thing where any given unknown affordance being discovered is surprising, but the existence of affordances that could be discovered, or some of them being discovered at all, is entirely expected. Any given example sounds absurd, but periodically something absurd is going to turn out to be real.

Alex Tabarrok has an excellent take affirming Eliezer’s perspective and my own.

Oh, and did you know AI can now reconstruct 3D scenes from reflections in the human eye?

Jason Crawford discusses the safety first approach of the Wright Brothers. Unlike many others, they took an iterative experimental approach that focused on staying safe while establishing control mechanisms, only later adding an engine. They flew as close to the ground as possible, on a beach so they’d land on sand, took minimal chances. Despite this, they still had a crash that injured one of them and killed a passenger. These are good safety lessons, including noticing that there were some nice properties here that don’t apply to the AI problem - among them, the dangers of flight are obvious, and flying very low is very similar to flying high.

We need a deep safety culture for AI labs, but as Simeon and Jason Crawford explore here, there’s safety cultures that mostly allow operation while erring on the side of caution (aviation) and safety cultures that mostly don’t allow operation (nuclear power if you include the regulations).

Unscientific Thoughts on Unscientific Claims

My thesis on Marc Andreessen’s recent post Why AI Will Save the World was essentially that he knew his claims were Obvious Nonsense when examined, because they weren’t intended to be otherwise. Thus, there was no point in offering a point-by-point rebuttal any more than you would point out the true facts about Yo Mama.

Then Antonio Garcia Martinez, who passes the ‘a bunch of my followers follow them’ test and has a podcast and 150k+ followers, made me wonder. In particular, he focused on the claim that thinking AI extinction risk was greater than zero was ‘unscientific.’

Antonio Garcia Martinez: Has there been a serious rebuttal to @pmarca's AI piece? The powers that be are pushing us to hear the 'other side' on @MOZ_Podcast, but I'm not sure there is anything like a serious other side whose arguments weren't already dissected in the original piece.

Yes, I saw the Dwarkesh thing.

Zvi Mowshowitz: The piece in question does not discuss, let alone dissect or seriously engage with, most arguments for AI extinction risk, other than by calling opponents liars and cultists. If there are particular arguments there you want a specific response to I would be happy to provide.

The dismissals of more mundane risks seem to boil down to trust that we can figure it out as we go, which in many places I share, but assumes the answer rather than addressing the question of what concretely happens.

Martinez: Ok. But to take the other side of this for a second and repeat the pmarca challenge: what falsifiability criterion would you accept, where we reject the extinction possibility based on its improbability? Because we can sit here imagining sci-fi scenarios forever.

That was indeed the best objection raised by Andreessen. There are a number of ways one can respond to it, some of which I stole from Krueger below.

Here’s my basic list.

This proves too much. Almost none of our major decisions are made under ‘official scientific’ conditions. The default human condition is that one must act with limited information. You apply Bayes Rule and do the best you can. The finding out is step two, not step one. If it turns out that finding out would kill you, perhaps you shouldn’t fuck around.

The question privileges the hypothesis that AI doesn’t pose an extinction risk. One could make the exact same challenge in reverse.

More than that, the question assumes that AI extinction risk is the weird outcome that happens due to a particular mechanism of failure that you should be able to explain, lay out and test, and once that particular mechanism is dismissed you can stop worrying, all the other scenarios will be fine. Otherwise, why would a single experiment (other than building the damn thing) be expected to be sufficient? And no, this isn’t a strawman, this happens all the time, ‘They worry about X in particular happening, X is dumb and won’t happen, so nothing will go wrong.’ In fact, it is sufficiently not a strawman that I feel the need to reiterate that no, that is very much not how this works.

Retrospectively, we have run a lot of experiments, with mixed results, that should update us. I would argue that overall they strongly favor treating this as a serious risk.

There are definitely scientific experiments you could run to settle the issue, unfortunately all the obvious ones happen to get us all killed. There are many scientific theories where there are experiments one could run in theory to check their predictions and falsify the claim, but we lack the capability to run those experiments. That does not make the claims unscientific.

There’s lots and lots of technical alignment work that makes tons of falsifiable concrete claims all the time, experiments get run, seriously what the hell, let’s go. Compared to most claims in public debate, this is relatively scientific, actually.

The original claim is a misunderstanding of how science works. Martinez actually handles this correctly, the question is what evidence would cause such a low estimate of the probability of extinction risk that we should ignore it? That is the right question.

The right answer is ‘there are quite a lot of things that happen constantly that are evidence on the probability of extinction risk.’ I suggested, as potential future examples that would cut my p(doom) substantially, progress in controlling current systems especially on first attempt, progress of interpretability work on existing systems, and an example of a clearly dumber thing successfully aligning a clearly smarter thing without outside help. None of those are great tests, but they’re a start. If the question is ‘what’s one experiment that would drop your p(doom) to under 1%?’ then I can’t think of such an experiment that would provide that many bits of data, without also being one where getting the good news seems absurd or being super dangerous.

There is plenty of counterfactual evidence that could be true, that would make me not worried, that I know is simply not how our physics works. I don’t see much point in brainstorming a list here. This is importantly different from (for example) a claim that God exists, where no such list could be created.

AI alignment and extinction risk questions are in large part mathematical questions, or of similar structure to mathematical questions. Math is not science, yet hopefully we can agree math is still valid, indeed the most valid thing.

David Krueger makes a good attempt at explaining, which again I stole from a bit.

I've been trying to write a good Tweet(/thread) about why objections that AI x-risk is "not scientific enough" are misguided and prove too much. There's a lot to say, and it deserves a full blog post, but I'll try and just rapid fire a few arguments:

1) Most decisions we make aren't guided by a rigorous scientific understanding, but by common sense, logic, values, deference to others, etc. "It's not scientific" is prima facie not a good reason for rejecting a reason or argument.

2) The boundaries of science are not clear, and there are academic fields that are widely agreed to both: A) not be science B) sometimes generate knowledge that is relatively univeral/objective Examples include math and history.

3) Even hard science is fundamentally theory-laden. We must make assumptions about the world in order to collect and interpret empirical data. For instance, we assume some environmental factors matter and others don't, we assume some physical laws are constant, etc.

4) The most important decisions we make as a society (most policy-making) is not guided by hard science. Some of the most significant areas of public policy are things like geopolitics and macroeconomics, where experiments are impossible, and academics resort to theory.