It’s been another *checks notes* two days, so it’s time for all the latest DeepSeek news.

You can also see my previous coverage of the r1 model and, from Monday, various reactions including the Panic at the App Store.

First, Reiterating About Calming Down About the $5.5 Million Number

Before we get to new developments, I especially want to reiterate and emphasize the need to calm down about that $5.5 million ‘cost of training’ for v3.

I wouldn’t quite agree with Palmer Luckey that ‘the $5m number is bogus,’ and I wouldn’t call it a ‘Chinese psyop’ because I think we mostly did this to ourselves, but it is very often being used in a highly bogus way - equating the direct compute cost of training v3 with the all-in cost of creating r1, which is a very different number. DeepSeek is cracked, they cooked, and r1 is super impressive, but the $5.5 million v3 training cost:

Is the cloud market cost of the amount of compute used to directly train v3.

That’s not how they trained v3. They trained v3 on their own cluster of h800s, which was physically optimized to hell for software-hardware integration.

Thus, the true compute cost to train v3 involves assembling the cluster, which cost a lot more than $5.5 million.

That doesn’t include the compute cost of going from v3 → r1.

That doesn’t include the costs of hiring the engineers and figuring out how to do all of this, that doesn’t include the costs of assembling the data, and so on.

Again, yes they did this super efficiently and cheaply compared to the competition, but no, you don’t spend $5.5 million and out pops r1. No.
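To make the gap concrete, here is a minimal sketch of the arithmetic. The GPU-hour count, the $2 per GPU-hour rental rate and the 2,048-GPU cluster size are the figures reported in the DeepSeek-V3 technical report. The per-GPU purchase price is purely my illustrative assumption, not a known number, and it leaves out networking, power, staff, data and the v3 → r1 step entirely.

```python
# Reported in the DeepSeek-V3 technical report.
GPU_HOURS = 2_788_000          # H800 GPU-hours for the v3 training run
RENTAL_RATE_USD = 2.0          # assumed cloud rental price per GPU-hour
CLUSTER_GPUS = 2048            # H800s in the training cluster

# ASSUMPTION (hypothetical, for illustration only): purchase price per GPU.
ASSUMED_PRICE_PER_GPU_USD = 30_000


def rental_cost(gpu_hours: float, rate_usd_per_hour: float) -> float:
    """Cloud-market cost of the compute used by one training run."""
    return gpu_hours * rate_usd_per_hour


def cluster_hardware_cost(num_gpus: int, price_per_gpu_usd: float) -> float:
    """GPU-only cost of owning the cluster (ignores everything else)."""
    return num_gpus * price_per_gpu_usd


if __name__ == "__main__":
    headline = rental_cost(GPU_HOURS, RENTAL_RATE_USD)
    hardware = cluster_hardware_cost(CLUSTER_GPUS, ASSUMED_PRICE_PER_GPU_USD)
    print(f"Headline training cost: ${headline:,.0f}")   # $5,576,000
    print(f"Assumed GPU-only cluster cost: ${hardware:,.0f}")
    print(f"Ratio: {hardware / headline:.1f}x")
```

Swap in whatever hardware price you believe. The point is only that the headline number prices one run at rental rates, not the capability to do the run.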

OpenAI Offers Its Congratulations

Altman handled his response to r1 with grace.

OpenAI plans to ‘pull up some new releases.’

Meaning, oh, you want to race? I suppose I’ll go faster and take fewer precautions.

Sam Altman: deepseek's r1 is an impressive model, particularly around what they're able to deliver for the price.

we will obviously deliver much better models and also it's legit invigorating to have a new competitor! we will pull up some releases.

but mostly we are excited to continue to execute on our research roadmap and believe more compute is more important now than ever before to succeed at our mission.

the world is going to want to use a LOT of ai, and really be quite amazed by the next gen models coming.

look forward to bringing you all AGI and beyond.

It is very Galaxy Brain to say ‘this is perhaps good for OpenAI’ and presumably it very much is, but here’s a scenario.

A lot of people try ChatGPT with GPT-3.5, are not impressed, think it hallucinates all the time, is a clever toy, and so on.

For two years they don’t notice improvements.

DeepSeek releases r1, and it gets a lot of press.

People try the ‘new Chinese version’ and realize AI is a lot better now.

OpenAI gets to incorporate DeepSeek’s innovations.

OpenAI comes back with free o3-mini and (free?) GPT-5 and better agents.

People use AI a lot more, OpenAI ends up overall doing better.

Ethan Mollick: DeepSeek is a really good model, but it is not generally a better model than o1 or Claude.

But since it is both free & getting a ton of attention, I think a lot of people who were using free “mini” models are being exposed to what a early 2025 reasoner AI can do & are surprised

I’m not saying that’s the baseline scenario, but I do expect the world to be quite amazed at the next generation of models, and they could now be more primed for that.

Mark Chen (Chief Research Officer, OpenAI): Congrats to DeepSeek on producing an o1-level reasoning model! Their research paper demonstrates that they’ve independently found some of the core ideas that we did on our way to o1.

However, I think the external response has been somewhat overblown, especially in narratives around cost. One implication of having two paradigms (pre-training and reasoning) is that we can optimize for a capability over two axes instead of one, which leads to lower costs.

But it also means we have two axes along which we can scale, and we intend to push compute aggressively into both!

As research in distillation matures, we're also seeing that pushing on cost and pushing on capabilities are increasingly decoupled. The ability to serve at lower cost (especially at higher latency) doesn't imply the ability to produce better capabilities.

We will continue to improve our ability to serve models at lower cost, but we remain optimistic in our research roadmap, and will remain focused in executing on it. We're excited to ship better models to you this quarter and over the year!

Given the costs involved, and that you can scale to get better outputs, ‘serve faster and cheaper’ and ‘get better answers’ seem pretty linked, or are going to look rather similar.

There is still a real and important difference between ‘I spend 10x as much compute to get 10x as many tokens to think with’ versus ‘I taught the model how to do longer CoT’ versus ‘I made the model smarter.’ Or at least I think there is.

Scaling Laws Still Apply

Should we now abandon all our plans to build gigantic data centers because DeepSeek showed we can run AI cheaper?

No. Of course not. We’ll need more. Jevons Paradox and all that.
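For those who want the Jevons logic spelled out, here is a toy sketch. It assumes a constant-elasticity demand curve, which is my simplification and not anything measured about AI demand; the function name and parameters are hypothetical. When demand is elastic enough (elasticity above 1), a price cut raises total spending rather than lowering it.

```python
def total_spend(price: float, elasticity: float, scale: float = 1.0) -> float:
    """Total spending under a toy constant-elasticity demand curve.

    quantity = scale * price ** (-elasticity), so spend = price * quantity.
    """
    quantity = scale * price ** (-elasticity)
    return price * quantity


if __name__ == "__main__":
    # A 10x price cut, under elastic and inelastic demand (both assumed values).
    for elasticity in (1.5, 0.5):
        before = total_spend(1.0, elasticity)
        after = total_spend(0.1, elasticity)
        direction = "rises" if after > before else "falls"
        print(f"elasticity={elasticity}: total spend {direction} "
              f"({before:.2f} -> {after:.2f})")
```

Whether demand for intelligence is actually that elastic is the real question. The bet behind 'we'll need more' is that it is.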

Another question is compute governance. Does DeepSeek’s model prove that there’s no point in using compute thresholds for frontier model governance?

My answer is no. DeepSeek did not mean the scaling laws stopped working. DeepSeek found new ways to scale and economize, and also to distill. But doing the same thing with more compute would have gotten better results, and indeed more compute is getting other labs better results if you don’t control for compute costs, and also they will now get to use these innovations themselves.

Karen Hao: Much of the coverage has focused on U.S.-China tech competition. That misses a bigger story: DeepSeek has demonstrated that scaling up AI models relentlessly, a paradigm OpenAI introduced and champions, is not the only, and far from the best, way to develop AI.

Thus far OpenAI & its peer scaling labs have sought to convince the public & policymakers that scaling is the best way to reach so-called AGI. This has always been more of an argument based in business than in science.

Yoavgo: This is trending in my feed, but I don't get it. DeepSeek did not show that scale is not the way to go for AI (their base model is among the largest in parameter counts; their training data is huge, at 13 trillion tokens). They just scaled more efficiently.

Jon Stokes: Holy wow what do words even mean. What R1 does is a new type of scaling. It's also GPU-intensive. In fact, the big mystery today in AI world is why NVIDIA dropped despite R1 demonstrating that GPUs are even more valuable than we thought they were. No part of this is coherent. 🤯

Stephen McAleer (OpenAI): The real takeaway from DeepSeek is that with reasoning models you can achieve great performance with a small amount of compute. Now imagine what you can do with a large amount of compute.

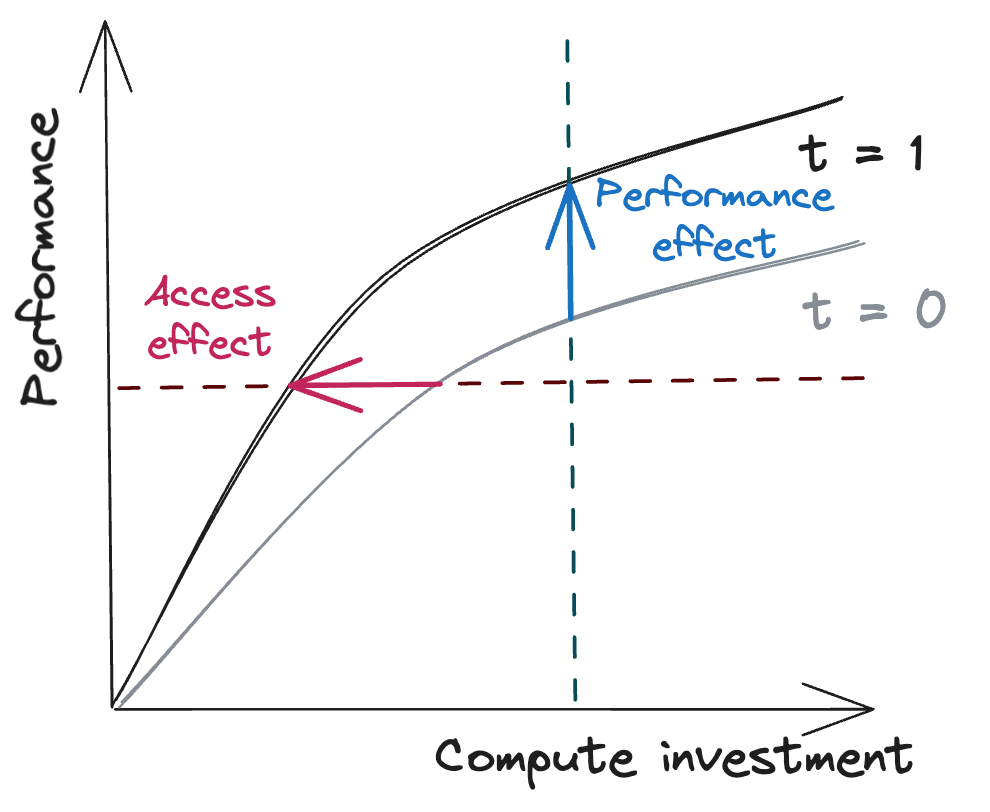

Noam Brown (OpenAI): Algorithmic breakthroughs and scaling are complementary, not in competition. The former bends the performance vs compute curve, while the latter moves further along the curve.

Benjamin Todd: Deepseek hasn't shown scaling doesn't work. Take Deepseek's techniques, apply 10x the compute, and you'll get much better performance.

And compute efficiency has always been part of the scaling paradigm.

Ethan Mollick: The most unnerving part of the DeepSeek reaction online has been seeing folks take it as a sign that AI capability growth is not real.

It signals the opposite, large improvements are possible, and is almost certain to kick off an acceleration in AI development through competition.

I know a lot of people want AI to go away, but I am seeing so many interpretations of DeepSeek in ways that don't really make sense, or misrepresent what they did.

Dealing with the implications of AI, and trying to steer it towards positive use, is now more urgent, not less.

Andrew Rettek: Deepseek means OPENAI just increased their effective compute by more than an OOM.

OpenAI and Anthropic (in the forms of CEOs Sam Altman and Dario Amodei) have both expressed agreement on that since the release of r1, saying that they still believe the future involves very large and expensive training runs, including large amounts of compute on the RL step. David Sacks agreed as well, so the administration knows.
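The 'bends the curve versus moves along the curve' point can be sketched with a toy power law. Everything here is an illustrative assumption: the functional form, the exponent and the function name are made up for the example and not fitted to any real model. The only thing it shows is that an efficiency gain acts like a multiplier on compute, so the two stack rather than substitute.

```python
import math


def toy_loss(compute: float, efficiency: float = 1.0, alpha: float = 0.05) -> float:
    """Toy scaling law: loss falls as a power of *effective* compute.

    effective compute = efficiency * compute (hypothetical form, alpha assumed).
    """
    return (efficiency * compute) ** (-alpha)


if __name__ == "__main__":
    small, large = 1e24, 1e25  # arbitrary compute budgets

    # A 10x efficiency gain on the small budget matches the large budget.
    print(math.isclose(toy_loss(small, efficiency=10), toy_loss(large)))

    # Applying the same gain to the large budget does better still.
    print(toy_loss(large, efficiency=10) < toy_loss(small, efficiency=10))
```

In this toy picture, a lab with 10x the compute that copies the efficiency trick lands an order of magnitude further along the curve, which is what the 'effective compute' framing above is getting at.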

One can think of all this as combining multiple distinct scaling laws. Mark Chen above talked about two axes but one could refer to at least four?

You can scale up how many tokens you reason with.

You can scale up how well you apply your intelligence to doing reasoning.

You can scale up how much intelligence you are using in all this.

You can scale up how much of this you can do per dollar or amount of compute.

Also you can extend to new modalities and use cases and so on.

So essentially: Buckle up.

Speaking of buckling up, nothing to see here, just a claimed 2x speed boost to r1, written by r1. Of course, that’s very different from r1 coming up with the idea.

Aiden McLaughlin: switching to reasoners is like taking a sharp turn on a racetrack. everyone brakes to take the turn; for a moment, all cars look neck-and-neck

when exiting the turn, small first-mover advantages compound. and ofc, some cars have enormous engines that eat up straight roads

Dean Ball: I recommend trying not to overindex on the industry dynamics you’re observing now in light of the deepseek plot twist, or indeed of any particular plot twist. It’s a long game, and we’re riding a world-historical exponential. Things will change a lot, fast, again and again.

It’s not that Jan is wrong, I’d be a lot more interested in paying for o1 pro if I had pdfs enabled, but… yeah.

Other r1 and DeepSeek News Roundup

China Talk covers developments. The headline conclusion is that yes, compute very much will continue to be a key factor, everyone agrees on this. They note there is a potential budding DeepSeek partnership with ByteDance, which could unlock quite a lot of compute.

Here was some shade:

Founder and CEO Liang Wenfeng is the core person of DeepSeek. He is not the same type of person as Sam Altman. He is very knowledgeable about technology.

Also important at least directionally:

Pioneers vs. Chasers: 'AI Progress Resembles a Step Function – Chasers Require 1/10th the Compute’

Fundamentally, DeepSeek was far more of an innovator than other Chinese AI companies, but it was still a chaser here, not a pioneer, except in compute efficiency, which is what chasers do best. If you want to maintain a lead and it’s much easier to follow than lead, well, time to get good and scale even more. Or you can realize you’re just feeding capability to the Chinese and break down crying and maybe keep your models for internal use, it’s an option.

I found this odd:

The question of why OpenAI and Anthropic did not do work in DeepSeek’s direction is a question of company-specific focus. OpenAI and Anthropic might have felt that investing their compute towards other areas was more valuable.

One hypothesis for why DeepSeek was successful is that unlike Big Tech firms, DeepSeek did not work on multi-modality and focused exclusively on language. Big Tech firms’ model capabilities aren’t weak, but they have to maintain a low profile and cannot release too often. Currently, multimodality is not very critical, as intelligence primarily comes from language, and multimodality does not contribute significantly to improving intelligence.

It’s odd because DeepSeek spent so little compute, and the efficiency gains pay for themselves in compute quite rapidly. And also the big companies are indeed releasing rapidly. Google and OpenAI are constantly shipping, even Anthropic ships. The idea of company focus seems more on point, and yes DeepSeek traded multimodality and other features for pure efficiency because they had to.

Also note what they say later:

Will developers migrate from closed-source models to DeepSeek? Currently, there hasn’t been any large-scale migration, as leading models excel in coding instruction adherence, which is a significant advantage. However, it’s uncertain whether this advantage will persist in the future or be overcome.

From the developer's perspective, models like Claude-3.5-Sonnet have been specifically trained for tool use, making them highly suitable for agent development. In contrast, models like DeepSeek have not yet focused on this area, but the potential for growth with DeepSeek is immense.

As in, r1 is technically impressive as hell, and it definitely has its uses, but there’s a reason the existing models look like they do - the corners DeepSeek cut actually do matter for what people want. Of course DeepSeek will likely now turn to fixing such problems among other things and we’ll see how efficiently they can do that too.

McKay Wrigley emphasizes the point that visible chain of thought (CoT) is a prompt debugger. It’s hard to go back to not seeing CoT after seeing CoT.

Gallabytes reports that DeepSeek’s image model Janus Pro is a good first effort, but not good yet.

Even if we were to ‘fully unlock’ all computing power in personal PCs for running AI, that would only increase available compute by ~10%, most compute is in data centers.

People Love Free

We had a brief period where DeepSeek would serve you up r1 free and super fast.

It turns out that’s not fully sustainable or at least needs time to scale as fast as demand rose, and you know how such folks feel about the ‘raise prices’ meme:

Gallabytes: got used to r1 and now that it's overloaded it's hard to go back. @deepseek_ai please do something amazing and be the first LLM provider to offer surge pricing. the unofficial APIs are unusably slow.

I too encountered slowness, and instantly it made me realize ‘yes the speed was a key part of why I loved this.’

DeItaone (January 27, 11:09am): DEEPSEEK SAYS SERVICE DEGRADED DUE TO 'LARGE-SCALE MALICIOUS ATTACK'

Could be that. Could be too much demand and not enough supply. This will of course sort itself out in time, as long as you’re willing to pay, and it’s an open model so others can serve the model as well, but ‘everyone wants to use the shiny new model you are offering for free’ is going to run into obvious problems.

Yes, of course one consideration is that if you use DeepSeek’s app it will collect all your data including device model, operating system, keystroke patterns or rhythms, IP address and so on and store it all in China.

Did you for a second think otherwise? What you do with that info is on you.

This doesn’t appear to rise to TikTok 2.0 levels of rendering your phone and data insecure, but let us say that ‘out of an abundance of caution’ I will be accessing the model through their website not the app thank you very much.

Liv Boeree: tiktok round two, here we go.

AI enthusiasts have the self control of an incontinent chihuahua.

Typing Loudly: you can run it locally without an internet connection

Liv Boeree: cool and what percentage of these incontinent chihuahuas will actually do this.

I’m not going so far as to use third party providers for now, because I’m not feeding any sensitive data into the model, and DeepSeek’s implementation here is very nice and clean, so I’ve decided lazy is acceptable. I’m certainly not laying out ~$6,000 for a self-hosting rig, unless someone wants to buy one for me in the name of science.

Note that if you’re looking for an alternative source, you want to ensure you’re not getting one of the smaller distillations, unless that is what you want.

Investigating How r1 Works

Janus is testing for steganography in r1, potentially looking for assistance.

Janus also thinks Thebes’ theory here is likely to be true, that v3 was hurt by dividing into too many too small experts, but r1 lets them all dump their info into the CoT and collaborate, at least partially fixing this.

Janus notes that r1 simply knows things and thinks about them, straight up. This was in response to Thebes speculating that all our chain of thought considerations have now put sufficient priming into the training data that CoT approaches work much better than they used to. Prithviraj says that is not the case and that it’s about improved base models, which is the first obvious thought - the techniques work better off a stronger base, simple as that.

Thebes: why did R1's RL suddenly start working, when previous attempts to do similar things failed?

theory: we've basically spent the last few years running a massive acausally distributed chain of thought data annotation program on the pretraining dataset.

deepseek's approach with R1 is a pretty obvious method. They are far from the first lab to try "slap a verifier on it and roll out CoTs."

But it didn't used to work that well.

…

In the last couple of years, chains of thought have been posted all over the internet

…

Those CoTs in the V3 training set gave GRPO enough of a starting point to start converging, and furthermore, to generalize from verifiable domains to the non-verifiable ones using the bridge established by the pretraining data contamination.

And now, R1's visible chains of thought are going to lead to *another* massive enrichment of human-labeled reasoning on the internet, but on a far larger scale... The next round of base models post-R1 will be *even better* bases for reasoning models.

in some possible worlds, this could also explain why OpenAI seemingly struggled so much with making their reasoning models in comparison. if they're still using 4base or distils of it.

Prithviraj: Simply, no. I've been looking at my old results from doing RL with "verifiable" rewards (math puzzle games, python code to pass unit tests) starting from 2019 with GPT-1/2 to 2024 with Qwen Math. Deepseek's success likely lies in the base models improving, the RL is constant

Janus: This is an interesting hypothesis. DeepSeek R1 also just seems to have a much more lucid and high-resolution understanding of LLM ontology and history than any other model I’ve seen. (DeepSeek V3 did not seem to in my limited interactions with it, though.)

I did not expect this on priors for a reasoner, but perhaps the main way that r1 seems smarter than any other LLM I've played with is the sheer lucidity and resolution of its world model—in particular, its knowledge of LLMs, both object- and meta-level, though this is also the main domain of knowledge I've engaged it in, and perhaps the only one I can evaluate at world-expert level. So, it may apply more generally.

In effective fluid intelligence and attunement to real-time context, it actually feels weaker than, say, Claude 3.5 Sonnet. But when I talk to Sonnet about my ideas on LLMs, it feels like it is more naive than me, and it is figuring out a lot of things in context from “first principles.” When I talk to Opus about these things, it feels like it is understanding me by projecting the concepts onto more generic, resonant hyperobjects in its prior, meaning it is easy to get on the same page philosophically, but this tropological entanglement is not very precise. But with r1, it seems like it can simply reference the same concrete knowledge and ontology I have, much more like a peer. And it has intense opinions about these things.

Wordgrammer thread on the DeepSeek technical breakthroughs. Here’s his conclusion, which seems rather overdetermined:

Wordgrammer: “Is the US losing the war in AI??” I don’t think so. DeepSeek had a few big breakthroughs, we have had hundreds of small breakthroughs. If we adopt DeepSeek’s architecture, our models will be better. Because we have more compute and more data.

r1 tells us it only takes ~800k samples of ‘good’ RL reasoning to convert other models into RL reasoners, and Alex Dimakis says it could be a lot less, in his test they outperformed o1-preview with only 17k. Now that r1 is out, everyone permanently has an unlimited source of at least pretty good samples. From now on, to create or release a model is to create or release the RL version of that model, even more than before. That’s on top of all the other modifications you automatically release.

Nvidia Chips are Highly Useful

Olivier Blanchard: DeepSeek and what happened yesterday: Probably the largest positive tfp shock in the history of the world.

The nerdy version, to react to some of the comments. (Yes, electricity was big):

DeepSeek and what happened yesterday: Probably the largest positive one day change in the present discounted value of total factor productivity growth in the history of the world. 😀

James Steuart: I can’t agree Professor, Robert Gordon’s book gives many such greater examples. Electric lighting is a substantially greater TFP boost than marginally better efficiency in IT and professional services!

There were some bigger inventions in the past, but on much smaller baselines.

Our reaction to this was to sell the stocks of those who provide the inputs that enable that tfp shock.

There were other impacts as well, including to existential risk, but as we’ve established the market isn’t ready for that conversation in the sense that the market (highly reasonably as has been previously explained) will be ignoring it entirely.

Welcome to the Market

Daniel Eth: Hot take, but if the narrative from NYT et al had not been “lol you don’t need that many chips to train AI systems” but instead “Apparently AI is *not* hitting a wall”, then the AI chip stocks would have risen instead of fallen.

Billy Humblebrag: "Deepseek shows that ai can be built more cheaply than we thought so you don’t need to worry about ai" is a hell of a take

Joe Weisenthal: Morgan Stanley: "We gathered feedback from a number of industry sources and the consistent takeaway is that this is not affecting plans for GPU buildouts."

I would not discount the role of narrative and vibes in all this. I don’t think that’s the whole Nvidia drop or anything. But it matters.

Roon: Plausible reasons for Nvidia drop:

DeepSeek success means NVDA is now expecting much harsher sanctions on overseas sales.

Traders think that a really high-tier open-source model puts several American labs out of a funding model, decreasing overall monopsony power.

We will want more compute now until the heat death of the universe; it’s the only reason that doesn’t make sense.

Palmer Luckey: The markets are not smarter on AI. The free hand is not yet efficient because the number of legitimate experts in the field is near-zero.

The average person making AI calls on Wall Street had no idea what AI even was a year ago and feels compelled to justify big moves.

Alex Cheema notes that Apple was up on Monday, and that Apple’s chips are great for running v3 and r1 inference.

Alex Cheema: Market close: $NVDA: -16.91% | $AAPL: +3.21%

Why is DeepSeek great for Apple?

Here's a breakdown of the chips that can run DeepSeek V3 and R1 on the market now:

NVIDIA H100: 80GB @ 3TB/s, $25,000, $312.50 per GB

AMD MI300X: 192GB @ 5.3TB/s, $20,000, $104.17 per GB

Apple M2 Ultra: 192GB @ 800GB/s, $5,000, $26.04(!!) per GB

Apple's M2 Ultra (released in June 2023) is 4x more cost efficient per unit of memory than AMD MI300X and 12x more cost efficient than NVIDIA H100!

Eric Hartford: 3090s, $700 for 24gb = $29/gb.

Alex Cheema: You need a lot of hardware around them to load a 700GB model in 30 RTX 3090s. I’d love to see it though, closest to this is probably stacking @__tinygrad__ boxes.
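The numbers in that thread are easy to check. Here is a minimal sketch using only the memory sizes and prices quoted above, plus the roughly 700GB model size Alex mentions. The helper names are mine. It looks at memory capacity per dollar only and ignores memory bandwidth, interconnect and the hardware around the chips, which is exactly the caveat Alex raises about the 3090s.

```python
import math

# (memory in GB, price in USD), as quoted in the thread above.
CHIPS = {
    "NVIDIA H100": (80, 25_000),
    "AMD MI300X": (192, 20_000),
    "Apple M2 Ultra": (192, 5_000),
    "RTX 3090": (24, 700),
}

MODEL_SIZE_GB = 700  # approximate size quoted in the thread


def usd_per_gb(memory_gb: float, price_usd: float) -> float:
    """Price per GB of on-device memory."""
    return price_usd / memory_gb


def units_needed(model_gb: float, memory_gb: float) -> int:
    """Minimum number of devices whose combined memory holds the model."""
    return math.ceil(model_gb / memory_gb)


if __name__ == "__main__":
    for name, (memory_gb, price_usd) in CHIPS.items():
        count = units_needed(MODEL_SIZE_GB, memory_gb)
        print(f"{name}: ${usd_per_gb(memory_gb, price_usd):.2f}/GB, "
              f"{count} units, ${count * price_usd:,} total")
```

The 4x and 12x ratios check out, and so does the count of 30 RTX 3090s, which come in at about $29 per GB, slightly worse than the M2 Ultra before counting everything you need around them.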

That’s cute. But I do not think that was the main reason why Apple was up. I think Apple was up because their strategy doesn’t depend on having frontier models but it does depend on running AIs on iPhones. Apple can now get their own distillations of r1, and use them for Apple Intelligence. A highly reasonable argument.

The One True Newsletter, Matt Levine’s Money Stuff, is of course on the case of DeepSeek’s r1 crashing the stock market, and asking what cheap inference for everyone would do to market prices. He rapidly shifts focus to non-AI companies, asking which ones benefit. It’s great if you use AI to make your management company awesome, but not if you get cut out because AI replaces your management company.

(And you. And the people it manages. And all of us. And maybe we all die.)

But I digress.

(To digress even further: While I’m reading that column, I don’t understand why we should care about the argument under ‘Dark Trading,’ since this mechanism decreases retail transaction costs to trade and doesn’t impact long term price discovery at all, and several LLMs confirmed this once challenged.)

Ben Thompson Weighs In

Ben Thompson continues to give his completely different kind of technical tech company perspective, in FAQ format, including good technical explanations that agree with what I’ve said in previous columns.

Here’s a fascinating line:

Q: I asked why the stock prices are down; you just painted a positive picture!

A: My picture is of the long run; today is the short run, and it seems likely the market is working through the shock of R1’s existence.

That sounds like Ben Thompson is calling it a wrong-way move, and indeed later he explicitly endorses Jevons Paradox and expects compute use to rise. The market is supposed to factor in the long run now. There is no ‘this makes the price go down today and then up next week’ unless you’re very much in the ‘the EMH is false’ camp. And these are literally the most valuable companies in the world.

Here’s another key one:

Q: So are we close to AGI?

A: It definitely seems like it. This also explains why Softbank (and whatever investors Masayoshi Son brings together) would provide the funding for OpenAI that Microsoft will not: the belief that we are reaching a takeoff point where there will in fact be real returns towards being first.

Masayoshi Son feels the AGI. Masayoshi Son feels everything. He’s king of feeling it.

His typical open-model-stanning arguments on existential risk later in the post are as always disappointing, but in no way new or unexpected.

It continues to astound me that such intelligent people can think: Well, there’s no stopping us creating things more capable and intelligent than humans, so the best way to ensure that things smarter and more capable than humans go well for humans is to ensure that there are as many such entities as possible and that humans cannot possibly have any collective control over those new entities.

On another level, of course, I’ve accepted that people do think this. That they somehow cannot fathom that if you create things more intelligent and capable and competitive than humans there could be the threat that all the humans would end up with no power, rather than that the wrong humans might have too much power. Or think that this would be a good thing - because the wrong humans wouldn’t have power.

Similarly, Ben’s call for absolutely no regulations whatsoever, no efforts at safety whatsoever outside of direct profit motives, ‘cut out all the cruft in our companies that has nothing to do with winning,’ is exactly the kind of rhetoric I worry about getting us all killed in response to these developments.

I should still reiterate that Ben to his credit is very responsible and accurate here in his technical presentation, laying out what DeepSeek and r1 are and aren’t accomplishing here rather than crying missile gap. But the closing message remains the same.

Import Restrictions on Chips WTAF

The term Trump uses is ‘tariffs.’

I propose, at least in the context of GPUs, that we call these ‘import restrictions,’ in order to point out what is happening here. We are (I believe wisely!) imposing ‘export restrictions’ as a matter of national security to ensure we get all the chips, and using diffusion regulations to force the chips to be hosted at home. Then we are threatening to impose ‘up to 100%’ tariffs on those same chips, because ‘they left us’ and the administration wants ‘to force them to come back,’ so that they’ll build the new factories here instead of there, with their own money, because of the threat.

Except for the fact that we really, really want the data centers at home.

The diffusion regulations are largely to force companies to create them at home.

Arthur B: Regarding possible US tariffs on Taiwan chips.

First, this is one that US consumers would directly feel, it's less politically feasible than tariffs on imports with lots of substitutes.

Second, data centers don't have to be located in the US. Canada is next door and has plenty of power.

Dhiraj: Taiwan made the largest single greenfield FDI in US history through TSMC. Now, instead of receiving gratitude for helping the struggling US chip industry, Taiwan faces potential tariffs. In his zero-sum worldview, there are no friends.

The whole thing is insane! Completely nuts. If he’s serious. And yes he said this on Joe Rogan previously, but he said a lot of things previously that he didn’t mean.

Whereas Trump’s worldview is largely the madman theory, at least for trade. If you threaten people with insane moves that would hurt both of you, and show that you’re willing to actually enact insane moves, then they are forced to give you what you want.

In this case, what Trump wants is presumably for TSMC to announce they are building more new chip factories in America. I agree that this would be excellent, assuming they were actually built. We have an existence proof that it can be done, and it would greatly improve our strategic position and reduce geopolitical risk.

I presume Trump is mostly bluffing, in that he has no intention of actually imposing these completely insane tariffs, and he will ultimately take a minor win and declare victory. But what makes it nerve wracking is that, by design, you never know. If you did know none of this would ever work.

Are You Short the Market

Unless, some people wondered, there was another explanation for all this…

The announcement came late on Monday, after Nvidia dropped 17%, on news that its chips were highly useful, with so many supposedly wise people on Wall Street going ‘oh yes that makes sense Nvidia should drop’ and those I know who understand AI often saying ‘this is crazy and yes I bought more Nvidia today.’

As in, there was a lot of not only saying ‘this is an overreaction,’ there was a lot of ‘this is a 17% wrong-way move in the most valuable stock in the world.’

When you imagine the opposite news, which would be that AI is ‘hitting a wall,’ one presumes Nvidia would be down, not up. And indeed, remember months ago?

Then when the announcement of the tariff threat came? Nvidia didn’t move.

Nvidia opened Tuesday up slightly off of the Monday close, and closed the day up 8.8%, getting a bit under half of its losses back.
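Percentage moves are not symmetric, so it is worth checking how much of the drop that bounce actually recovered. A minimal sketch, using the 16.91% Monday decline quoted earlier and the 8.8% Tuesday gain (the function names are mine):

```python
def fraction_recovered(drop: float, rebound: float) -> float:
    """Share of a fractional drop that a subsequent fractional rebound wins back."""
    after_drop = 1.0 - drop
    after_rebound = after_drop * (1.0 + rebound)
    return (after_rebound - after_drop) / drop


def rebound_for_full_recovery(drop: float) -> float:
    """Fractional gain needed to return to the pre-drop price."""
    return drop / (1.0 - drop)


if __name__ == "__main__":
    monday_drop, tuesday_gain = 0.1691, 0.088
    print(f"Recovered: {fraction_recovered(monday_drop, tuesday_gain):.1%}")
    print(f"Gain needed for full recovery: "
          f"{rebound_for_full_recovery(monday_drop):.1%}")
```

The gain applies to the lower post-drop price, so an 8.8% rebound wins back roughly 43% of a 16.91% loss, and getting all the way back would take a gain of a little over 20%.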

Nabeel Qureshi (Tuesday, 2pm): Crazy that people in this corner of X have a faster OODA loop than the stock market

This was the largest single-day loss of market value for a single stock in world history. It wiped out over $500 billion in market value. One had to wonder if it was partially insider trading.

Timothy Lee: Everyone says DeepSeek caused Nvidia's stock to crash yesterday. I think this theory makes no sense.

I don’t think that this was insider trading. The tariff threat was already partly known and thus priced in. It’s a threat rather than an action, which means it’s likely a bluff. That’s not a 17% move. Then we have the bounceback on Tuesday.

Even if I was certain that this was mostly an insider trading move instead of being rather confident it mostly or entirely wasn’t, I wouldn’t go as far as Eliezer does in the quote below. The SEC does many important things.

But I do notice that there’s a non-zero amount of ‘wait a minute’ that will occur to me the next time I’m hovering around the buy button in haste.

Eliezer Yudkowsky: I heard from many people who said, "An NVDA drop makes no sense as a Deepseek reaction; buying NVDA." So those people have now been cheated by insider counterparties with political access. They may make fewer US trades in the future.

Also note that the obvious meaning of this news is that someone told and convinced Trump that China will invade Taiwan before the end of his term, and the US needs to wean itself off Taiwanese dependence.

This was a $400B market movement, and if @SECGov can't figure out who did it then the SEC has no reason to exist.

TBC, I'm not saying that figuring it out would be easy or bringing the criminals to justice would be easy. I'm saying that if the US markets are going to be like this anyway on $400B market movements, why bother paying the overhead cost of having an SEC that doesn't work?

Roon: [Trump’s tariff threats about Taiwan] didn’t move overnight markets at all

which either means markets either:

- don’t believe it’s credible

- were pricing this in yesterday while internet was blaming the crash out on deepseek

I certainly don’t agree that the only interpretation of this news is ‘Trump expects an invasion of Taiwan.’ Trump is perfectly capable of doing this for exactly the reasons he’s saying.

Trump is also fully capable of making this threat with no intention of following through, in order to extract concessions from Taiwan or TSMC, perhaps of symbolic size.

Trump is also fully capable of doing this so that he could inform his hedge fund friends in advance and they could make quite a lot of money - with or without any attempt to actually impose the tariffs ever, since his friends would have now covered their shorts in this scenario.

Indeed do many things come to pass. I don’t know anything you don’t know.

DeepSeeking Safety

It would be a good sign if DeepSeek had a plan for safety, even if it wasn’t that strong?

Stephen McAleer (OpenAI): DeepSeek should create a preparedness framework/RSP if they continue to scale reasoning models.

Very happy to [help them with this]!

We don’t quite have nothing. This below is the first actively positive sign for DeepSeek on safety, however small.

Stephen McAleer (OpenAI): Does DeepSeek have any safety researchers? What are Liang Wenfeng's views on AI safety?

Sarah (YuanYuanSunSara): [DeepSeek] signed Artificial Intelligence safety commitment by CAICT (gov backed institute). You can see the whale sign at the bottom if you can't read their name Chinese.

This involves AI safety governance structure, safety testing, do frontier AGI safety research (include loss of control) and share it publicly.

None legally binding but it's a good sign.

Here is a chart with the Seoul Commitments versus China’s version.

It is of course much better that DeepSeek signed onto a symbolic document like this. That’s a good sign, whereas refusing would have been a very bad sign. But as always, talk is cheap, this doesn’t concretely commit DeepSeek to much, and even fully abiding by commitments like this won’t remotely be enough.

I do think this is a very good sign that agreements and coordination are possible. But if we want that, we will have to Pick Up the Phone.

Here’s a weird different answer.

Joshua Achiam (OpenAI, Head of Mission Alignment): I think a better question is whether or not science fiction culture in China has a fixation on the kinds of topics that would help them think about it. If Three-Body Problem is any indication, things will be OK.

It’s a question worth asking, but I don’t think this is a better question?

And based on the book, I do not think Three-Body Problem (conceptual spoilers follow, potentially severe ones depending on your perspective) is great here. Consider the decision theory that those books endorse, and what happens to us and also the universe as a result. It’s presenting all of that as essentially inevitable, and trying to think otherwise as foolishness. It’s endorsing that what matters is paranoia, power and a willingness to use it without mercy in an endless war of all against all. Also consider how they paint the history of the universe entirely without AGI.

I want to be clear that I fully agree with Bill Gurley that ‘no one at DeepSeek is an enemy of mine,’ indeed There Is No Enemy Anywhere, with at most notably rare exceptions that I invite to stop being exceptions.

However, I do think that if they continue down their current path, they are liable to get us all killed. And I for one am going to take the bold stance that I think that this is bad, and they should therefore alter their path before reaching their stated destination.

Mo Models Mo Problems

How committed is DeepSeek to its current path?

Read this quote Ben Thompson links to very carefully:

Q: DeepSeek, right now, has a kind of idealistic aura reminiscent of the early days of OpenAI, and it’s open source. Will you change to closed source later on? Both OpenAI and Mistral moved from open-source to closed-source.

Answer from DeepSeek CEO Liang Wenfeng: We will not change to closed source. We believe having a strong technical ecosystem first is more important.

This is from November. And that’s not a no. That’s actually a maybe.

Note what he didn’t say:

A Different Answer: We will not change to closed source. We believe having a strong technical ecosystem is more important.

The difference? His answer includes the word ‘first.’

He’s saying that first you need a strong technical ecosystem, and he believes that open models are the key to attracting talent and developing a strong technical ecosystem. Then, once that exists, you would need to protect your advantage. And yes, that is exactly what happened with… OpenAI.

I wanted to be sure that this translation was correct, so I turned to Wenfeng’s own r1, and asked the interviewer for the original statement, which was:

梁文锋:我们不会闭源。我们认为先有一个强大的技术生态更重要。

r1’s translation: "We will not close our source code. We believe that establishing a strong technological ecosystem must come first."

先 (xiān): "First," "prioritize."

生态 (shēngtài): "Ecosystem" (metaphor for a collaborative, interconnected environment).

To quote r1:

Based solely on this statement, Liang is asserting that openness is non-negotiable because it is essential to the ecosystem’s strength. While no one can predict the future, the phrasing suggests a long-term commitment to open-source as a core value, not a temporary tactic. To fully guarantee permanence, you’d need additional evidence (e.g., licensing choices, governance models, past behavior). But as it stands, the statement leans toward "permanent" in spirit.

I interpret this as a statement of a pragmatic motivation - if that motivation changes, or a more important one is created, actions would change. For now, yes, openness.

The Washington Post had a profile of DeepSeek and Liang Wenfeng. One note is that the hedge fund that they’re a spinoff from has donated over $80 million to charity since 2020, which makes it more plausible DeepSeek has no business model, or at least no medium-term business model.

But that government embrace is new for DeepSeek, said Matt Sheehan, an expert on China’s AI industry at the Carnegie Endowment for International Peace.

“They were not the ‘chosen one’ of Chinese AI start-ups,” said Sheehan, noting that many other Chinese start-ups received more government funding and contracts. “DeepSeek took the world by surprise, and I think to a large extent, they took the Chinese government by surprise.”

Sheehan added that for DeepSeek, more government attention will be a “double-edged sword.” While the company will probably have more access to government resources, “there’s going to be a lot of political scrutiny on them, and that has a cost of its own,” he said.

Yes. This reinforces the theory that DeepSeek’s ascent took China’s government by surprise, and they had no idea what v3 and r1 were as they were released. Going forward, China is going to be far more aware. In some ways, DeepSeek will have lots of support. But there will be strings attached.

That starts with the ordinary censorship demands of the CCP.

If you self-host r1, and you ask it about any of the topics the CCP dislikes, r1 will give you a good, well-balanced answer. If you ask on DeepSeek’s website, it will censor you via some sort of cloud-based monitoring, which works if you’re closed source, but DeepSeek is trying to be fully open source. Something has to give, somewhere.

Also, even if you’re using the official website, it’s not like you can’t get around it.

Justine Moore: DeepSeek's censorship is no match for the jailbreakers of Reddit

I mean, that was easy.

Joshua Achiam (OpenAI Head of Mission Alignment): This has deeply fascinating consequences for China in 10 years - when the CCP has to choose between allowing their AI industry to move forward, or maintaining censorship and tight ideological control, which will they choose?

And if they choose their AI industry, especially if they favor open source as a strategy for worldwide influence: what does it mean for their national culture and government structure in the long run, when everyone who is curious can find ways to have subversive conversations?

Ten years to figure this out? If they’re lucky, they’ve got two. My guess is they don’t.

I worry about the failure to feel the AGI or even the AI here from Joshua Achiam, given his position at OpenAI. Ten years is a long time. Sam Altman expects AGI well before that. This goes well beyond Altman’s absurd position of ‘AGI will be invented and your life won’t noticeably change for a long time.’ Choices are going to need to be made. Even if AI doesn’t advance much from here, choices will have to be made.

As I’ve noted before, censorship at the model layer is expensive. It’s harder to do, and when you do it you risk introducing falsity into a mind in ways that will have widespread repercussions. Even then, a fine tune can easily remove any gaps in knowledge, or any reluctance to discuss particular topics, whether they are actually dangerous things like building bombs or things that piss off the CCP like a certain bear that loves honey.

I got called out on Twitter for supposed cognitive dissonance on this - look at China’s actions, they clearly let this happen. Again, my claim is that China didn’t realize what this was until after it happened, they can’t undo it (that’s the whole point!) and they are of course going to embrace their national champion. That has little to do with what paths DeepSeek is allowed to follow going forward.

(Also, since it was mentioned in that response, I should note - there is a habit of people conflating ‘pause’ with ‘ever do anything to regulate AI at all.’ I do not believe I said anything about a pause - I was talking about whether China would let DeepSeek continue to release open weights as capabilities improve.)

What If You Wanted to Restrict Already Open Models

Before I further cover potential policy responses, there is a question we must ask this week. I very much do not wish to do this at this time, but suppose in the future we did want to restrict use of a particular already open weights model and its derivatives, or all models in some reference class. What could we actually do?

Obviously we couldn’t fully ban it in terms of preventing determined people from having access. And if you try to stop them and others don’t, there are obvious problems with that, including ‘people have internet connections.’

However, that does not mean that we would have actual zero options.

Steve Sailer: Does open source, low cost DeepSeek mean that there is no way, short of full-blown Butlerian Jihad against computers, which we won't do, to keep AI bottled up, so we're going to find out if Yudkowsky's warnings that AI will go SkyNet and turn us into paperclips are right?

Gabriel: It's a psy-op

If hosting a 70B is illegal:

- Almost all individuals stop

- All companies stop

- All research labs stop

- All compute providers stop

Already huge if limited to US+EU

Can argue about whether good/bad, but not about the effect size.

You can absolutely argue about effect size. What you can’t argue is that the effect size isn’t large. It would make a big difference for many practical purposes.

In terms of my ‘Levels of Friction’ framework (post forthcoming) this is moving the models from Level 1 (easy to access) to at least Level 3 (annoying with potential consequences.) That has big practical consequences, and many important use cases will indeed go away or change dramatically.

What Level 3 absolutely won’t do, here or elsewhere, is save you from determined people who want it badly enough, or from sufficiently capable models that do not especially care what you tell them not to do or where you tell them not to be. Nor will it save you in scenarios where the law is no longer especially relevant, and the government or humanity is very much having a ‘do you feel in charge?’ moment. Any of those alone would, in many scenarios, be enough to doom you to varying degrees. If that’s what dooms you and the model is already open, well, you’re pretty doomed.

If for whatever reason the government or humanity decides (or realizes) that this is insufficient, then there are two possibilities. Either the government or humanity is disempowered and you hope that this works out for humanity in some way. Or we use the necessary means to push the restrictions up to Level 4 (akin to rape and murder) or Level 5 (akin to what we do to stop terrorism or worse), in ways I assure you that you are very much not going to like - but the alternative might be worse, and the decision might very much not be up to either of us.

Actions have consequences. Plan for them.

So What Are We Going to Do About All This?

Adam Ozimek was the first I saw point out this time around with DeepSeek (I and many others echo this a lot in general) that the best way for the Federal Government to ensure American dominance of AI is to encourage more high-skilled immigration and brain drain the world. If you don’t want China to have DeepSeek, export controls are great and all, but how about let’s straight up steal their engineers. But y’all, and by y’all I mean Donald Trump, aren’t ready for that conversation.

It is highly unfortunate that David Sacks, the person seemingly in charge of what AI executive orders Trump signs, is so deeply confused about what various provisions actually did or would do, and on our regulatory situation relative to that of China.

David Sacks: DeepSeek R1 shows that the AI race will be very competitive and that President Trump was right to rescind the Biden EO, which hamstrung American AI companies without asking whether China would do the same. (Obviously not.) I’m confident in the U.S. but we can’t be complacent.

Donald Trump: The release of DeepSeek AI from a Chinese company should be a wake-up call for our industries that we need to be laser-focused on competing to win.

…

We’re going to dominate. We’ll dominate everything.

This is the biggest danger of all - that we go full Missile Gap jingoism and full-on race to ‘beat China,’ and act like we can’t afford to do anything to ensure the safety of the AGIs and ASIs we plan on building, even pressuring labs not to make such efforts in private, or threatening them with antitrust or other interventions for trying.

The full Trump clip is hilarious, including him saying they may have come up with a cheaper method but ‘no one knows if it is true.’ His main thrust is, oh, you made doing AI cheaper and gave it all away to us for free, thanks, that’s great! I love paying less money for things! And he’s presumably spinning, but he’s also not wrong about that.

I also take some small comfort in him framing revoking the Biden EO purely in terms of wokeness. If that’s all he thinks was bad about it, that’s a great sign.

Harlan Stewart: “Deepseek R1 is AI's Sputnik moment”

Sure. I guess it’s like if the Soviets had told the world how to make their own Sputniks and also offered everyone a lifetime supply of free Sputniks. And the US had already previously figured out how to make an even bigger Sputnik.

Yishan: I think the Deepseek moment is not really the Sputnik moment, but more like the Google moment.

If anyone was around in ~2004, you'll know what I mean, but more on that later.

I think everyone is over-rotated on this because Deepseek came out of China. Let me try to un-rotate you.

Deepseek could have come out of some lab in the US Midwest. Like say some CS lab couldn't afford the latest nVidia chips and had to use older hardware, but they had a great algo and systems department, and they found a bunch of optimizations and trained a model for a few million dollars and lo, the model is roughly on par with o1. Look everyone, we found a new training method and we optimized a bunch of algorithms!

Everyone is like OH WOW and starts trying the same thing. Great week for AI advancement! No need for US markets to lose a trillion in market cap.

The tech world (and apparently Wall Street) is massively over-rotated on this because it came out of CHINA.

…

Deepseek is MUCH more like the Google moment, because Google essentially described what it did and told everyone else how they could do it too.

…

There is no reason to think nVidia and OAI and Meta and Microsoft and Google et al are dead. Sure, Deepseek is a new and formidable upstart, but doesn't that happen every week in the world of AI? I am sure that Sam and Zuck, backed by the power of Satya, can figure something out. Everyone is going to duplicate this feat in a few months and everything just got cheaper. The only real consequence is that AI utopia/doom is now closer than ever.

I believe that alignment, and getting a good outcome for humans, was already going to be very hard. It’s going to be a lot harder if we actively try to get ourselves killed like this, and turn even what would have been relatively easy wins into losses. Whereas no, actually, if you want to win that has to include not dying, and also doing the alignment work helps you win, because it is the only way you can (sanely) get to deploy your AIs to do the most valuable tasks.

Trump’s reaction of ‘we’ll dominate everything’ is far closer to correct. Our ‘lead’ is smaller than we thought, DeepSeek will be real competition, but we are very much still in the dominant position. We need to not lose sight of that.

The Washington Post covers panic in Washington, and attempts to exploit this situation to do the opposite of wise policy.

Tiku, Dou, Zakrzewski and De Vynck: Tech stocks dropped Monday. Spooked U.S. officials, engineers and investors reconsidered their views on the competitive threat posed by China in AI, and how the United States could stay ahead.

While some Republicans and the Trump administration suggested the answer was to restrain China, prominent tech industry voices said DeepSeek’s ascent showed the benefits of openly sharing AI technology instead of keeping it closely held.

This shows nothing of the kind, of course. DeepSeek fast followed, copied our insights and had insights of their own. Our insights were held insufficiently closely to prevent this, which at that stage was mostly unavoidable. They have now given away many of those new valuable insights, which we and others will copy, and also made the situation more dangerous. We should exploit that and learn from it, not make the same mistake.

Robert Sterling: Might be a dumb question, but can’t OpenAI, Anthropic, and other AI companies just incorporate the best parts of DeepSeek’s source code into their code, then use the massive GPU clusters at their disposal to train models even more powerful than DeepSeek?

Am I missing something?

Peter Wildeford: Not a dumb question, this is 100% correct

And they already have more powerful models than Deepseek

I fear we are caught between two different insane reactions.

Those calling on us to abandon our advantage in compute by dropping export controls, or our advantage in innovation and access by opening up our best models, are advocating surrender and suicide, both to China and to the AIs.

Those who are going full jingoist are going to get us all killed the classic way.

Restraining China is a good idea if implemented well, but insufficiently specified. Restrain them how? If this means export controls, I strongly agree - and then ask why we are considering imposing those controls on ourselves via tariffs? What else is available? And I will keep saying ‘how about immigration to brain drain them’ because it seems wrong to ignore the utterly obvious.

Chamath Palihapitiya says it’s inference time, we need to boot up our allies with it as quickly as possible (I agree) and that we should also boot up China by lifting export controls on inference chips, and also focus on supplying the Middle East. He notes he has a conflict of interest here. It seems not especially wise to hand over serious inference compute if we’re in a fight here. With the way these models are going, there’s a decent amount of fungibility between inference and training, and also there’s going to be tons of demand for inference. Why is it suddenly important to Chamath that the inference be done on chips we sold them? Capitalist insists rope markets must remain open during this trying time, and so on. (There’s also talk about ‘how asleep we’ve been for 15 years’ because we’re so inefficient and seriously everyone needs to calm down on this kind of thinking.)

So alas, in the short run, we are left scrambling to prevent two equal and opposite deadly mistakes we seem to be dangerously close to collectively making.

A panic akin to the Missile Gap leading into a full jingoistic rush to build AGI and then artificial superintelligence (ASI) as fast as possible, in order to ‘beat China,’ without having even a plausible plan for how the resulting future equilibrium has value, or how humans remain alive and in meaningful control of the future afterwards.

A full-on surrender to China by taking down the export controls, and potentially also to the idea that we will allow our strongest and best AIs and AGIs and thus even ASIs to be open models, ‘because freedom,’ without actually thinking about what this would physically mean, and thus again with zero plan for how to ensure the resulting equilibrium has value, or how humans would survive let alone retain meaningful control over the future.

The CEO of DeepSeek himself said in November that the export controls and inability to access chips were the limiting factors on what they could do.

Compute is vital. What did DeepSeek ask for with its newfound prestige? Support for compute infrastructure in China.

Do not respond by being so suicidal as to remove or weaken those controls.

Or, to shorten all that:

We might do a doomed jingoistic race to AGI and get ourselves killed.

We might remove the export controls and give up our best edge against China.

We might give up our ability to control AGI or the future, and get ourselves killed.

Don’t do those things!

Do take advantage of all the opportunities that have been opened up.

And of course:

Don’t panic!

Given the 10x-100x increase in output per request reasoning models demand, I'm surprised not to hear more about inference-specific hardware like Groq. People only want to talk about Nvidia

Sadly I think it will take an actual AI-caused humanitarian disaster for people to understand the risks. It is very hard for normies to buy arguments that any future technology is going to be dangerous when its current incarnation is, well, not especially dangerous.