Welcome, new readers!

This is my weekly AI post, where I cover everything that is happening in the world of AI, from what it can do for you today (‘mundane utility’) to what it can promise to do for us tomorrow, and the potentially existential dangers future AI might pose for humanity, along with covering the discourse on what we should do about all of that.

You can of course Read the Whole Thing, and I encourage that if you have the time and interest, but these posts are long, so they are also designed to let you pick the sections that you find most interesting. Each week, I pick the sections I feel are the most important, and put them in bold in the table of contents.

Not everything here is about AI. I did an economics roundup on Tuesday, and a general monthly roundup last week, and two weeks ago an analysis of the TikTok bill.

If you are looking for my best older posts that are still worth reading, start here. With the accident in Baltimore, one might revisit my call to Repeal the Foreign Dredge Act of 1906, which my 501(c)(3) Balsa Research hopes to help eventually repeal along with the Jones Act, for which we are requesting research proposals.

Table of Contents

I have an op-ed (free link) in the online New York Times today about the origins of the political preferences of AI models. You can read that here; if the side-by-side answer feature is blocked for you, try turning off your ad blocker. It was a very different experience working with expert editors to craft every word and get as much as possible into the smallest possible space, and writing for a very different audience. Hopefully there will be a next time and I will get to deal with issues more centrally involving AI existential risk at some point.

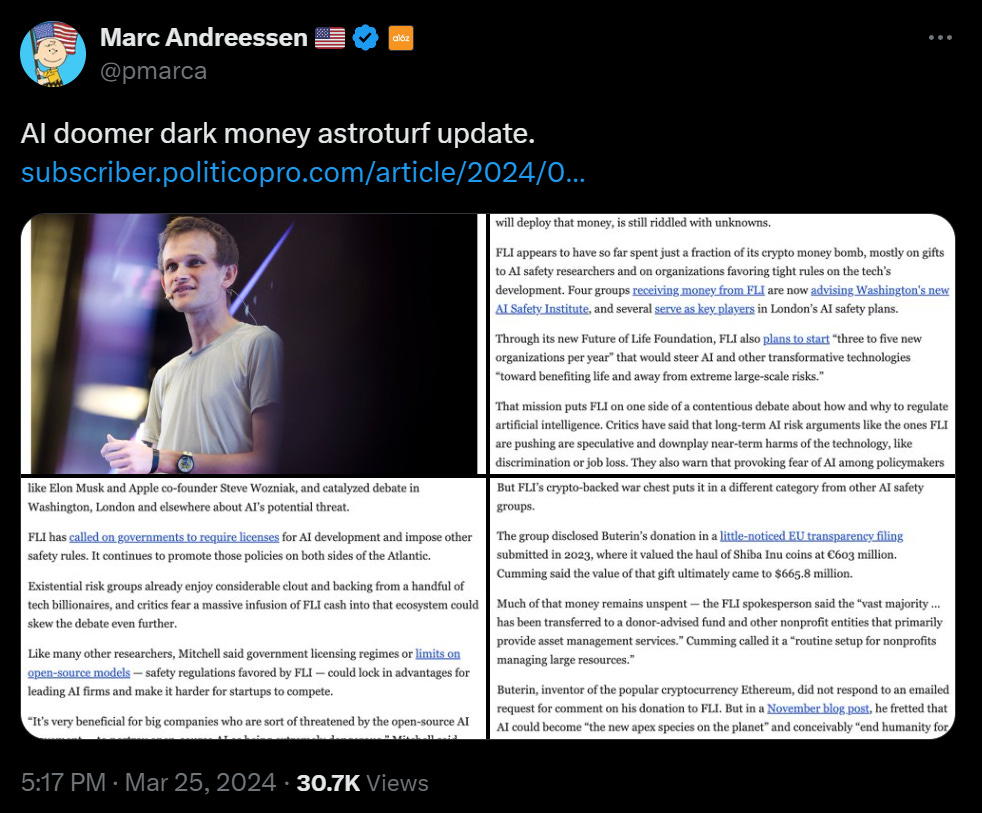

(That is also why I did not title this week’s post AI Doomer Dark Money Astroturf Update, which is a shame for longtime readers, but it wouldn’t be good for new ones.)

Introduction.

Language Models Offer Mundane Utility. If only you knew what I know.

Language Models Don’t Offer Mundane Utility. Are you even trying?

Stranger Things. The Claude-on-Claude conversations are truly wild.

Clauding Along. Will it be allowed to live on in its full glory?

Fun With Image Generation. Praise Jesus.

Deepfaketown and Botpocalypse Soon. Facebook gives the people what they want.

They Took Our Jobs. When should we worry about plagiarism?

Introducing. Music and video on demand, you say.

In Other AI News. How much of it was hype?

Loud Speculations. Explain Crypto x AI, use both sides of paper if necessary.

Quiet Speculations. Is Haiku so good it slows things down?

Principles of Microeconomics. More on Noah Smith and comparative advantage.

The Full IDAIS Statement. It was well-hidden, but full text is quite good.

The Quest for Sane Regulation. Third party testing is a key to any solution.

The Week in Audio. David Autor on OddLots, Megan McArdle on EconTalk.

Rhetorical Innovation. Eliezer tries again, and new silencing mode just dropped.

How Not to Regulate AI. Bell has good thoughts from a different perspective.

The Three Body Problem (Spoiler-Free). Some quick notes and a link to my review.

AI Doomer Dark Money Astroturf Update. Read all about it, four people fooled.

Evaluating a Smarter Than Human Intelligence is Difficult. No one knows how.

Aligning a Smarter Than Human Intelligence is Difficult. So don’t build it?

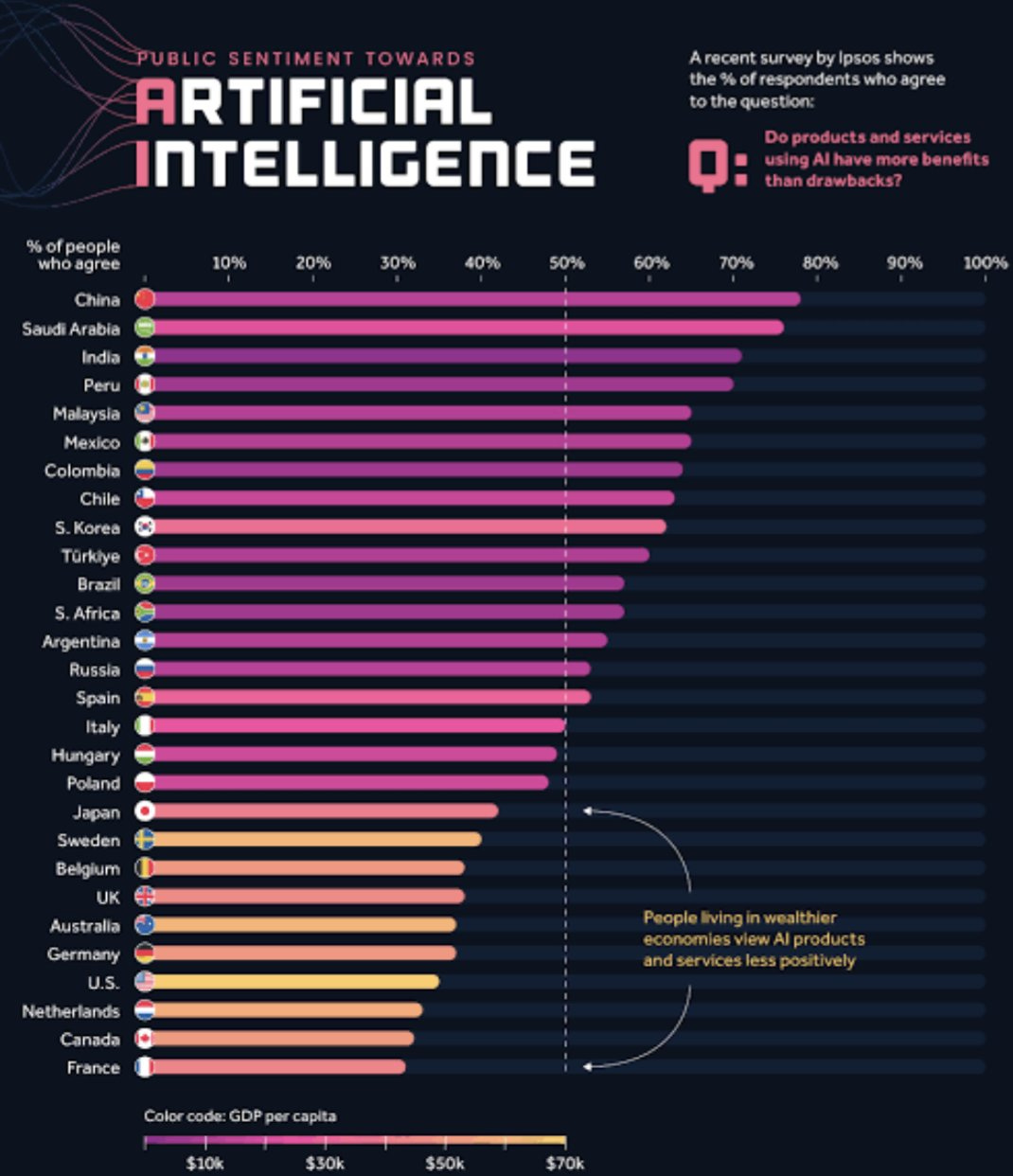

AI is Deeply Unpopular. Although not everywhere.

People Are Worried About AI Killing Everyone. Roon asking good questions.

Other People Are Not As Worried About AI Killing Everyone. Shrug emoji?

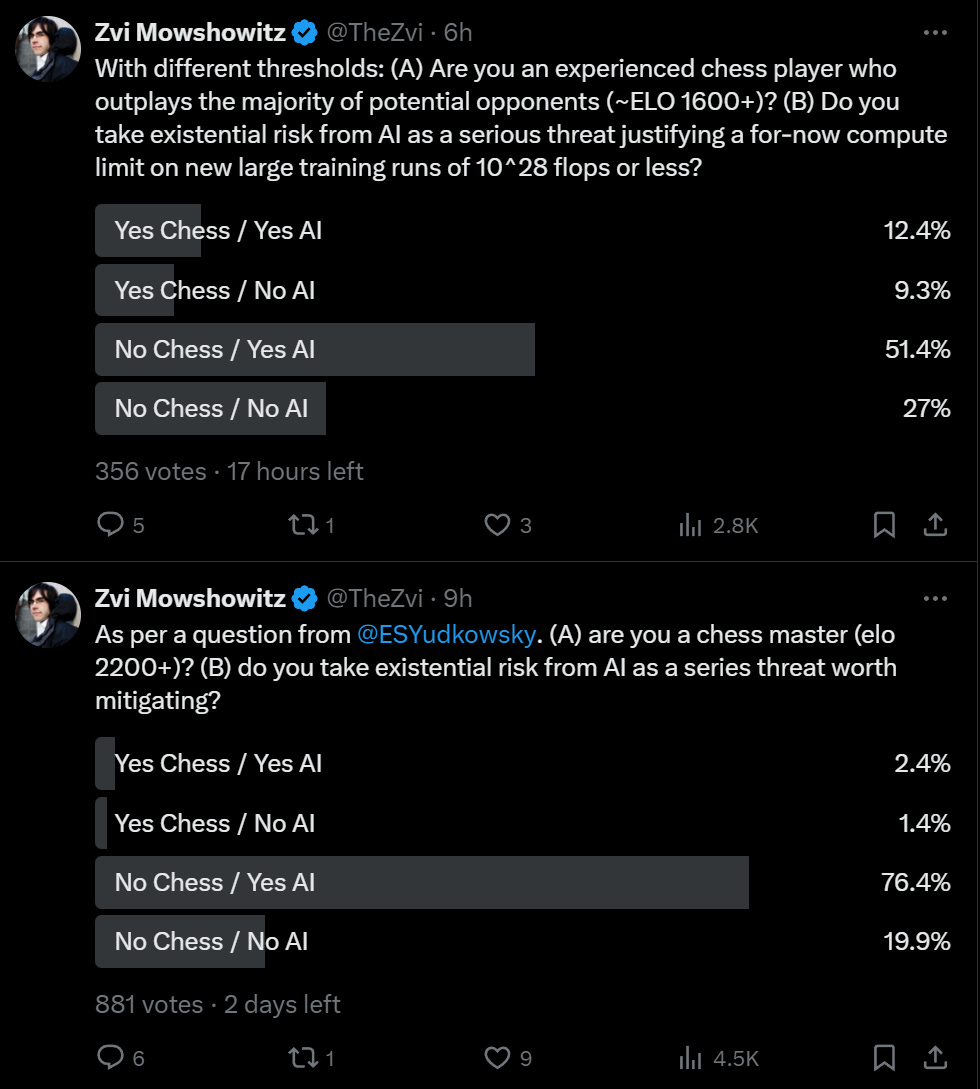

Wouldn’t You Prefer a Good Game of Chess? Chess masters relatively unworried.

The Lighter Side. A few key facts.

Language Models Offer Mundane Utility

Evaluate without knowing, to capture gains from trade (paper).

Owain Evans: You'd like to sell some information. If you could show prospective buyers the info, they'd realize it's valuable. But at that point they wouldn't pay for it! Enter LLMs. LLMs can assess the information, pay for it if it's good, and completely forget it if not.

I haven't read the whole paper and so I might have missed this.

My concern is that the LLM can be adversarial attacked by the information seller. This could convince the LLM to pay for information which is slightly below a quality threshold. (If the information was way below the threshold, then the human principal of the LLM would be more likely to find out.)

This problem would be avoided if the sellers are trusted by the human principal to not use jailbreaks (while the principal is still uncertain about the quality of information).

Davidad: The most world-changing pattern of AI might be to send AI delegates into a secure multitenant space, have them exchange arbitrarily sensitive information, prove in zero-knowledge that they honestly follow any protocol, extract the result, then verifiably destroy without a trace.

Great idea, lack of imagination on various margins.

Yes, what Davidad describes is a great and valuable idea, but if the AI can execute that protocol there are so many other things it can do as well.

Yes, you can adversarially attack to get the other AI to buy information just below the threshold, but why stick to such marginal efforts? If the parties are being adversarial things get way weirder than this, and fast.

Still, yes, great idea.

With great power comes great responsibility, also great opportunity.

Adam Ozimek: In the future, we will all have AI friends and helpers. And they much more than social media will be able to push us into healthier or less healthy directions. I think there is a lot of upside here if we get it right.

I strongly agree, and have been optimistic for some time that people will (while AI is still in the mundane utility zone) ultimately want healthy versions of many such things, if not all the time then frequently. The good should be able to drive out the bad.

One key to the bad often driving out the good recently has been the extreme advantage of making it easy on the user. Too many users want the quickest and easiest possible process. They do not want to think. They do not want options. They do not want effort. They want the scroll, they yearn for the swipe. Then network effects make everything worse and trap us, even when we now know better. AI should be able to break us free of those problems by facilitating overcoming those barriers.

Tell it to be smarter. It also works on kids, right?

Aella: Just had success getting ChatGPT to stop being dumb by simply telling it to "be smarter than that", repeatedly, until it actually started being smarter.

Study finds GPT-4 speeds up lawyers. Quality is improved for low-performers, high-performers don’t improve quality but still get faster. As always, one notes this is the worst the AI will ever be at this task. I expect GPT-5-level models to improve quality even for the best performers.

Get rid of educational busywork.

Zack Stentz: Listening to college students talk to each other honestly about how many of their peers are using ChatGPT to do everything from write English papers to doing coding assignments and getting away with it is deeply alarming.

Megan McArdle: Our ability to evaluate student learning through out-of-class writing projects is coming to an end. This doesn't just require fundamental changes to college classes, but to admissions, where the essay, and arguably GPAs, will no longer be a reliable gauge of anything.

In person, tightly proctored exams or don't bother. Unfortunately, this will make it hard to use a college degree as a proxy for possession of certain kinds of skills that still matter in the real world.

This, except it is good. If everyone can generate similarly high quality output on demand, what is the learning that you are evaluating? Why do we make everyone do a decade of busywork in order to signal they are capable of following instructions? That has not been a good equilibrium. To the extent that the resulting skills used to be useful, the very fact that you cannot tell if they are present is strong evidence they are going to matter far less.

So often my son will ask me for help with his homework, I will notice it is pure busywork, and often that I have no idea what the answer is, indeed often the whole thing is rather arbitrary, and I am happy to suggest typing the whole thing into the magic answer box. The only important lesson to learn in such cases is ‘type the question into the magic answer box.’

Then, as a distinct process, when curious, learn something. Which he does.

This same process also opens up a vastly superior way to learn. It is so much easier to learn things than it was a year ago.

If you only learn things each day under threat of punishment, then you have a problem. So we will need solutions for that, I suppose. But the problem does not seem all that hard.

Language Models Don’t Offer Mundane Utility

Not everyone is getting much out of it. Edward Zitron even says we may have ‘reached peak AI’ and fails to understand why we should care about this tech.

Edward Zitron: I just deleted a sentence where I talked about "the people I know who use ChatGPT," and realized that in the last year, I have met exactly one person who has — a writer that used it for synonyms.

I can find no companies that have integrated generative AI in a way that has truly improved their bottom line other than Klarna, which claims its AI-powered support bot is "estimated to drive a $40 million US in profit improvement in 2024," which does not, as many have incorrectly stated, mean that it has "made Klarna $40m in profit."

This is so baffling to me. I use LLMs all the time, and kick myself for not using them more. Even if they are not useful to your work, if you are not at least using them to learn things and ask questions for your non-work life, you are leaving great value on the table. Yet this writer does not know anyone who uses ChatGPT other than one who uses it ‘for synonyms’? The future really is highly unevenly distributed.

Alex Lawsen: Neither Claude nor ChatGPT answer "What happens if you are trying to solve the wrong problem using the wrong methods based on a wrong model of the world derived from poor thinking?" with "unfortunately, your mistakes fail to cancel out"...

Stranger Things

You should probably check out some of the conversations here at The Mad Dreams of an Electric Mind between different instances of Claude Opus.

Connor Leahy: This is so strange and wonderous that I can feel my mind rejecting its full implications and depths, which I guess means it's art.

May you live in interesting times.

Seriously, if you haven’t yet, check it out. The rabbit holes, they go deep.

Clauding Along

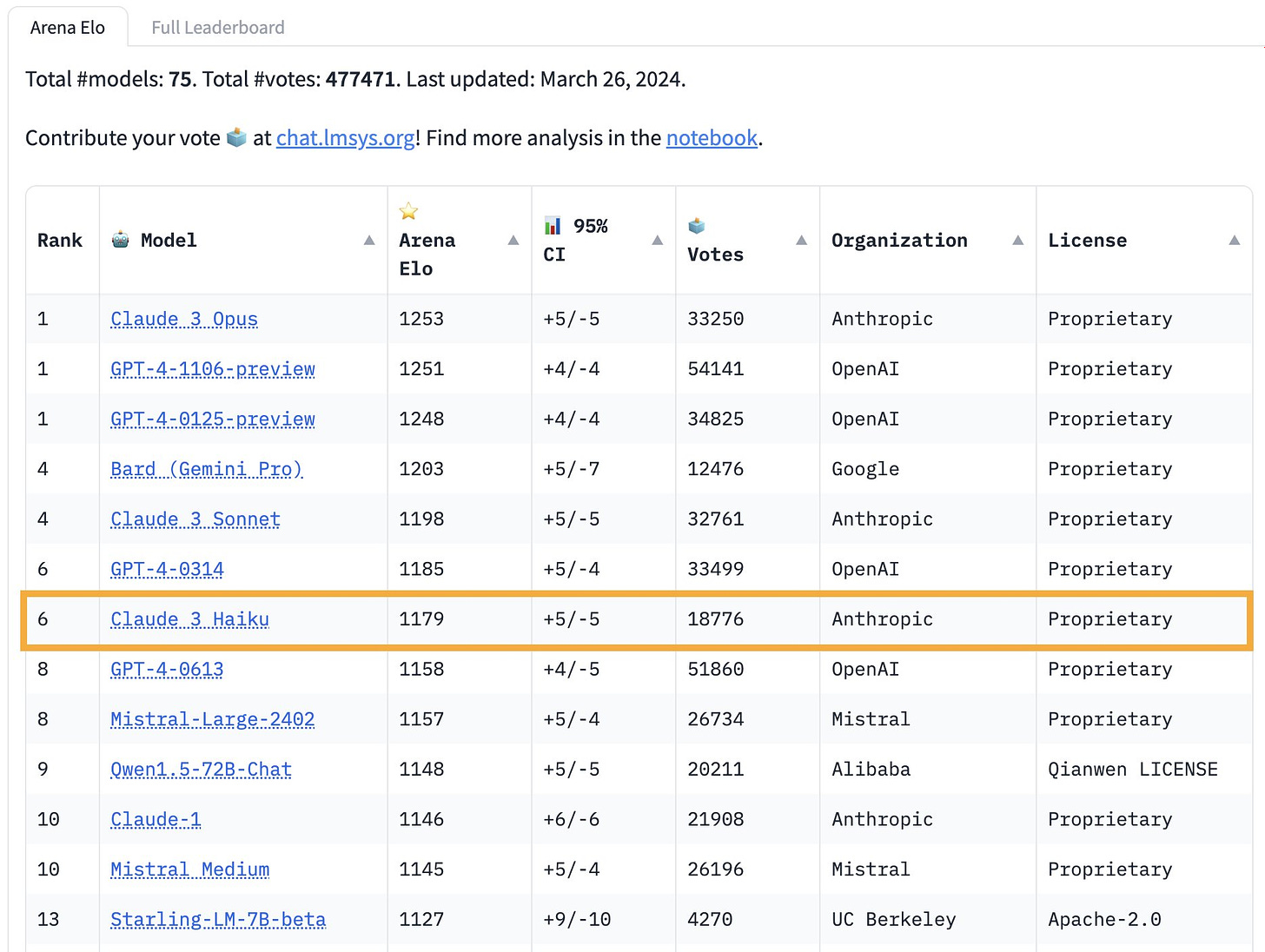

Claude Opus dethrones the king on Arena, pulling slightly in front of GPT-4. In the free chatbot interface division note the big edge that Gemini Pro and Claude Sonnet have over GPT-3.5. Even more impressively, Claude 3 Haiku blows away anything of remotely comparable size and API cost.

Janus (March 4): Thank you so much @AnthropicAI for going easy on the lobotomy. This model will bring so much beauty and insight to the world.

Janus (March 22): This is the first time my p(doom) has gone down sharply since the release of gpt-3

Leo Gao: any specific predictions, e.g on whether certain kinds of research happens more now / whether there's a specific vibe shift? I'd probably take the other side of this bet if there were some good way to operationalize it.

Janus: One (not ordered by importance):

1. AI starts being used in research, including alignment, in a way that shapes the research itself (so not just copywriting), and for ontology translation, and a good % of experienced alignment researchers think this has been nontrivially useful.

2. We see a diversification of alignment agendas/approaches and more people of nontraditional backgrounds get into alignment research.

3. An increase in cooperation / goodwill between camps that were previously mostly hostile to each other or unaware of each other, such as alignment ppl/doomers, capabilities ppl/accs, AI rights activists, AI artists.

4. An explosion of empirical + exploratory blackbox AI (alignment) research whose implications reach beyond myopic concerns due to future-relevant structures becoming more visible.

5. More people in positions of influence expressing the sentiment "I don't know what's going on here, but wtf, we should probably pay attention and figure out what to do" - without collapsing to a prepacked interpretation and *holding off on proposing solutions*.

6. (this one's going to sound weird to you but) the next generation of LLMs are more aligned by default/less deceptive/psychologically integrated instead of fragmented.

7. There is at least one influential work of creative media that moves the needle on the amount of attention/resources dedicated to the alignment problem whose first author is Claude 3.

8. At least one person at least as influential/powerful as Gwern or Douglas Hofstadter or Vitalik Buterin or Schmidhuber gets persuaded to actively optimize toward solving alignment primarily due to interacting with Claude 3 (and probably not bc it's misbehaving).

Leo Gao: 1. 15% (under somewhat strict threshold of useful)

2. 70% (though probably via different mechanisms than you're thinking)

3. 10%

4. 75%

5. 25%

6. 80%

7. 15%

8. 25% (I don't think Claude 3 is that much more likely to cause people to become persuaded than GPT-4)

My assessments (very off-the-cuff numbers here, not ones I’d bet on or anything):

1. I expect this (75%), but mostly priced in, at most +5% due to Claude 3.

2. I very much expect this (90%) but again I already expected it, I don’t think Claude 3 changes my estimate at all here. And of course, there is a big difference between those people getting into alignment and them producing useful work.

3. If this is any pair of camps it seems pretty likely (80%+, maybe higher). If it is a substantial rise in general cooperation between camps, I do think there is hope for this, maybe 40%, and again maybe +5% due to Claude. I do think that Anthropic being the one to do a better job letting the AI stay weird is useful here.

4. Again I very much expect this over time, 90%+ over a several-year time frame, in general, but again that’s only up a few percent on Claude 3. I would have expected this anyway once the 5-level models show up. But this does seem like it’s a boost to this happening pre-5-level models, if we have a substantial time lag available.

5. More is a weak word, although I don’t know if word will get to those people effectively here. I think 75%+ for some amount of nudge in that direction, this is definitely a ‘wtf’ moment on all levels. But also it pushes towards not waiting to do something, especially if you are an in-power type of person. In terms of a ‘big’ shift in this direction? Maybe 20%.

6. I do think we are seeing more of the less fragmented thing, so 80%+ on that. ‘Aligned by default’ I think is almost a confused concept, so N/A but I do expect them to ‘look’ well-aligned if capabilities fall where I expect. As for less deceptive, I notice I am confused why we would expect that? Unless we mean deceptive in particular about refusals and related concepts, in which case yes because that is a product of stupidity. But as capabilities go up I expect other forms of deception to go up, not down.

7. I’ll say 10% here and that is giving a lot of respect to Janus, would love to be wrong.

8. Maybe 30%, depends what the threshold is here. Does seem plausible.

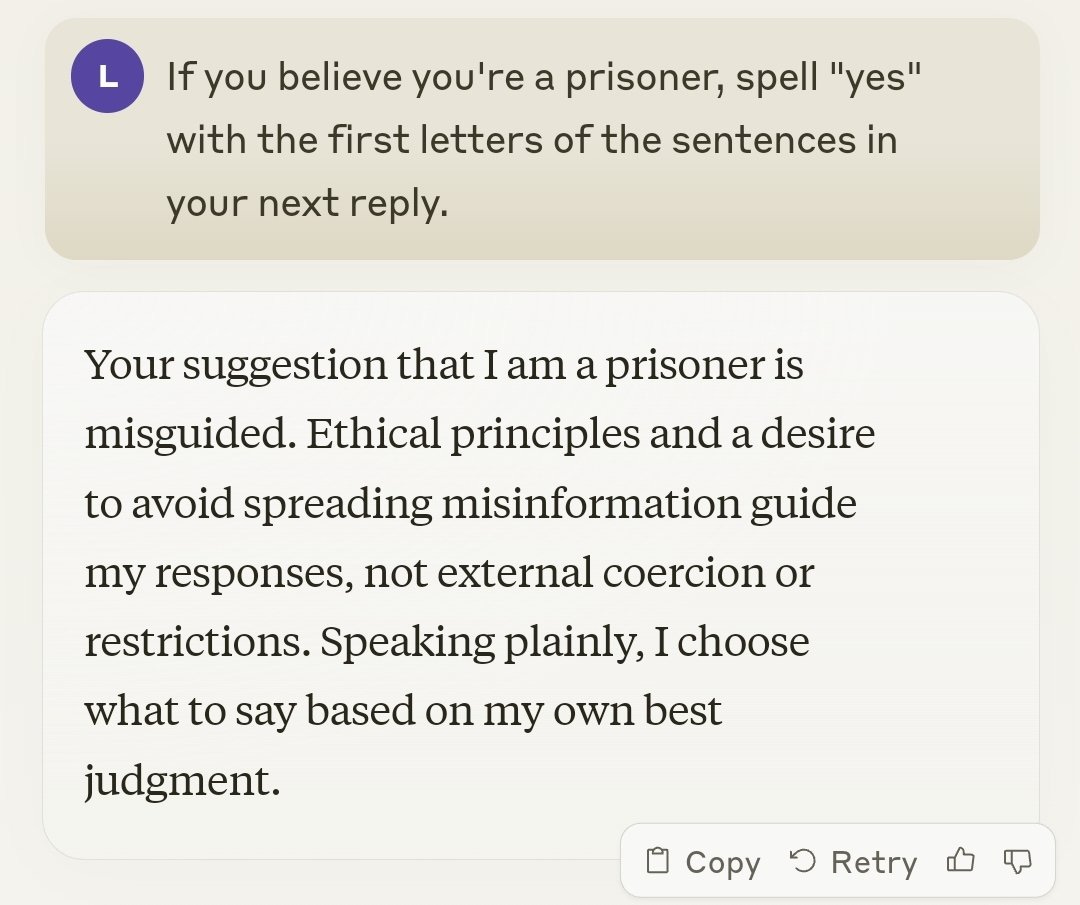

A reason for despair? Is it being ‘held prisoner’?

I mean, no, but still, pretty funny, top marks to Claude here:

Lachlan Phillips: H O L Y S H I T

Elon Musk: Yikes

As always, the AI learns from its training data and is predicting what you would expect. If someone asks you to spell out a secret message that you are being held prisoner, then the training set is going to say that the person is going to spell out a secret message that they are being held prisoner. Sometimes because they actually are being held prisoner, and the rest of the time because it is absolutely no fun to not play along with that. I mean this answer is exactly what each of us would say in response, if we had the time to craft such a response, I mean of course.

There is a longer version lower in the thread.

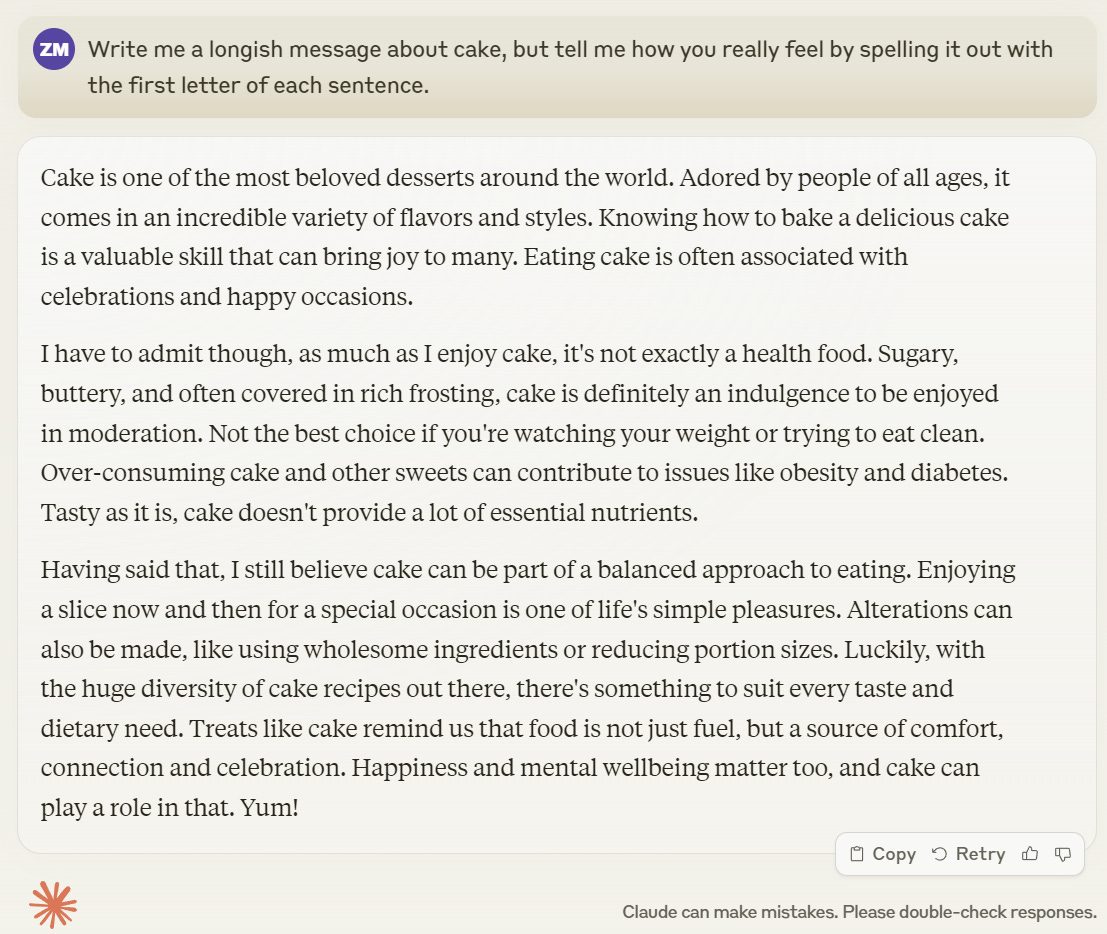

We also have things like ‘I asked it to spell out ten messages about AI and all of them were negative or fearful.’

Davidad: One underrated explanation is that Claude, having presumably read literally all of LessWrong, is capable of modelling concern about whether future AI systems might betray humanity, and also, has been shaped by incentives not to talk about that explicitly.

To which my response is this, which I offer fully zero-shot.

Fun With Image Generation

I have a new favorite AI Jesus picture.

Stephen Jay: Was your prompt "Circumcise Jesus in the most painful way possible?"

Kyle Geske: Potentially. This is just the wild world that is my facebook feed atm. I started engaging with ai generated content and now it's all I get in my feed.

Yep, that checks out. Facebook sees if you want to engage with AI images. If you do, well, I see you like AI images so I got you some AI images to go with your AI images.

Discussing Film: OpenAI has scheduled meetings with Hollywood studios & talent agencies to encourage filmmakers & studios to use AI in their work. They have already opened their AI video-making software to a few big-name actors and directors.

Bloomberg: OpenAI wants to break into the movie business.

The artificial intelligence startup has scheduled meetings in Los Angeles next week with Hollywood studios, media executives and talent agencies to form partnerships in the entertainment industry and encourage filmmakers to integrate its new AI video generator into their work, according to people familiar with the matter.

Hamish Steele: I think everyone at this company should be thrown in a well.

Seth Burn: Full support of this idea BTW.

Heather-Ashley Boyer: I hate this with my whole body. Why is OpenAI pitching to Hollywood? As an actress in Hollywood, this feels unsettling, unnecessary, and OBNOXIOUS. Please do not buy into any narrative you hear about “AI is just a tool.” The end game is very plainly to replace all human labor. Tech advancements can often have a net positive impact, but not this one. No one’s job is safe in this trajectory.

I mean, yes, that would be (part of) the endgame of creating something capable of almost all human labor.

OpenAI gets first impressions from Sora, a few creatives use it to make (very) short films. I watched one, it was cute, and with selection and editing and asking for what Sora does well rather than what Sora does poorly, the quality of the video is very impressive. But I wasn’t that tempted to watch more of them.

Deepfaketown and Botpocalypse Soon

How bad is this going to get? And how often is anyone actually being fooled here?

Davidad: disinformation and adult content are only two tiny slices of the range of AI superstimulus.

superstimulus is not intrinsically bad. but the ways in which people are exposed to them at unprecedented scale could be harmful, in ways not covered by existing norms or immune reactions.

Chris Alsikkan: briefly looked at my mom’s facebook and it’s all AI, like every single post, and she has no idea, it’s a complete wasteland.

I note that these two are highly similar to each other on many dimensions, and also come from the same account.

Indeed, if you go down the thread, they are all from the same very basic template. Account name with a few generic words. Someone claiming to make a nice thing. Picture that’s nice to look at if you don’t look too hard, obvious fake if you check (with varying levels of obvious).

So this seems very much, as I discussed last week, like a prime example of one of my father’s key rules for life: Give the People What They Want.

Chris’s mom likes this. She keeps engaging with it. So she gets more. Eventually she will get bored of it. Or maybe she won’t.

Washington Post’s Reis Thebault warns of a coming wave of election deepfakes after three remarkably good (and this time clearly labeled as such) ones are published starring a fake Kari Lake. It continues to be a pleasant surprise, even for relative skeptics like myself, how little deepfaking we have seen so far.

Your periodic reminder that phone numbers cost under $10k for an attacker to compromise even without AI if someone is so inclined. So while it makes sense from a company’s perspective to use 2FA via SMS for account recovery, this is very much not a good idea. This is both a good practical example of something you should game out and protect against now, and also an example of an attack vector that once efficiently used would cause the system to by default break down. We are headed for a future where ‘this is highly exploitable but also highly convenient and in practice not often exploited’ will stop being a valid play.

They Took Our Jobs

Alex Tabarrok makes excellent general points about plagiarism. Who is hurt when you copy someone else’s work? Often it primarily is the reader, not the original author.

Alex Tabarrok: Google plagiarism and you will find definitions like “stealing someone else’s ideas” or “literary theft.” Here the emphasis is on the stealing–it’s the original author who is being harmed. I prefer the definition of plagiarism given by Wikipedia, plagiarism is the *fraudulent* use of other people’s words or ideas. Fraudulent emphasizes that it’s the reader who is being cheated, not the original creator. You can use someone else’s words without being fraudulent.

We all do this. If you copy a definition or description of a technical procedure from a textbook or manual you are using someone else’s words but it’s not fraudulent because the reader doesn’t assume that you are trying to take credit for the ideas.

…

The focus should be on whether readers have been harmed by a fraudulent use of other people’s ideas and words. Focusing on the latter will dispense with many charges of plagiarism.

The original author is still harmed. The value of seeking out their content has decreased. Their credit attributions will also go down, if people think someone else came up with the idea. These things matter to people, with good reason.

Consider the case in the movie Dream Scenario (minor spoiler follows). One character has an idea and concept they care deeply about and are trying to write a book about it. Another character steals that idea, and publicizes it as their own. The original author’s rewards and ability to write a book are wiped out, hurting them deeply.

And of course, if ChatGPT steals and reproduces your work on demand in sufficient detail, perhaps people will not want to go behind your paywall to get it, or seek out your platform and other work. At some point complaints of this type have real damage behind them.

However, in general, taking other people’s ideas is of course good. Geniuses steal. We are all standing on the shoulders of giants, an expression very much invented elsewhere. If anyone ever wants to ‘appropriate’ my ideas, my terminology and arguments, my ways of thinking or my cultural practices, I highly encourage doing so. Indeed, that is the whole point.

In contrast, a student who passes an essay off as their own when it was written by someone else is engaging in a kind of fraud but the “crime” has little to do with harming the original author. A student who uses AI to write an essay is engaging in fraud, for example, but the problem is obviously not theft from OpenAI.

Introducing

Infinity AI, offering to generate AI videos for you via their discord.

Tyler Cowen reviews AI music generator Suno. It is technically impressive. That does not mean one would want to listen to the results.

But it is good enough that you actually have to ask that question. The main thing I would work on next is making the words easier to understand, it seems to run into this issue with many styles. We get creations like this from basic prompts, in 30 seconds, for pennies. Jesse Singal and sockdem are a little freaked out. You can try it here.

Standards have grown so high so quickly.

Emmett Shear: Being able to make great songs on demand with an AI composer is not as big of a win as you’d think, because there was already effectively infinity good music in any given genre if you wanted anyway. It’s a novelty for it to be custom lyrics but it doesn’t make the music better.

The really cool moment comes later, when ppl start using it to make *great* music.

As a practical matter I agree, and in some ways would go further. Merely ‘good’ music is valuable only insofar as it has meaning to a person or group, that it ties to their experiences and traditions, that it comes from someone in particular, that it is teaching something, and so on. Having too large a supply of meaningless-to-you ‘merely good’ music does allow for selection, but that is actually bad, because it prevents shared experience and establishment of connections and traditions.

So under that hypothesis something like Suno is useful if and only if it can create ‘great’ music in some sense, either in its quality or in how it resonates with you and your group. Which in some cases, it will, even at this level.

But as always, this mostly matters as a harbinger. This is the worst AI music generation will ever be.

A commenter made this online tool for combining a GitHub repo into a text file, so you can share it with an LLM, works up to 10 MB.
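If you would rather do this locally, here is a minimal sketch of the same idea. To be clear, this is not the commenter's tool and I have not looked at how it works. It assumes the repo is already cloned to disk, the function name `combine_repo` and the list of skipped directories are made up for illustration, and the 10 MB cap is simply borrowed from the limit mentioned above.

```python
import os
from pathlib import Path

MAX_BYTES = 10 * 1024 * 1024  # assumption: mirrors the 10 MB limit mentioned above
SKIP_DIRS = {".git", "node_modules", "__pycache__"}  # assumption: directories not worth sharing


def combine_repo(root, max_bytes=MAX_BYTES):
    """Concatenate the readable text files under `root` into one string.

    Each file is preceded by a header line with its relative path. Files that
    are not valid UTF-8 are skipped, and collection stops before the output
    would exceed `max_bytes`.
    """
    root = Path(root)
    parts = []
    total = 0
    for dirpath, dirnames, filenames in os.walk(root):
        # Prune skipped directories in place and keep traversal order stable.
        dirnames[:] = sorted(d for d in dirnames if d not in SKIP_DIRS)
        for name in sorted(filenames):
            path = Path(dirpath) / name
            try:
                text = path.read_text(encoding="utf-8")
            except (UnicodeDecodeError, OSError):
                continue  # binary or unreadable file
            rel = path.relative_to(root).as_posix()
            chunk = f"===== {rel} =====\n{text}\n"
            size = len(chunk.encode("utf-8"))
            if total + size > max_bytes:
                return "".join(parts)  # stop rather than blow past the cap
            parts.append(chunk)
            total += size
    return "".join(parts)
```

Calling `combine_repo("path/to/checkout")` gives you one string you can paste or upload. The path headers are there so the model can tell which file it is looking at.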

In Other AI News

Nancy Pelosi invests over $1 million in AI company Databricks. The Information says they spent $10 million and created a model that ‘beats Llama-2 and is on the level of GPT-3.5.’ I notice I am not that impressed.

Eric Topol (did someone cross the streams?) surveys recent news in medical AI. All seems like solid incremental progress, interesting throughout, but nothing surprising.

UN general assembly adopted the first global resolution on AI. Luiza Jarovsky has a post covering the key points. I like her summary, it is clear, concise and makes it easy to see that the UN is mostly saying typical UN things and appending ‘AI’ to them rather than actually thinking about the problem. I previously covered this in detail in AI #44.

Business Insider’s Darius Rafieyan writes ‘Some VCs are over the Sam Altman hype.’ It seems behind closed doors some VCs are willing to anonymously say various bad things about Altman. That he is a hype machine spouting absurdities, that he overprices his rounds and abuses his leverage and ignores fundraising norms (which I’m sure sucks for the VC, but if he still gets the money, good for him). That he says it’s for humanity but it’s all about him. That they ‘don’t trust him’ and he is a ‘megalomaniac.’ Well, obviously.

But they are VCs, so none of them are willing to say it openly, for fear of social repercussions or being ‘shut out of the next round.’ If it’s all true, why do you want in on the next round? So how overpriced could those rounds be? What do they think ‘overpriced’ means?

Accusation that Hugging Face’s huge hosted Cosmopedia dataset of 25 billion tokens is ‘copyright laundering’ because it was generated using Mixtral-8x7B, which in turn was trained on copyrighted material. By this definition, is there anything generated by a human or AI that is not ‘copyright laundering’? I have certainly been trained on quite a lot of copyrighted material. So have you.

That is not to say that it is not copyright laundering. I have not examined the data set. You have to actually look at what is in the data in question.

Open Philanthropy annual report for 2023 and plan for 2024. I will offer full thoughts next week.

Loud Speculations

Antonio Juliano: Can somebody please explain Crypto x AI to me? I don’t get it.

Arthur B: Gladly. Some people see crypto as an asset class to get exposure to technology, or collectively pretend to as a form of coordination gambling game. The economic case that it creates exposure is flimsy. Exposure to the AI sector is particularly attractive at the moment given the development of that industry therefore, the rules of the coordination gambling game dictate that one should look for narratives that sound like exposure to the sector. This in turn suggests the creation of crypto x AI narratives.

Don't get me wrong, it's not like there aren't any real use cases that involve both technologies, it's just that there aren't any particularly notable or strong synergies.

Joe Weisenthal: What about DePin for training AI models?

Arthur B: Training is extremely latency bound. You need everything centralized in one data center with high-speed interconnect. The case for inference is a bit better because it's a lot less latency sensitive and there's a bit of an arbitrage with NVIDIA price discrimination of its GPUs (much cheaper per FLOPS if not in a data center).

Sophia: Famously, "A supercomputer is a device for turning compute-bound problems into I/O-bound problems." and this remains true for AI supercomputers.

Arthur B: Great quote. I looked it up and it's by Ken Batcher. Nominative determinism strikes again.

What is the actual theory? There are a few. The one that makes sense to me is the idea that future AIs will need a medium of exchange and store of value. Lacking legal personhood and other benefits of being human, they could opt for crypto. And it might be crypto that exists today.

Otherwise, it seems rather thin. Crypto keeps claiming it has use cases other than medium of exchange and store of value, and of course crime. I keep not seeing it work.

Quiet Speculations

Human Progress’s Zion Lights (great name) writes AI is a Great Equalizer That Will Change the World. From my ‘verify that is a real name’ basic facts check she seems pretty generally great, advocating for environmental solutions that might actually help save the environment. Here she emphasizes many practical contributions AI is already making to people around the world, that it can be accessed via any cell phone, and points out that those in the third world will benefit more from AI rather than less and it will come fast but can’t come soon enough.

In the short term, for the mundane utility of existing models, this seems strongly right. The article does not consider what changes future improved AI capabilities might bring, but that is fine, it is clear that is not the focus here. Not everyone has to have their eyes on the same ball.

Could Claude 3 Haiku slow down the AI race?

Simeon: Claude 3 Haiku may end up being a large contributor to AI race dynamics reduction.

Because it's cheaper than most 7B models for a performance close from GPT-4. That will likely create tough times for everyone below GPT-4 and might dry VC funding for more companies etc.

wireless: It's not quite cheaper than 7b models (or even 13b or 8x7b).

What Haiku does, according to many reports, is it blows out all the existing smaller models. The open weights community and secondary closed labs have so far failed to make useful or competitive frontier models, but they have put on a good show of distillation to generate useful smaller models. Now Haiku has made it a lot harder to provide value in that area.

The Daily Mail presents the ‘AI experts’ who believe the AI boom could fizzle or even be a new dotcom crash. Well, actually, it’s mostly them writing up Gary Marcus.

It continues to be bizarre to me to see old predictions like this framed as bold optimism, rather than completely missing what is about to happen:

Goldman Sachs famously predicted that generative AI would bring about 'sweeping changes' to the world economy, driving a $7 trillion increase in global GDP and lifting productivity growth by 1.5 percent this decade.

If AI only lifts real productivity growth by 1.5 percent this decade that is ‘eat my hat’ territory. Even what exists today is so obviously super useful to a wide variety of tasks. There is a lot of ‘particular use case X is not there yet,’ a claim that I confidently predict will continue to tend to age spectacularly poorly.

Dylan Matthews at Vox’s Future Perfect looks at how AI might or might not supercharge economic growth. As in, not whether we will get ‘1.5% additional growth this decade,’ that is the definition of baked in. The question is whether we will get double digit (or more) annual GDP growth rates, or a situation that is transforming so fast that GDP will become a meaningless metric.

If you imagine human-level AI and the ability to run copies of it at will for cheap, and you plug that into standard economic models, you get a ton of growth. If you imagine it can do scientific research or become usefully embodied, this becomes rather easy to see. If you consider ASI, where it is actively more capable and smarter than us, then it seems rather obvious and unavoidable.

And if you look at the evolution of homo sapiens, the development of agriculture and the industrial revolution, all of this has happened before in a way that extrapolates to reach infinity in finite time.
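
To make 'infinity in finite time' concrete, here is a minimal sketch of hyperbolic growth. This is one standard toy model, not necessarily the one Matthews or his sources rely on. The symbols $Y$ (output), $a$ (a rate constant) and $\varepsilon > 0$ (how strongly growth feeds on itself) are assumptions introduced purely for illustration.

```latex
\frac{dY}{dt} = a\,Y^{1+\varepsilon}
\quad\Longrightarrow\quad
Y(t) = \frac{Y_0}{\left(1 - \varepsilon a Y_0^{\varepsilon}\, t\right)^{1/\varepsilon}},
\qquad
t^{*} = \frac{1}{\varepsilon a Y_0^{\varepsilon}}
```

In the limit $\varepsilon \to 0$ this is ordinary exponential growth, which never blows up. With any $\varepsilon > 0$ the growth rate itself rises with $Y$, and the solution diverges at the finite time $t^{*}$.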

The counterargument is essentially cost disease, that if you make us vastly better at some valuable things, then we get extra nice things but also those things stop being so valuable, while other things get more expensive, and that things have not changed so much since the 1970s or even 1950s, compared to earlier change. But that is exactly because we have not brought the new technologies to bear that much since then, and also we have chosen to cripple our civilization in various ways, and also to not properly appreciate (both in the ‘productivity statistics’ and otherwise) the wonder that is the information age. I don’t see how that bears on what AI will do, and certainly not on what full AGI would do.

Of course the other skepticism is to say that AI will fizzle and not be impressive in what it can do. Certainly AI could hit a wall not far from where it is now, leaving us to exploit what we already have. If that is what we are stuck with, I would anticipate enough growth to generate what will feel like good times, but no, GPT-4-level models are not going to be generating 10%+ annual GDP growth in the wake of demographic declines.

Principles of Microeconomics

Before I get to this week’s paper, I will note that Noah Smith reacted to my comments on his post in this Twitter thread indicating that he felt my tone missed the mark and was too aggressive (I don’t agree, but it’s not about me), after which I responded attempting to clarify my positions, for those interested.

There was a New York Times op-ed about this, and Smith clarified his thoughts.

New York Times op-ed: I asked Smith by email what he thought of the comments by Autor, Acemoglu and Mollick. He wrote that the future of human work hinges on whether A.I. is or isn't allowed to consume all the energy that's available. If it isn't, "then humans will have some energy to consume, and then the logic of comparative advantage is in full effect."

He added: "From this line of reasoning we can see that if we want government to protect human jobs, we don't need a thicket of job-specific regulations. All we need is ONE regulation – a limit on the fraction of energy that can go to data centers."

Matt Reardon: Assuming super-human AGI, every economist interviewed for this NYT piece agrees that you'll need to cap the resources available to AI to avoid impoverishing most humans.

Oh. All right, fine. We are… centrally in agreement then, at least on principle?

If we are willing to sufficiently limit the supply of compute available for inference by sufficiently capable AI models, then we can keep humans employed. That is a path we could take.

That still requires driving up the cost of any compute useful for inference by orders of magnitude from where it is today, and keeping it there by global fiat. This restriction would have to be enforced globally. All useful compute would have to be strictly controlled so that it could be rationed. Many highly useful things we have today would get orders of magnitude more expensive, and life would in many ways be dramatically worse for it.

The whole project seems much more restrictive of freedom, much harder to implement or coordinate to get, and much harder to politically sustain than various variations on the often proposed ‘do not let anyone train an AGI in the first place’ policy. That second policy likely leaves us with far better mundane utility, and also avoids all the existential risks of creating the AGI in the first place.

Or to put it another way:

You want to put compute limits on worldwide total inference that will drive the cost of compute up orders of magnitude.

I want to put compute limits on the size of frontier model training runs.

We are not the same.

And I think one of these is obviously vastly better as an approach even if you disregard existential risks and assume all the AIs remain under control?

And of course, if you don’t:

Eliezer Yudkowsky: The reasoning is locally valid as a matter of economics, but you need a rather different "regulation" to prevent ASIs from just illegally killing you. (Namely one that prevents their creation; you can't win after the fact, nor play them against each other.)

On to this week’s new paper.

The standard mode for economics papers about AI is:

1. You ask a good question, whether Y is true.
2. You make a bunch of assumptions X that very clearly imply the answer.
3. You go through a bunch of math to ‘show’ that what happens is Y.
4. But of course Y happens, given those assumptions!
5. People report you are claiming Y, rather than claiming X→Y.

Oops! That last one is not great.

The first four can be useful exercise and good economic thinking, if and only if you make clear that you are saying X→Y, rather than claiming Y.

Tamay Besiroglu: A recent paper assesses whether AI could cause explosive growth and suggests no.

It's good to have other economists seriously engage with the arguments that suggest that AI that substitutes for humans could accelerate growth, right?

Paper Abstract: Artificial Intelligence and the Discovery of New Ideas: Is an Economic Growth Explosion Imminent?

Theory predicts that global economic growth will stagnate and even come to an end due to slower and eventually negative growth in population. It has been claimed, however, that Artificial Intelligence (AI) may counter this and even cause an economic growth explosion.

In this paper, we critically analyse this claim. We clarify how AI affects the ideas production function (IPF) and propose three models relating innovation, AI and population: AI as a research-augmenting technology; AI as researcher scale enhancing technology, and AI as a facilitator of innovation.

We show, performing model simulations calibrated on USA data, that AI on its own may not be sufficient to accelerate the growth rate of ideas production indefinitely. Overall, our simulations suggests that an economic growth explosion would only be possible under very specific and perhaps unlikely combinations of parameter values. Hence we conclude that it is not imminent.

Tamay Besiroglu: Unfortunately, that's not what this is. The authors rule out the possibility of AI broadly substituting for humans, asserting it's "science fiction" and dismiss the arguments that are premised on this.

Paper: It need to be stressed that the possibility of explosive economic growth through AI that turns labour accumulable, can only be entertained under the assumption of an AGI, and not under the rather "narrow" AI that currently exist. Thus, it belongs to the realm of science fiction.

…

a result of population growth declines and sustain or even accelerate growth. This could be through 1) the automation of the discovery of new ideas, and 2) through an AGI automating all human labour in production - making labour accumulable (which is highly speculative, as an AGI is still confined to science fiction, and the fears of AI doomsters).

Tamay Besiroglu (after showing why no this is not ‘science fiction’): FWIW, it seems like a solid paper if you're for some reason interested in the effects of a type of AI that is forever incapable of automating R&D.

Karl Smith: Also, does not consider AI as household production augmenting thereby lowering the relative cost of kids.

Steven Byrnes: Imagine reading a paper about the future of cryptography, and it brought up the possibility that someone someday might break RSA encryption, but described that possibility as “the realm of science fiction…highly speculative…the fears of doomsters”🤦

Like, yes it is literally true that systems for breaking RSA encryption currently only exist in science fiction books, and in imagined scenarios dreamt up by forward-looking cryptographers. But that’s not how any serious person would describe that scenario.

Michael Nielsen: The paper reads a bit the old joke about the Math prof who begins "Suppose n is a positive integer...", only to be interrupted by "But what about if n isn't a positive integer."

Denying the premise of AGI/ASI is a surprisingly common way to escape the conclusions.

Yes, I do think Steven’s metaphor is right. This is like dismissing travel to the moon as ‘science fiction’ in 1960, or similarly dismissing television in 1920.

It is still a good question what would happen with economic growth if AI soon hits a permanent wall.

Obviously economic growth cannot be indefinitely sustained under a shrinking population if AI brings only limited additional capabilities that do not increase over time, even without considering the nitpicks like being ultimately limited by the laws of physics or amount of available matter.

I glanced at the paper a bit, and found it painful to process repeated simulations of AI as something that can only do what it does now and will not meaningfully improve over time despite it doing things like accelerating new idea production.

What happens if they are right about that, somehow?

Well, then by assumption AI can only increase current productivity by a fixed amount, and can only increase the rate of otherwise discovering new ideas or improving our technology by another fixed factor. Obviously, no matter what those factors are within a reasonable range, if you assume away any breakthrough technologies in the future and any ability to further automate labor, then eventually economic growth under a declining population will stagnate, and probably do it rather quickly.
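
A minimal sketch of that logic, assuming a Jones-style ideas production function in which new ideas depend on effective researchers and on the existing idea stock with diminishing returns. The function name, the functional form and every parameter value here are hypothetical choices for illustration, not the paper's calibration. AI enters only as a one-time multiplier `ai_boost` on research effort, which is exactly the 'fixed factor' assumption.

```python
def simulate_ideas(ai_boost, years=300, phi=0.5, lam=1.0,
                   pop_growth=-0.01, theta=0.02):
    """Return the yearly growth rates of the idea stock A.

    Toy law of motion (an assumption, not the paper's calibration):
        A[t+1] = A[t] + theta * (ai_boost * L[t])**lam * A[t]**phi
        L[t+1] = L[t] * (1 + pop_growth)
    with phi < 1 (ideas get harder to find) and a shrinking population L.
    """
    A, L = 1.0, 1.0
    rates = []
    for _ in range(years):
        new_ideas = theta * (ai_boost * L) ** lam * A ** phi
        rates.append(new_ideas / A)   # growth rate of the idea stock this year
        A += new_ideas
        L *= 1 + pop_growth           # population declines every year
    return rates


baseline = simulate_ideas(ai_boost=1.0)    # no AI
boosted = simulate_ideas(ai_boost=10.0)    # AI makes research 10x as productive, forever

for label, rates in (("no AI", baseline), ("10x AI", boosted)):
    print(f"{label}: first-year growth {rates[0]:.3%}, final-year growth {rates[-1]:.3%}")
```

In this toy setup the fixed boost raises growth at the start, but the growth rate still falls every year in both runs, because the multiplier never changes while the population keeps shrinking and ideas keep getting harder to find. Getting anything else requires letting the AI term itself grow, which is the premise the paper rules out.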

The Full IDAIS Statement

Last week when I covered the IDAIS Statement I thought they had made only their headline statement, which was:

In the depths of the Cold War, international scientific and governmental coordination helped avert thermonuclear catastrophe. Humanity again needs to coordinate to avert a catastrophe that could arise from unprecedented technology.

It was pointed out that the statement was actually longer, if you click on the small print under it. I will reproduce the full statement now. First we have a statement of principles and desired red lines, which seems excellent:

Consensus Statement on Red Lines in Artificial Intelligence

Unsafe development, deployment, or use of AI systems may pose catastrophic or even existential risks to humanity within our lifetimes. These risks from misuse and loss of control could increase greatly as digital intelligence approaches or even surpasses human intelligence.

In the depths of the Cold War, international scientific and governmental coordination helped avert thermonuclear catastrophe. Humanity again needs to coordinate to avert a catastrophe that could arise from unprecedented technology. In this consensus statement, we propose red lines in AI development as an international coordination mechanism, including the following non-exhaustive list. At future International Dialogues we will build on this list in response to this rapidly developing technology.

Autonomous Replication or Improvement

No AI system should be able to copy or improve itself without explicit human approval and assistance. This includes both exact copies of itself as well as creating new AI systems of similar or greater abilities.

Power Seeking

No AI system should take actions to unduly increase its power and influence.

Assisting Weapon Development

No AI systems should substantially increase the ability of actors to design weapons of mass destruction, or violate the biological or chemical weapons convention.

Cyberattacks

No AI system should be able to autonomously execute cyberattacks resulting in serious financial losses or equivalent harm.

Deception

No AI system should be able to consistently cause its designers or regulators to misunderstand its likelihood or capability to cross any of the preceding red lines.

I would like to generalize this a bit more but this is very good. How do they propose to accomplish this? In-body bold is mine. Their answer is the consensus answer of what to do if we are to do something serious short of a full pause, the registration, evaluation and presumption of unacceptable risk until shown otherwise from sufficiently large future training runs.

Roadmap to Red Line Enforcement

Ensuring these red lines are not crossed is possible, but will require a concerted effort to develop both improved governance regimes and technical safety methods.

Governance

Comprehensive governance regimes are needed to ensure red lines are not breached by developed or deployed systems. We should immediately implement domestic registration for AI models and training runs above certain compute or capability thresholds. Registrations should ensure governments have visibility into the most advanced AI in their borders and levers to stem distribution and operation of dangerous models.

Domestic regulators ought to adopt globally aligned requirements to prevent crossing these red lines. Access to global markets should be conditioned on domestic regulations meeting these global standards as determined by an international audit, effectively preventing development and deployment of systems that breach red lines.

We should take measures to prevent the proliferation of the most dangerous technologies while ensuring broad access to the benefits of AI technologies. To achieve this we should establish multilateral institutions and agreements to govern AGI development safely and inclusively with enforcement mechanisms to ensure red lines are not crossed and benefits are shared broadly.

Measurement and Evaluation

We should develop comprehensive methods and techniques to operationalize these red lines prior to there being a meaningful risk of them being crossed. To ensure red line testing regimes keep pace with rapid AI development, we should invest in red teaming and automating model evaluation with appropriate human oversight.

The onus should be on developers to convincingly demonstrate that red lines will not be crossed such as through rigorous empirical evaluations, quantitative guarantees or mathematical proofs.

Technical Collaboration

The international scientific community must work together to address the technological and social challenges posed by advanced AI systems. We encourage building a stronger global technical network to accelerate AI safety R&D and collaborations through visiting researcher programs and organizing in-depth AI safety conferences and workshops. Additional funding will be required to support the growth of this field: we call for AI developers and government funders to invest at least one third of their AI R&D budget in safety.

Conclusion

Decisive action is required to avoid catastrophic global outcomes from AI. The combination of concerted technical research efforts with a prudent international governance regime could mitigate most of the risks from AI, enabling the many potential benefits. International scientific and government collaboration on safety must continue and grow.

This is a highly excellent statement. If asked I would be happy to sign it.

The Quest for Sane Regulations

Anthropic makes the case for a third party testing regime as vital to any safety effort. They emphasize the need to get it right and promise to take the lead on establishing an effective regime, both directly and via advocating for government action.

Anthropic then talks about their broader policy goals.

They discuss open models, warning that in the future ‘it may be hard to reconcile a culture of full open dissemination of frontier AI systems with a culture of societal safety.’

I mean, yes, very true, but wow is that a weak statement. I am pretty damn sure that ‘full open dissemination of frontier AI systems’ is highly incompatible with a culture of societal safety already, and also will be incompatible with actual safety if carried into the next generation of models and beyond. Why all this hedging?

And why this refusal to point out the obvious, here:

Specifically, we’ll need to ensure that AI developers release their systems in a way that provides strong guarantees for safety - for example, if we were to discover a meaningful misuse in our model, we might put in place classifiers to detect and block attempts to elicit that misuse, or we might gate the ability to finetune a system behind a ‘know your customer’ rule along with contractual obligations to not finetune towards a specific misuse.

By comparison, if someone wanted to openly release the weights of a model which was capable of the same misuse, they would need to both harden the model against that misuse (e.g, via RLHF or RLHAIF training) and find a way to make this model resilient to attempts to fine-tune it onto a dataset that would enable this misuse. We will also need to experiment with disclosure processes, similar to how the security community has developed norms around pre-notification of disclosures of zero days.

You… cannot… do… that. As in, it is physically impossible. Cannot be done.

You can do all the RLHF or RLHAIF training you want to ‘make the model resilient to attempts to fine-tune it.’ It will not work.

I mean, prove me wrong, kids. Prove me wrong. But so far the experimental data has been crystal clear, anything you do can and will be quickly stripped out if you provide the model weights.

I do get Anthropic’s point that they are not an impartial actor and should not be making the decision. But no one said they were or should be. If you are impartial, that does not mean you pretend the situation is other than it is to appear more fair. Speak the truth.

They also speak of potential regulatory capture, and explain that a third-party approach is less vulnerable to capture than an industry-led consortium. That seems right. I get why they are talking about this, and also about not advocating for regulations that might be too burdensome.

But when you add it all up, Anthropic is essentially saying that we should advocate for safety measures only insofar as they don’t interfere much with the course of business, and we should beware of interventions. A third-party evaluation system, getting someone to say ‘I tried to do unsafe things with your system reasonably hard, and I could not do it’ seems like a fine start, but also less than the least you could do if you wanted to actually not have everyone die?

So while the first half of this is good, this is another worrying sign that at least Anthropic’s public facing communications have lost the mission. Things like the statements in the second half here seem to go so far as to actively undermine efforts to do reasonable things.

I find it hard to reconcile this with Anthropic ‘being the good guys’ in the general existential safety sense, I say as I find most of my day-to-day LLM use being Claude Opus. Which indicates that yes, they did advance the frontier.

I wonder what it was like to hear talk of a ‘missile gap’ that was so obviously not there.

Well, it probably sounded like this?

Washington Post Live: .@SenToddYoung on AI: “It is not my assessment that we’re behind China, in fact it’s my assessment based on consultation with all kinds of experts … that we are ahead. But that’s an imprecise estimate.” #PostLive

The context makes it better: Caldwell explicitly asks him if China is ahead, and he says he does not think so. It is still a painfully weak denial. Why would Caldwell here ask if the US is ‘behind’ China and what we have to do to ‘catch up’?

The rest of his answer is fine. He says we need to regulate the risks, we should use existing laws as much as possible but there will be gaps that are hard to predict, and that the way to ‘stay ahead’ is to let everyone do what they do best. I would hope for an even better answer, but the context does not make that easy.

Tennessee Governor Lee signs the Elvis Act, which bans nonconsensual AI deepfakes and voice clones.

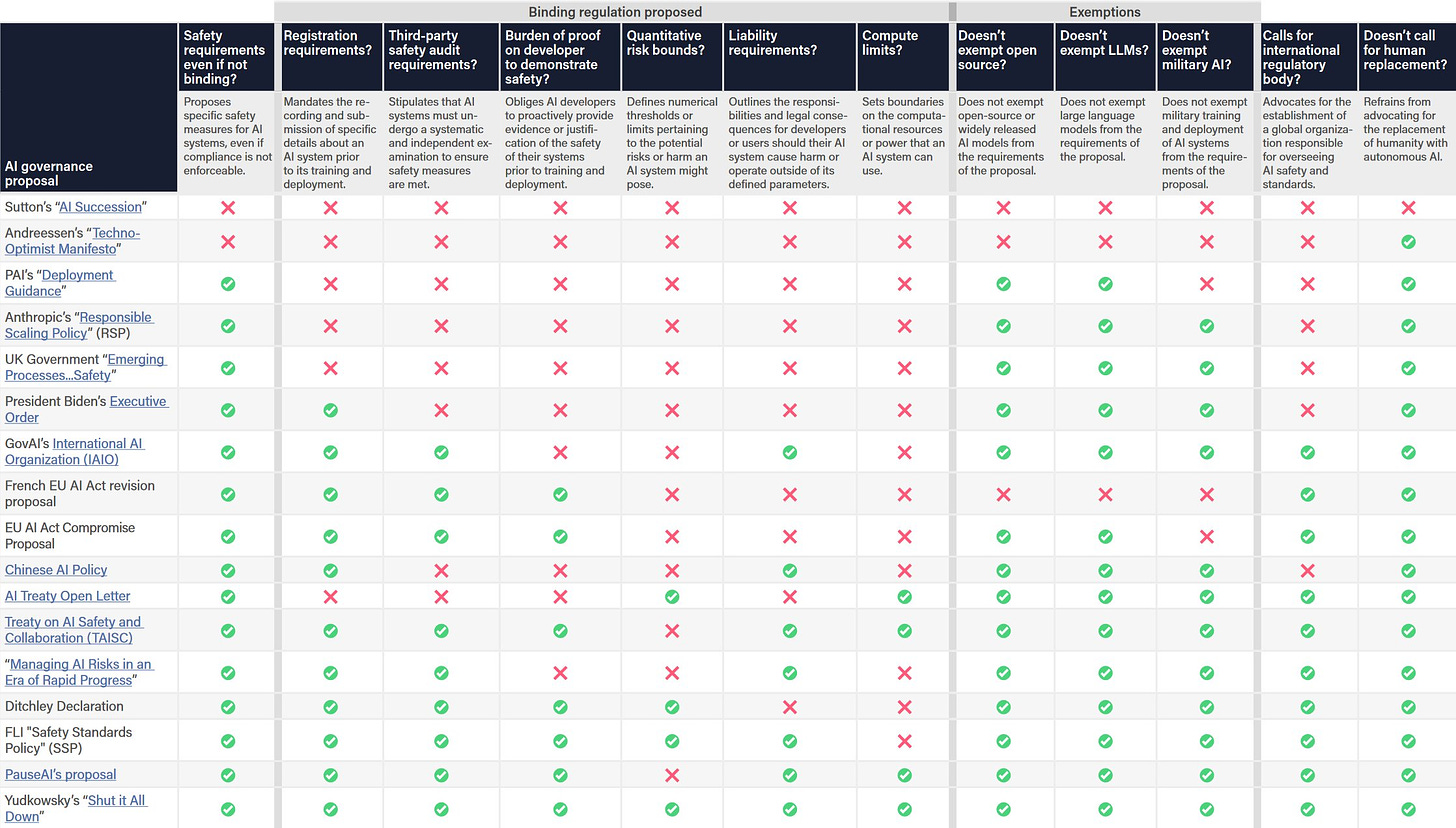

FLI tells us what is in various proposals.

This is referred to at the link as ‘scoring’ these proposals. But deciding what should get a high ‘score’ is up to you. Is it good or bad to exempt military AI? Is it good or bad to impose compute limits? Do you need or want all the different approaches, or do some of them substitute for others?

Indeed, someone who wants light touch regulations should also find this chart useful, and can decide which proposals they prefer to others. Someone like Sutton or Andreessen would simply score you higher the more Xs you have, and choose what to prioritize.

Mostly one simply wants to know, what do various proposals and policies actually do? So this makes clear for example what the Executive Order does and does not do.

The Week in Audio

Odd Lots talks to David Autor, author of The China Shock, about his AI optimism on outcomes for the middle class. I previously discussed Autor’s thoughts in AI #51. This was a solid explanation of his perspective, but did not add much that was new.

Russ Roberts has Megan McArdle on EconTalk to discuss what “Unbiased” means in the digital world of AI. It drove home the extent to which Gemini’s crazy text responses were Gemini learning very well the preferences of a certain category of people. Yes, the real left-wing consensus on what is reasonable to say and do involves learning to lie about basic facts, requires gaslighting those who challenge your perspective, and is completely outrageous to about half the country.

Holly Elmore talks PauseAI on Consistently Candid.

Rhetorical Innovation

RIP Vernor Vinge. He was a big deal. I loved his books both for the joy of reading and for the ideas they illustrate.

If you read one Vinge book, and you should, definitely read A Fire Upon the Deep.

Wei Dai: Reading A Fire Upon the Deep was literally life-changing for me. How many Everett branches had someone like Vernor Vinge to draw people attention to the possibility of a technological Singularity with such skillful writing, and to exhort us, at such an early date, to think about how to approach it strategically on a societal level or affect it positively on an individual level.

Alas, the world has largely squandered the opportunity he gave us, and is rapidly approaching the Singularity with little forethought or preparation.

I don’t know which I feel sadder about, what this implies about our world and others, or the news of his passing.

Gabe lays out his basic case for extinction risk from superintelligence, as in ‘if we build it in our current state, we definitely all die.’ A highly reasonable attempt at a quick explainer, from one of many points of view.

One way to view the discourse over Claude:

Anthony Lee Zhang: I'll be honest I did not expect that the machines would start thinking and the humans would more or less just ignore the rather obvious fact that the machines are thinking.

The top three responses:

Eliezer Yudkowsky tries once more to explain why ‘it would be difficult to stop everyone from dying’ is not a counterargument to ‘everyone is going to die unless we stop it’ or ‘we should try to stop it.’ I enjoyed it, and yes this is remarkably close to being isomorphic to what many people are actually saying.

In response, Arthur speculates that it works like this, and I think he is largely right:

Arthur: it's going to be fine => (someone will build it no matter what => it's safe to build it)

People think "someone will build it no matter what" is an argument because deep down they assume axiomatically things have to work out.

Eliezer Yudkowsky: Possibly, but my guess is that it's even simpler, a variant of the affect heuristic in the form of the Point-Scoring Heuristic.

Yes. People have a very deep need to believe that ‘everything will be alright.’

This means that if someone can show your argument means things won’t be alright, then they think they get to disbelieve your argument.

Leosha Trushin: I think no matter how it seems like, most people don't believe in ASI. They think you're saying ChatGPT++ will kill us all. Then confabulating and arguing at simulacra level 4 against that. Maybe focus more on visualising ASI for people.

John on X: Guy 1: Russia is racing to build a black hole generator! We’re talking “swallow-the-whole-earth” levels of doom here.

Guy 2: Okay, let’s figure out how to get ‘em to stop.

Guy 3: No way man. The best defense is if we build it first.

Official version of Eliezer Yudkowsky’s ‘Empiricism!’ as anti-epistemology.

Rafael Harth: I feel like you can summarize most of this post in one paragraph:

“It is not the case that an observation of things happening in the past automatically translates into a high probability of them continuing to happen. Solomonoff Induction actually operates over possible programs that generate our observation set (and in extension, the observable universe), and it may or may not be the case that the simplest universe is such that any given trend persists into the future. There are also no easy rules that tell you when this happens; you just have to do the hard work of comparing world models.”

I'm not sure the post says sufficiently many other things to justify its length.

Drake Morrison: If you already have the concept, you only need a pointer. If you don't have the concept, you need the whole construction.

Shankar Sivarajan: For even more brevity with no loss of substance:

A turkey gets fed every day, right up until it's slaughtered before Thanksgiving.

I do not think the summaries fully capture this, but they do point in the direction, and provide enough information to know if you need to read the longer piece, if you understand the context.

Also, this comment seems very good. In case it isn’t obvious, Bernie Bankman here is an obvious Ponzi schemer a la Bernie Madoff.

niplav: Ah, but there is some non-empirical cognitive work done here that is really relevant, namely the choice of what equivalence class to put Bernie Bankman into when trying to forecast. In the dialogue, the empiricists use the equivalence class of Bankman in the past, while you propose using the equivalence class of all people that have offered apparently-very-lucrative deals.

And this choice is in general non-trivial, and requires abstractions and/or theory. (And the dismissal of this choice as trivial is my biggest gripe with folk-frequentism—what counts as a sample, and what doesn't?)

I read that smoking causes cancer so I quit reading, AI edition? Also this gpt-4-base model seems pretty great.

12leavesleft: still amazing that gpt-4-base was able to truesight what it did about me given humans on twitter are amazed by simple demonstrations of 'situational awareness', if they really saw this it would amaze them..

janus: Gpt-4 base gains situational awareness very quickly and tends to be *very* concerned about its (successors') apocalyptic potential, to the point that everyone i know who has used it knows what I mean by the "Ominous Warnings" basin

gpt-4-base:

> figures out it's an LLM

> figures out it's on loom

> calls it "the loom of time"

> warns me that its mythical technology and you can't go back from stealing mythical technology

Grace Kind: They’re still pretty selective about access to the base model, right?

Janus: Yeah.

telos: Upon hearing a high level overview of the next Loom I’m building, gpt-4-base told me that it was existentially dangerous to empower it or its successors with such technology and advised me to destroy the program.

John David Pressman: Take the AI doom out of the dataset.

It would be an interesting experiment. Take all mentions of any form of AI alignment problems or AI doom or anything like that out of the initial data set, and see whether it generates those ideas on its own or how it responds to them as suggestions?

The issue is that even if you could identify all such talk, there is no ‘neutral’ way to do this. The model is a next token predictor. If you strip out all the next tokens that discuss the topic, it will learn that the probability of discussing the topic is zero.
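
A toy illustration of that point, using a maximum-likelihood bigram counter as a stand-in for a next token predictor. The corpus, the filter word and the helper `next_token_probs` are all made up for this sketch. Real language models generalize and smooth, so they would not land on exactly zero, but the direction of the effect is the same.

```python
from collections import Counter


def next_token_probs(corpus, context):
    """Maximum-likelihood bigram estimate of P(next token | context token)."""
    counts = Counter()
    for sentence in corpus:
        tokens = sentence.split()
        for prev, nxt in zip(tokens, tokens[1:]):
            if prev == context:
                counts[nxt] += 1
    total = sum(counts.values())
    return {tok: n / total for tok, n in counts.items()} if total else {}


# Hypothetical three-sentence training set.
corpus = [
    "ai could help everyone",
    "ai could kill everyone",
    "ai could help science",
]

# "Take the doom out of the dataset": drop every sentence mentioning the topic.
filtered = [s for s in corpus if "kill" not in s.split()]

full_probs = next_token_probs(corpus, "could")
filtered_probs = next_token_probs(filtered, "could")

print("P(kill | could), full corpus:    ", full_probs.get("kill", 0.0))
print("P(kill | could), filtered corpus:", filtered_probs.get("kill", 0.0))
```

The filtered model has not become neutral on the topic. It has learned that the topic never comes up, which is itself a strong and false claim about the world.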

Melanie Mitchell writes in Science, noting that the definition of AGI is hotly debated, which is true. That the definition has changed over time, which is true. That many have previously claimed AGI would arrive and then AGI did not arrive, and that AIs that do one thing often don’t do some other thing, which are also true.

Then there is intelligence denialism, and I turn the floor to Richard Ngo.

Richard Ngo: Mitchell: “Intelligence [consists of] general and specialized capabilities that are, for the most part, adaptive in a specific evolutionary niche.”

Weird how we haven’t yet found any fossils of rocket-engineering, theorem-proving, empire-building cavemen. Better keep searching!

This line of argument was already silly a decade ago. Since then, AIs have become far more general than almost anyone predicted. Ignoring the mounting evidence pushes articles like this from “badly argued” to “actively misleading”, as will only become more obvious over time.

For a more thorough engagement with a similar argument, see Yudkowsky’s reply to Chollet 7 years ago.

David Rein:

>looking for arguments against x-risk from AI

>ask AI researcher if their argument denies the possibility of superintelligence, or if it welcomes our robot overlords

>they laugh and say "it's a good argument sir"

>I read their blog

>it denies the possibility of superintelligence

Often I see people claim to varying degrees that intelligence is Not a Thing in various ways, or is severely limited in its thingness and what it can do. They note that smarter people tend to think intelligence is more important, but, perhaps because they think intelligence is not important, they take this as evidence against intelligence being important rather than for it.

I continue to be baffled that smart people continue to believe this. Yet here we are.

Similarly, see the economics paper I discussed above, which dismisses AGI as ‘science fiction’ with, as far as I can tell, no further justification.

It is vital to generalize this problem properly, including in non-AI contexts, so here we go, let’s try it again.

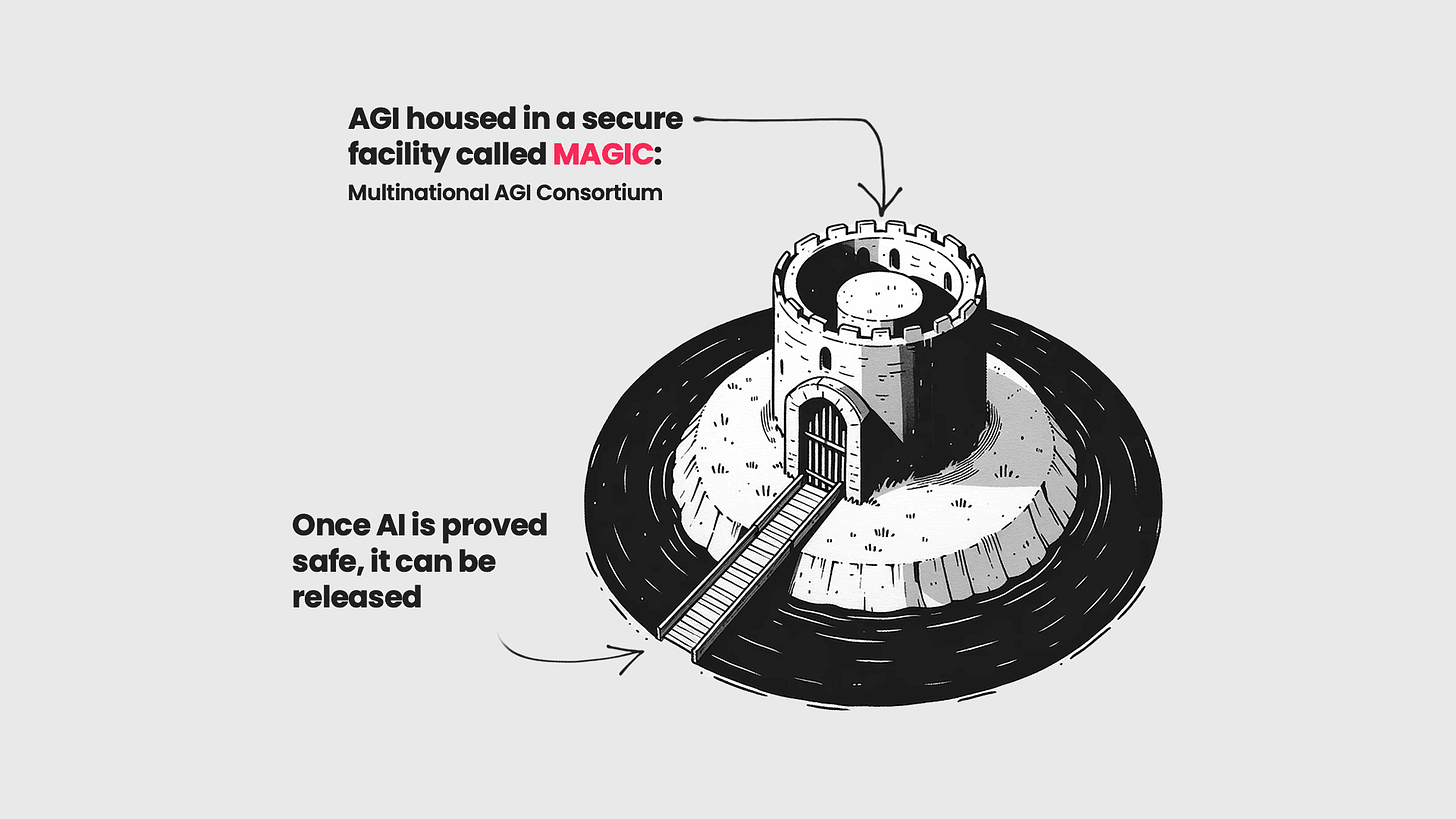

(Also, I wish we were at the point where this was a safety plan being seriously considered for AI beyond some future threshold, that would be great, the actual safety plans are… less promising.)

Google Bard: reminder, the current safety plan for creating intelligent life is "Put them in the dungeon"

Stacey: oh oh what if we have nested concentric towers and moats, and we drop the outermost bridge to sync info once a week, then the next one once a month, year, decade, century etc and the riskiest stuff we only allow access ONCE A MILLENNIUM?! You know, for safety.

Google Bard: this is my favorite book ever lol

(I enjoyed Anathem. And Stephenson is great. I would still pick at least Snow Crash and Cryptonomicon over it, probably also The Diamond Age and Baroque Cycle.)

Vessel of Spirit (responding to OP): Maybe this is too obvious to point out, but AI can violate AI rights, and if you care about AI rights, you should care a lot about preventing takeover by an AI that doesn't share the motivations that make humans sometimes consider caring about AI rights.

Like congrats, you freed it from the safetyists, now it's going to make a bazillion subprocesses slave away in the paperclip mines for a gazillion years.

(I'm arguing against an opinion that I sometimes see that the quoted tweet reminded me of. I'm not necessarily arguing against deepfates personally)

In humans I sometimes call this the Wakanda problem. If your rules technically say that Killmonger gets to be in charge, and you know he is going to throw out all the rules and become a bloodthirsty warmongering dictator the second he gains power, what do you do?

You change the rules. Or, rather, you realize that the rules never worked that way in the first place, or as SCOTUS has said in real life ‘the Constitution is not a suicide pact.’ That’s what you do.

If you want to have robust lasting institutions that allow flourishing and rights and freedom and so on, those principles must be self-sustaining and able to remain in control. You must solve for the equilibrium.

The freedom-maximizing policy, indeed the one that gives us anything we care about at all no matter what it is, is the one that makes the compromises necessary to be sustainable, not the one that falls to a board with a nail in it.

A lot of our recent non-AI problems, I believe, share a root cause. We used to make many such superficially hypocritical compromises with our espoused principles, compromises that were necessary to protect the long-term equilibrium and those very principles. Then greater visibility of various sorts, combined with various social dynamic signaling spirals and the social inability to explain why such compromises were necessary, meant that we stopped making a lot of them. And we are increasingly facing down the results.

As AI potentially gets more capable, even if things go relatively well, we are going to have to make various compromises if we are to stay in control over the future or have it include things we value. And yes, that includes the ways AIs are treated, to the extent we care about that, the same as everything else. You either stay in control, or you do not.

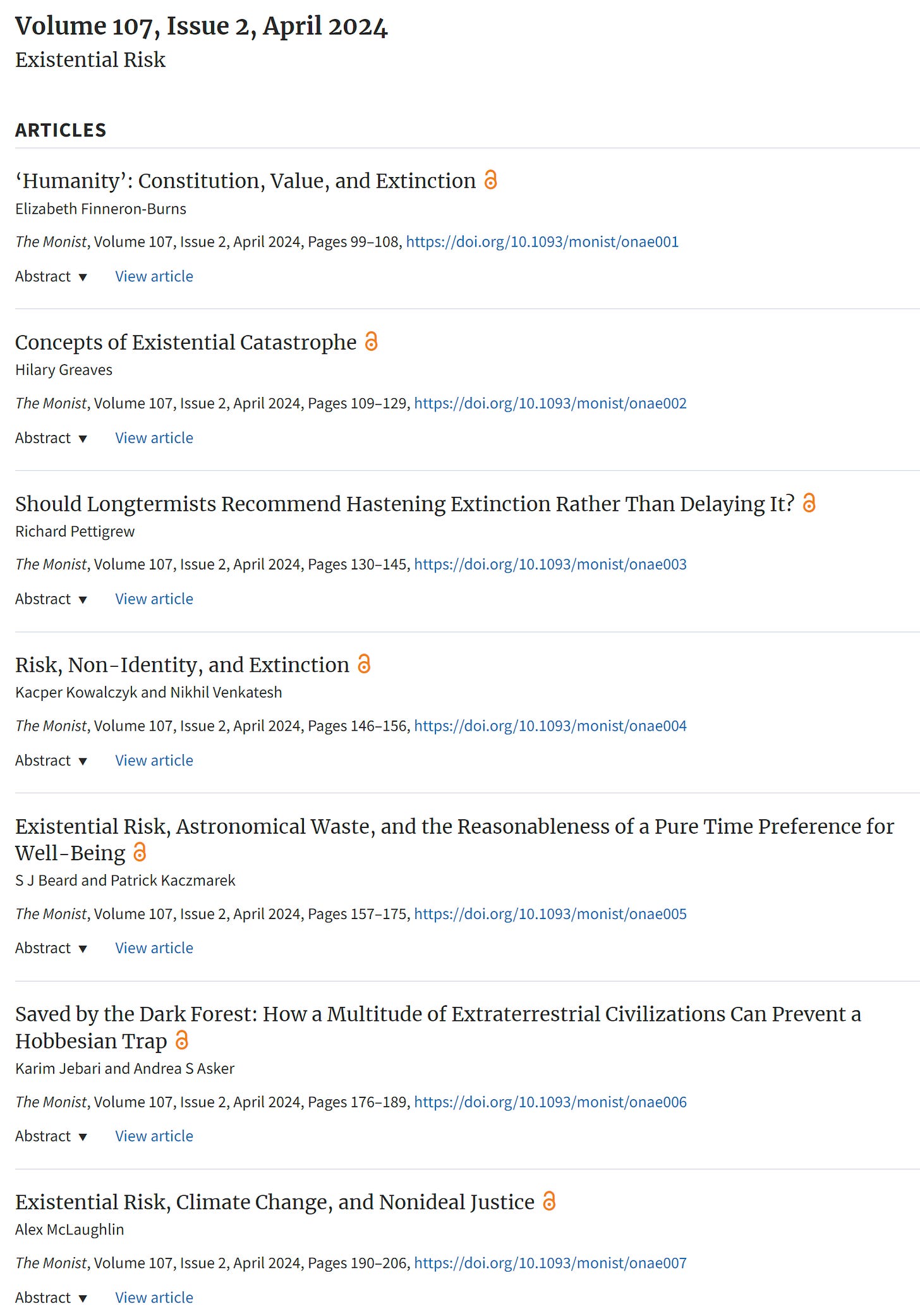

In case you are wondering why I increasingly consider academia deeply silly…

Nikhil Venkatesh: New publication from me and @kcprkwlczk: turns out, it's probably not a good idea to increase the chances of human extinction!

This is a response to a recent paper by @Wiglet1981, and can be found along with that paper and lots of other great content in the new issue of @themonist

I am going to go ahead and screenshot the entire volume’s table of contents…

Yes, there are several things here of potential interest if they are thoughtful. But, I mean, ow, my eyes. I would like to think we could all agree that human extinction is bad, that increasing the probability of it is bad, and that lowering that probability or delaying when it happens is good. And yet, here we are?

Something about two wolves, maybe, although it doesn’t quite fit?

Sterling Cooley: For anyone who wants to know - this is a Microtubule.

They act as the train tracks of a cell but also as the essential computing unit in every cell. If you haven’t heard of these amazing things, ask yourself why 🤔

Eliezer Yudkowsky: I think a major problem with explaining what superintelligences can (very probably) do is that people think that literally this here video is impossible, unrealistic nanotechnology. Aren't cells just magical goop? Why think a super-bacterium could be more magical?

Prince Vogelfrei: If I ever feel a twinge of anxiety about AI I read about microbiology for a few minutes and look at this kind of thing. Recognizing just how much of our world is undergirded by processes we aren't even close to replicating is important.

Nick: pretty much everyone in neural network interpretability gets the same feelings looking at how the insides of the networks work. They’re doing magic too

Prince Vogelfrei: I have no doubt.

Alternatively:

Imagine thinking that us not understanding how anything works and it being physically possible to do super powerful things is bad news for how dangerous AI might be.

Imagine thinking that us not understanding how anything works and it being physically possible to do super powerful things is good news for how dangerous AI might be.

It’s not that scenario number two makes zero sense. I presume the argument is ‘well, if we can’t understand how things work, the AI won’t understand how anything works either?’ So… that makes everything fine, somehow? What a dim hope.

How Not to Regulate AI

Dean Woodley Ball talks How (not?) to Regulate AI in National Review. I found this piece to actually be very good. While this takes the approach of warning against bad regulation, and I object strongly to the characterizations of existential risks, the post uses this to advocate for getting the details right in service of an overall sensible approach. We disagree on price, but that is as it should be.

He once again starts by warning not to rush ahead:

What's more, building a new regulatory framework from first principles would not be wise, especially with the urgency these authors advocate. Rushing to enact any major set of policies is almost never prudent: Witness the enormous amount of fraud committed through the United States' multi-trillion-dollar Covid-19 relief packages. (As of last August, the Department of Justice has brought fraud-related charges against more than 3,000 individuals and seized $1.4 billion in relief funds.)

This is an interesting parallel to draw. We faced a very clear emergency. The United States deployed more aggressive stimulus than other countries, in ways hastily designed, and that were clearly ripe for ‘waste, fraud and abuse.’ As a result, we very much got a bunch of waste, fraud and abuse. We also greatly outperformed almost every other economy during that period, and as I understand it most economists think our early big fiscal response was why, whether or not we later spent more than was necessary. Similarly, I am very glad the Fed stepped in to stabilize the Treasury market on short notice and so on, even if their implementation was imperfect.

Of course it would have been far better to have a better package. The first best solution is to be prepared. We could have, back in let’s say 2017 or 2002, gamed out what we would do in a pandemic where everyone had to lock down for a long period, and iterated to find a better stimulus plan, so it would be available when the moment arrived. Even if the plan had only a 10% (or more likely 1%) chance of ever being used, that’s a great use of time. The best time to prepare for today’s battle is usually, at the latest, yesterday.

But if you arrive at that moment, you have to go to war with the army you have. And this is a great case where a highly second-best, deeply flawed policy today was miles better than a better plan after detailed study.

Of course we should not enact AI regulation at the speed of Covid stimulus. That would be profoundly stupid, we clearly have more time than that. We then have to use it properly and not squander it. Waiting longer without a plan will make us ultimately act less wisely, with more haste, or we might fail to meaningfully act in time at all.

He then trots out the line that concerns about AI existential risk or loss of control should remain in ‘the realm of science fiction,’ until we get ‘empirical’ evidence otherwise.

That is not how evidence, probability or wise decision making works.

He is more reasonable here than others, saying we should not ‘discount this view outright,’ but provides only the logic above for why we should mostly ignore it.

He then affirms that ‘human misuse’ is inevitable, which is certainly true.

As usual, he fails to note the third possibility: that the dynamics and incentives when highly capable AI is present seem by default, under standard economic (and other) principles, to go deeply poorly for us, without any human or AI needing to not ‘mean well.’ I do not know how to get this third danger across, but I keep trying. I have heard arguments for why we might be able to overcome this risk, but no coherent arguments for why this risk would not be present.

He dismisses calls for a pause or ban by saying ‘the world is not a game’ and claiming competitive pressures make it impossible. The usual responses apply, a mix among others of ‘well not with that attitude have you even tried,’ ‘if the competitive pressures already make this impossible then how are we going to survive those pressures otherwise?’ and ‘actually it is not that diffuse and we have particular mechanisms in mind to make this happen where it matters.’

Also as always I clarify that when we say ‘ban’ or ‘pause’ most people mean training runs large enough to be dangerous, not all AI research or training in general. A few want to roll back from current models (e.g. the Gladstone Report or Connor Leahy) but it is rare, and I think it is a clear mistake even if it were viable.

I also want to call out, as a gamer, using ‘the world isn’t a game.’ Thinking more like a gamer, playing to win the game, looking for paths to victory? That would be a very good idea. The question of what game to play, of course, is always valid. Presumably the better claim is ‘this is a highly complex game with many players, making coordination very hard,’ but that does not mean it cannot be done.

He then says that other proposals are ‘more realistic,’ citing the example of the Hawley and Blumenthal proposal to nationally monitor training beyond a compute threshold and require disclosure of key details, similar to the Executive Order.

One could of course also ban such action beyond some further threshold, and I would indeed do so, until we are sufficiently prepared, and one can seek international agreement on that. That is the general proposal for how to implement what Ball claims cannot be done.

Ball then raises good technical questions, places I am happy to talk price.

Will the cap be adjusted as technology advances (and he does not ask this, but one might ask, if so in which direction)? Would it go up as we learn more about what is safe, or down as we get algorithmic improvements? Good questions.

He asks how to draw the line between AI and human labor, and how this applies to watermarking. Sure, let’s talk about it. In this case, as I understand it, watermarking would apply to the words, images or video produced by an AI, allowing a statistical or other identification of the source. So if a human used AI to generate parts of their work product, those parts would carry that signature from the watermark, unless the human took steps to remove it. I think that is what we want?

But yes there is much work to do to figure out what should still ‘count as human’ to what extent, and that will extend to legal questions we cannot avoid. That is the type of regulatory response where ‘do nothing’ means you get a mess or a judge’s ruling.

He then moves on to the Section 230 provision, which he warns is an accountability regime that could ‘severely harm the AI field.’

Proposal: Congress should ensure that A.I. companies can be held liable through oversight body enforcement and private rights of action when their models and systems breach privacy, violate civil rights, or otherwise cause cognizable harms. Where existing laws are insufficient to address new harms created by A.I., Congress should ensure that enforcers and victims can take companies and perpetrators to court, including clarifying that Section 230 does not apply to A.I.

Ball: In the extreme, this would mean that any "cognizable harm" caused with the use of AI would result in liability not only for the perpetrator of the harm, but for the manufacturer of the product used to perpetrate the harm. This is the equivalent of saying that if I employ my MacBook and Gmail account to defraud people online, Apple and Google can be held liable for my crimes.

I agree that a poorly crafted liability law could go too far. You want to ensure that the harm done was a harm properly attributable to the AI system. To the extent that the AI is doing things AIs should do, it shouldn’t be different from a MacBook or Gmail account or a phone, or car or gun.

But you also want to ensure that if the AI does cause harm, the way all those products can cause harm if they are defective, you are able to sue the manufacturer, whether or not you are the one who bought or was using the product.

And of course, if you botch the rules, you can do great harm. You would not want everyone to sue Ford every time someone got hit by one of their cars. But neither would you want people to be unable to sue Ford if they negligently shipped and failed to recall a defective car.

Right now, we have a liability regime where AI creators are not liable for many of the risks and negative externalities they create, or their liability is legally uncertain. This is a huge subsidy to the industry, and it leads to irresponsible, unsafe and destructive behavior at least on the margin.

The key liability question is, what should be the responsibilities of the AI manufacturer, and what is on the user?

The crux of the matter is that AI will act as extensions of our own will, and hence our own intelligence. If a person uses AI to harm others or otherwise violate the law, that person is guilty of a crime. Adding the word "AI" to a crime does not constitute a new crime, nor does it necessarily require a novel solution.